Over the past few weeks, I’ve rekindled my interest in Artificial Intelligence research, spurred by the recent buzz around ChatGPT’s release a few months ago. Although I explored this field back in 2016, the rapidly evolving landscape has motivated me to revisit it and dig deeper.

My current focus is on the inner workings of Neural Networks at the lowest levels of implementation. This journey involves a sea of mathematics, matrix multiplications, patience, and of course, plenty of coffee! I’m also working extensively with Python and TensorFlow, a powerful open-source Machine Learning platform created by Google.

Unveiling Visual Studio Code Dev Containers

Presently, my primary development environment is Visual Studio Code. Its lightweight nature, coupled with its many extension options, makes it a standout choice. One particularly remarkable feature of Visual Studio Code is Dev Containers. They run your code and all its dependencies inside a Docker container, so nothing extra has to be installed on your host operating system.

The appeal here is that no additional dependencies end up on your host OS, so it stays in its current state. This reminds me of the dedicated developer virtual machines I often used more than a decade ago, although Dev Containers are a far more streamlined alternative to full-fledged VM installations.

Embarking on the Dev Container Journey

Now, let’s explore how you can run TensorFlow in a Visual Studio Code Dev Container while using a dedicated GPU from your host operating system. My primary workstation is an AMD Ryzen system with a 12-core 3900XT CPU, an NVIDIA GeForce RTX 3080 Ti GPU, and 128 GB of RAM, running the latest version of Windows 11.

Access to a physical GPU directly within a Docker container is crucial, because Machine Learning workloads rely heavily on GPUs. GPUs can parallelize the training of Neural Networks across their many cores (CUDA cores, in the case of NVIDIA graphics cards), which is what makes them so well suited to this domain.
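To get a rough feel for this difference, the same matrix multiplication can be timed on the CPU and on the GPU. The following is only a minimal sketch, meant to be run inside the container once it is set up as described below. The helper names time_matmul and compare_devices, the matrix size, and the number of repeats are my own assumptions for illustration, and the numbers you get will depend entirely on your hardware.

```python
import time


def time_matmul(tf, device, size=2000, repeats=3):
    """Return the best wall-clock time (seconds) for one size x size matmul."""
    with tf.device(device):
        a = tf.random.normal([size, size])
        b = tf.random.normal([size, size])
        # Warm-up run so one-time initialization is not included in the timing.
        tf.reduce_sum(tf.linalg.matmul(a, b)).numpy()
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            # .numpy() copies the result to the host, so the op must have finished.
            tf.reduce_sum(tf.linalg.matmul(a, b)).numpy()
            best = min(best, time.perf_counter() - start)
    return best


def compare_devices(size=2000):
    """Time the same matmul on the CPU and, if one is visible, on the first GPU."""
    try:
        import tensorflow as tf
    except ImportError:
        return {}  # TensorFlow is not installed, so there is nothing to measure
    timings = {"CPU": time_matmul(tf, "/CPU:0", size)}
    if tf.config.list_physical_devices("GPU"):
        timings["GPU"] = time_matmul(tf, "/GPU:0", size)
    return timings


if __name__ == "__main__":
    for device, seconds in compare_devices().items():
        print(f"{device}: {seconds:.4f} s")
```

If only a CPU line is printed, TensorFlow does not see a GPU, which usually means the container was started without GPU access.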

Getting started with Dev Containers for Visual Studio Code is straightforward. Start by creating a folder in your file system, and within that folder, create a Dockerfile that describes the container Visual Studio Code will build for you. Below is the Dockerfile:

FROM tensorflow/tensorflow:latest-gpu-jupyter
RUN apt-get update
RUN apt-get install -y git
RUN apt-get install -y net-tools
RUN apt-get install -y iputils-ping

As shown, the container is based on the tensorflow/tensorflow:latest-gpu-jupyter image, which includes all the tools and dependencies needed for GPU-accelerated TensorFlow workloads. Additionally, my Docker containers always include a few extra tools such as ifconfig and ping (from the net-tools and iputils-ping packages), which help diagnose network connectivity issues.

A key part of your Dev Container setup is a folder named .devcontainer, which contains the file devcontainer.json. The following snippet shows this file’s contents:

// For format details, see https://aka.ms/devcontainer.json. For config options, see the
// README at: https://github.com/devcontainers/templates/tree/main/src/docker-existing-dockerfile
{
    "name": "Existing Dockerfile",
    "build":
    {
        // Sets the run context to one level up instead of the .devcontainer folder.
        "context": "..",
        // Update the 'dockerFile' property if you aren't using the standard 'Dockerfile' filename.
        "dockerfile": "../Dockerfile"
    },
    // Features to add to the dev container. More info: https://containers.dev/features.
    // "features": {},
    // Use 'forwardPorts' to make a list of ports inside the container available locally.
    // "forwardPorts": [],
    // Uncomment the next line to run commands after the container is created.
    // "postCreateCommand": "cat /etc/os-release",
    // Configure tool-specific properties.
    "customizations":
    {
        "vscode":
        {
            "extensions":
            [
                "ms-python.python",
                "ms-toolsai.jupyter"
            ]
        }
    },
    "runArgs": ["--gpus", "all"]
    // Uncomment to connect as an existing user other than the container default. More info: https://aka.ms/dev-containers-non-root.
    // "remoteUser": "devcontainer"
}

This file tells Visual Studio Code how to set up the Dev Container. Among other things, you can list Visual Studio Code extensions that should be installed automatically in your Dev Container. In my case, these are ms-python.python and ms-toolsai.jupyter. However, the most important part of devcontainer.json is the runArgs entry.

There, I pass the flag --gpus all to Docker, which makes all available GPUs of the host OS accessible inside the Docker container. Finding this flag and working out how to integrate it into devcontainer.json took some dedicated exploration on my part.
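Because a missing or misspelled runArgs entry fails silently (the container simply starts without a GPU), it can help to check the folder before opening it. The following is a small sketch that verifies the layout described above and looks for the --gpus flag. The function names load_jsonc and check_project are hypothetical, and the comment handling assumes that every // comment sits on its own line, as in the file above.

```python
import json
from pathlib import Path


def load_jsonc(path):
    """Parse a JSON file after dropping whole-line // comments.

    Assumption: comments occupy their own lines. Inline comments and
    /* ... */ blocks are not handled by this sketch.
    """
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    kept = [line for line in lines if not line.lstrip().startswith("//")]
    return json.loads("\n".join(kept))


def check_project(folder):
    """Return a list of problems found in a Dev Container project folder."""
    folder = Path(folder)
    problems = []

    if not (folder / "Dockerfile").is_file():
        problems.append("Dockerfile is missing")

    config_path = folder / ".devcontainer" / "devcontainer.json"
    if not config_path.is_file():
        problems.append(".devcontainer/devcontainer.json is missing")
        return problems

    config = load_jsonc(config_path)
    run_args = config.get("runArgs", [])
    # Docker accepts "--gpus all" (two arguments) as well as "--gpus=all" (one).
    has_gpus = any(arg == "--gpus" or arg.startswith("--gpus=") for arg in run_args)
    if not has_gpus:
        problems.append("runArgs does not pass --gpus to Docker")
    return problems


if __name__ == "__main__":
    for message in check_project(".") or ["Layout looks fine"]:
        print(message)
```

Run from the project folder, this prints either a list of problems or a single confirmation line.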

Navigating the Dev Container Terrain

Once you have set up the folder structure and the required files, open the folder in Visual Studio Code. Within moments, Visual Studio Code will offer to reopen the folder in a Dev Container.

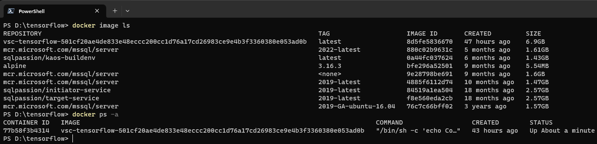

During the first run, the required Docker image is downloaded from the internet, and the Docker container is then built from it according to the Dockerfile. This initial setup involves some waiting, but subsequent openings of the folder in Visual Studio Code reuse the existing Docker container. As shown in the output below, you’ll see a Docker image named vsc-tensorflow… along with a running Docker container based on this image.

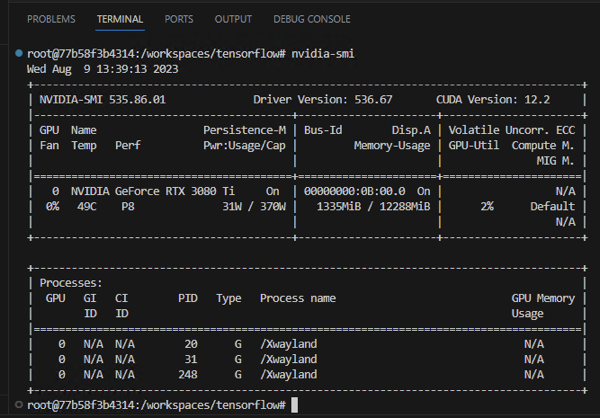

The Docker container also includes the nvidia-smi utility, which lets you check whether the GPU passed through from your host operating system is actually accessible inside the Docker container.

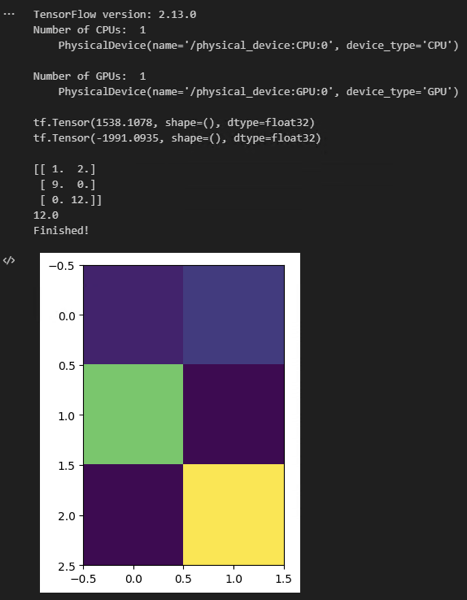
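The same check can be scripted, for example at the top of a notebook. Here is a minimal sketch that calls nvidia-smi from Python and returns one line per visible GPU. The function name query_gpus and the 30-second timeout are my own choices, and an empty result is treated as "no GPU reachable" without distinguishing the possible causes.

```python
import shutil
import subprocess


def query_gpus():
    """Return one "name, total memory" line per GPU, or [] if none is reachable."""
    if shutil.which("nvidia-smi") is None:
        return []  # tool is not on PATH, so this is not a GPU-enabled container
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []  # tool is present, but the driver or GPU is not accessible
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = query_gpus()
    if gpus:
        for gpu in gpus:
            print("GPU:", gpu)
    else:
        print("No GPU visible; check that the container was started with --gpus all")
```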

With these steps completed, you’re ready to create a simple Jupyter notebook that uses Python to interact with your TensorFlow environment. The code below prints CPU/GPU information through TensorFlow, followed by a few simple Python tasks to verify that everything works:

import tensorflow as tf
import numpy
import matplotlib.pyplot

# TensorFlow test
print("TensorFlow version:", tf.__version__)
physical_cpu = tf.config.list_physical_devices('CPU')
physical_gpu = tf.config.list_physical_devices('GPU')

print("Number of CPUs: ", len(physical_cpu))
for device in physical_cpu:
    print(" " + str(device))
print("")

print("Number of GPUs: ", len(physical_gpu))
for device in physical_gpu:
    print(" " + str(device))
print("")

print(tf.reduce_sum(tf.random.normal([1000, 1000])))
print(tf.reduce_sum(tf.random.normal([1000, 1000])))
print("")

# Array handling
array = numpy.zeros([3, 2])
array[0, 0] = 1
array[0, 1] = 2
array[1, 0] = 9
array[2, 1] = 12
print(array)
print(array[2, 1])

# Plot the array
matplotlib.pyplot.imshow(array, interpolation="nearest")
print("Finished!")

Executing this code yields the ensuing output:

Summary

In this blog post, you’ve seen how to set up a TensorFlow Machine Learning environment within a Visual Studio Code Dev Container. As illustrated, creating such a Docker container for Machine Learning workloads is surprisingly straightforward. You’ve also seen how to pass a physical GPU from your host operating system through to the Docker container, which speeds up the training of your Neural Networks.

Thanks for your time,

-Klaus

Note: this blog posting was copy-edited by ChatGPT – what do you think? 🙂