In today’s blog posting I want to show you how you can upgrade a locally deployed Kubernetes Cluster to a newer version. This seems to be a very simple task, but there are a few pitfalls that you have to be aware of.

Overview

First of all, you have to know that you can only upgrade your Kubernetes Cluster by one minor version at a time, from your currently deployed version to the next one. Imagine you have a Kubernetes Cluster with version v1.15 and you want to upgrade to version v1.19. In that case, you have to perform multiple upgrades, one after another:

- v1.15 to v1.16

- v1.16 to v1.17

- v1.17 to v1.18

- v1.18 to v1.19
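If you want to see the required hops for your own cluster, the following small shell sketch prints them. The function name `upgrade_path` is my own invention for this illustration and not part of any Kubernetes tooling, and it assumes that both versions belong to the 1.x series.

```shell
# Print every single-minor-version hop between two Kubernetes 1.x releases.
# upgrade_path is a hypothetical helper, not a kubeadm or kubectl command.
# $1 = current minor version, $2 = target minor version
upgrade_path() {
  minor=$1
  while [ "$minor" -lt "$2" ]; do
    echo "v1.${minor} to v1.$((minor + 1))"
    minor=$((minor + 1))
  done
}

# The example from above: from v1.15 to v1.19
upgrade_path 15 19
```

Every printed line stands for one complete upgrade run of the whole cluster.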

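A Kubernetes Cluster contains the Master Node(s), which run the Control Plane components, and the Worker Node(s), which run your deployed Pods. The following components are running on a Master Node (strictly speaking, the kube-proxy and the kubelet are node-level components and not part of the Control Plane itself):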

- kube-apiserver

- kube-controller-manager

- kube-scheduler

- kube-proxy

- kubelet

On a Worker Node, the only deployed components (besides the Pods) are the kubelet and the kube-proxy. When you now upgrade your Kubernetes Cluster, you can upgrade these components individually, so that you have no downtime for your application. This also means that the above-mentioned components are temporarily deployed with different software versions.

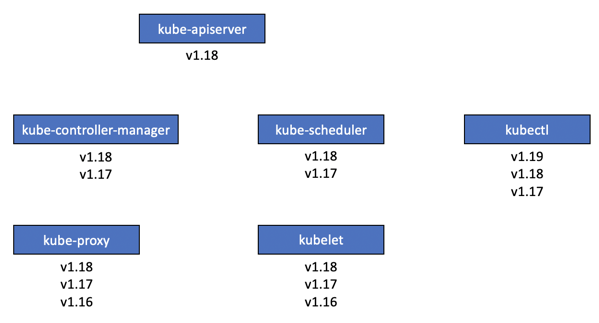

The kube-apiserver is the main component of a Kubernetes Cluster. Therefore, no other component of the Control Plane should have a higher deployed version than the kube-apiserver. The kube-controller-manager and the kube-scheduler components can be at most one minor version lower than the kube-apiserver.

The kube-proxy and the kubelet components can be up to two minor versions lower than the kube-apiserver. The one exception is the kubectl utility, which can be one minor version higher or lower than the kube-apiserver. The picture above tries to illustrate this concept when you perform an upgrade to version v1.18.
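To make these rules a little bit more concrete, here is a minimal shell sketch that checks them. The function `skew_ok` is a hypothetical helper of mine, it only compares minor version numbers, and it hard-codes the limits exactly as I have described them above. Please check the official version skew policy for the release that you are actually running.

```shell
# Check a component's minor version against the kube-apiserver's minor version.
# skew_ok is a hypothetical helper; the limits are the ones described in this post.
# $1 = component name, $2 = kube-apiserver minor, $3 = component minor
skew_ok() {
  component=$1
  api=$2
  comp=$3
  diff=$((api - comp))   # positive: the component is older than the kube-apiserver
  case $component in
    kube-controller-manager|kube-scheduler)
      [ "$diff" -ge 0 ] && [ "$diff" -le 1 ] ;;
    kubelet|kube-proxy)
      [ "$diff" -ge 0 ] && [ "$diff" -le 2 ] ;;
    kubectl)
      [ "$diff" -ge -1 ] && [ "$diff" -le 1 ] ;;
    *)
      return 2 ;;        # unknown component name
  esac
}

skew_ok kubelet 18 17 && echo "kubelet v1.17 works with kube-apiserver v1.18"
skew_ok kubelet 17 19 || echo "kubelet v1.19 is too new for kube-apiserver v1.17"
```

The function returns 0 when the combination is allowed, 1 when it is not, and 2 for a component name that it doesn't know.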

In the following steps I will now show you how to upgrade a Kubernetes Cluster from v1.17 to v1.18.

Upgrading the Master Node

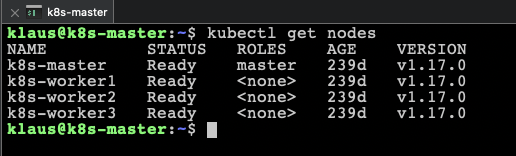

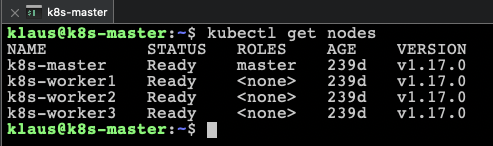

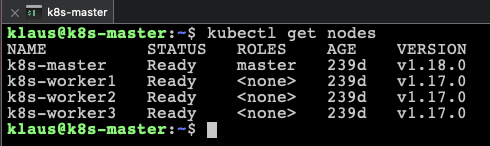

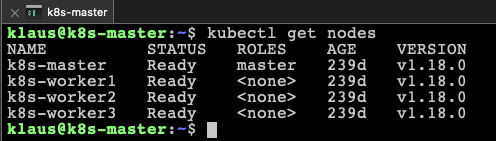

First of all, I’m starting with the Master Node. Because I don’t have an HA deployment, my Kubernetes Cluster only contains a single Master Node, as you can see in the following picture.

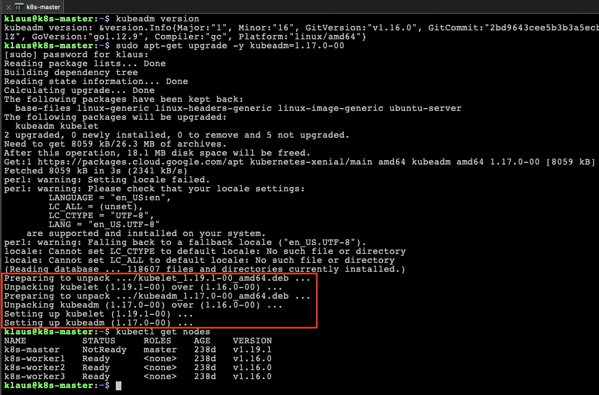

Caution: It is very important that you hold and unhold your deployed Kubernetes components with the apt-mark command. Otherwise you will run into serious trouble when you upgrade the kubeadm utility in the first step.

If you don’t hold the current version of the kubelet, the upgrade of the kubeadm utility also installs the *latest* version of the kubelet, which is v1.19.1. And as you already know, this doesn’t work because of the version incompatibility that I have mentioned previously. I ran into that problem yesterday, when I initially upgraded my Kubernetes Cluster from v1.16 to v1.17.

As you can see in the picture, the kubeadm utility was upgraded to v1.17, and the kubelet was also upgraded to v1.19.1! After the upgrade the Master Node was in the state NotReady, because the kubelet had a deployed version which was just too high! Thanks to Anthony E. Nocentino, who helped me to understand and troubleshoot that specific problem! 🙂
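Before you touch anything, it can be worth checking which packages are currently on hold. The following sketch reads the output of apt-mark showhold and prints every Kubernetes package that is *not* held. The function name `unheld_packages` is hypothetical, and the package list (kubeadm, kubelet, kubectl) is my assumption for a typical kubeadm-based installation.

```shell
# Print the Kubernetes packages that are NOT on hold.
# unheld_packages is a hypothetical helper; it expects the output of
# "apt-mark showhold" (one package name per line) on standard input.
unheld_packages() {
  held=$(cat)
  for pkg in kubeadm kubelet kubectl; do   # assumed package list
    printf '%s\n' "$held" | grep -qxF "$pkg" || echo "$pkg"
  done
}

# On a node you would run:
#   apt-mark showhold | unheld_packages
# Every package that gets printed should be put on hold first:
#   sudo apt-mark hold <package>
```

If the function prints nothing, all three packages are on hold.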

Let’s start now by upgrading the kubeadm utility in the first step.

    sudo apt-mark unhold kubeadm
    sudo apt-get upgrade -y kubeadm=1.18.0-00
    sudo apt-mark hold kubeadm

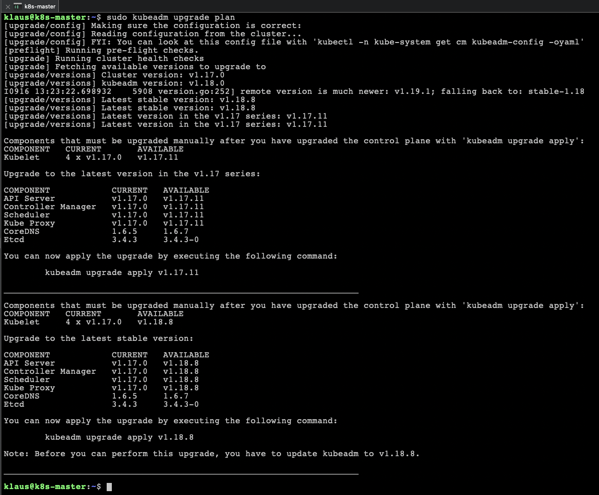

In the next step we can use the kubeadm upgrade plan command to check which upgrade we can perform:

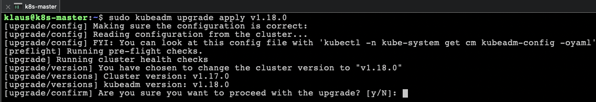

And now we perform the upgrade of the Control Plane components by running the command kubeadm upgrade apply v1.18.0:

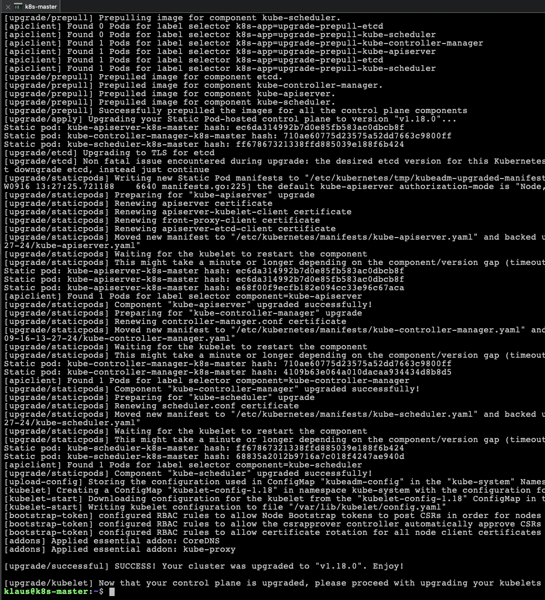

The Control Plane components of our Kubernetes Cluster are now successfully upgraded to version v1.18.0:

But when you check the version of the nodes in the cluster, you still see the old version of v1.17.0:

This makes sense, because the output of this command shows the version of the kubelet components in the cluster, and they have not been upgraded yet. So, let’s now upgrade the kubelet component on the Master Node.

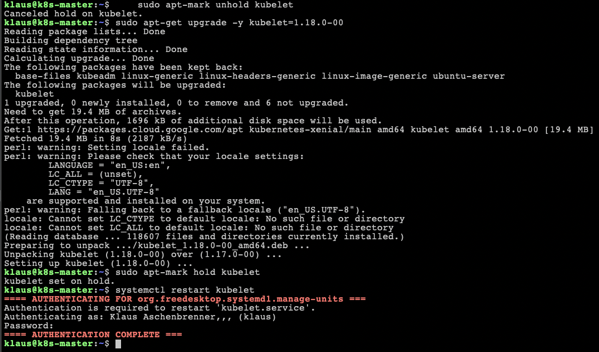

    sudo apt-mark unhold kubelet
    sudo apt-get upgrade -y kubelet=1.18.0-00
    sudo apt-mark hold kubelet
    sudo systemctl restart kubelet

When you now check the version numbers of the Kubernetes Nodes again, you can see that the Master Node is now finally upgraded to version v1.18. The remaining Worker Nodes are still on version v1.17.
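If your cluster has more than a handful of nodes, a small filter can help you to spot the nodes that still have to be upgraded. This is only a sketch: `nodes_behind` is a hypothetical helper name, and it assumes the default column layout of kubectl get nodes, where VERSION is the fifth column.

```shell
# List all nodes whose kubelet version differs from the target version.
# nodes_behind is a hypothetical helper; it expects the output of
# "kubectl get nodes --no-headers" on standard input.
# $1 = target version, for example v1.18.0
nodes_behind() {
  awk -v want="$1" '$5 != want { print $1 " is still on " $5 }'
}

# On a machine with cluster access you would run:
#   kubectl get nodes --no-headers | nodes_behind v1.18.0
```

As soon as the command prints nothing anymore, every kubelet in the cluster reports the target version.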

Let’s continue now with the upgrade of the Worker Nodes.

Upgrading the Worker Nodes

By default, all of your deployed Pods in a Kubernetes Cluster are scheduled and executed on Worker Nodes, because the Master Node has a NoSchedule taint applied during the initial installation.

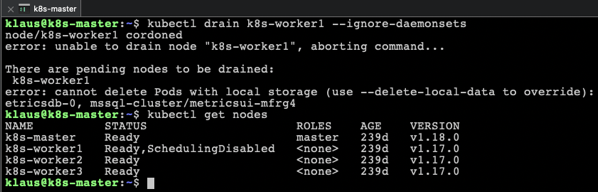

When you now upgrade a Worker Node, you also want to make sure that no Pods are currently running on that Worker Node, and that the kube-scheduler doesn’t place new Pods on it. You can achieve both requirements with the kubectl drain command. It evicts the Pods from that Worker Node (they are re-scheduled on the remaining Worker Nodes), and it also applies the NoSchedule taint on that Worker Node.
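The drain step itself can be sketched as follows. The wrapper name `drain_worker` is hypothetical, and k8s-worker1 is simply the name of the first Worker Node in my cluster. I'm passing the --ignore-daemonsets flag here, because Pods that are managed by a DaemonSet can't be evicted, and kubectl drain refuses to continue without that flag when such Pods are present.

```shell
# Evict the Pods from a node and mark the node as unschedulable.
# drain_worker is a hypothetical wrapper around the real kubectl drain command.
# $1 = name of the node
drain_worker() {
  kubectl drain "$1" --ignore-daemonsets
}

# Usage for the first Worker Node:
#   drain_worker k8s-worker1
```

Depending on your workloads, kubectl drain may ask for additional flags, for example when Pods use local emptyDir volumes. I have left them out here on purpose.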

Then we upgrade the kubeadm utility and the kubelet component directly on the Worker Node:

    sudo apt-mark unhold kubeadm kubelet
    sudo apt-get upgrade -y kubeadm=1.18.0-00 kubelet=1.18.0-00
    sudo kubeadm upgrade node --kubelet-version v1.18.0
    sudo apt-mark hold kubelet kubeadm
    sudo systemctl restart kubelet

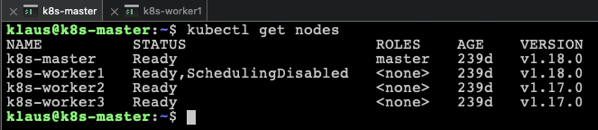

When you now check the version of the Kubernetes Nodes again, you can see that the Worker Node is now upgraded to v1.18.

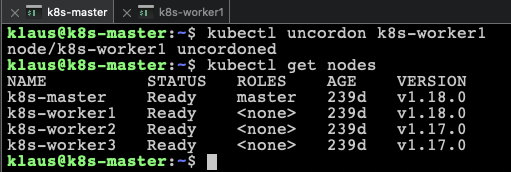

The only thing left to do is to remove the NoSchedule taint by running the kubectl uncordon command:

kubectl uncordon k8s-worker1

The first Worker Node is now fully upgraded to version v1.18. Now you have to apply the same upgrade steps to the remaining Worker Nodes in your Kubernetes Cluster. And in the end, you have a Kubernetes Cluster that is fully upgraded to version v1.18.

When you now want to upgrade to the latest Kubernetes version v1.19, you have to do everything again. As I have said in the beginning of this blog posting, you can only upgrade your Kubernetes Cluster from one minor version to the next one…

Summary

Upgrading a Kubernetes Cluster is not that hard. The key thing to be aware of is that you have to put the various Kubernetes components on hold with the apt-mark command, because otherwise you will run into serious version incompatibility trouble, as you have seen.

I hope that you have enjoyed this blog posting, and now I’m grabbing a coffee until my Kubernetes Cluster is finally upgraded to v1.19 🙂

Thanks for your time,

-Klaus