Over the last few weeks I have already blogged a lot about SQL Server 2019 Big Data Clusters. In today’s blog posting I want to give you an overview how you can actually work with Big Data Clusters, and what makes them so interesting to you.

Overview

The idea of a SQL Server 2019 Big Data Cluster is quite simple: Microsoft wants to combine your valuable Relational Data with your high-volume Big Data. A SQL Server 2019 Big Data Cluster stores your relational data in a traditional SQL Server Instance – called the Master Instance – and your high-volume Big Data in an Apache Hadoop Cluster.

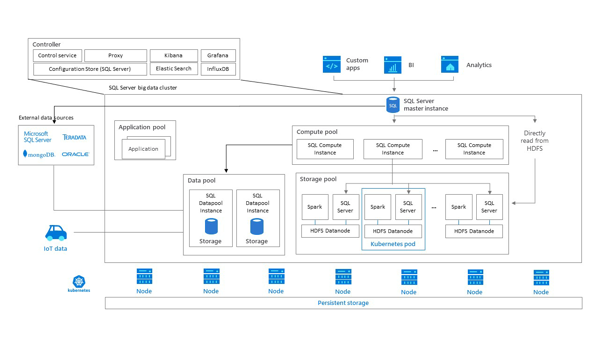

Yes, you read correctly: when you install a SQL Server 2019 Big Data Cluster, you will also get a fully configured Apache Hadoop Cluster with HDFS and Spark integration! The following picture from Books Online shows the architecture of a deployed Big Data Cluster:

Besides the whole Logging/Management infrastructure you will see here the SQL Server Master Instance, and in addition so-called Pools:

- Storage Pool

- Data Pool

- Compute Pool

- Application Pool

Let’s have a more detailed look on each of these pools.

Storage Pool

The various Kubernetes Pods of the Storage Pool represents the individual HDFS Data Nodes, which contains SQL Server on Linux, Apache Spark, and Apache HDFS. You might be asking what’ the purpose of the SQL Server Container? Normally, you don’t connect to these SQL Server Instances directly. They are used internally of the Big Data Cluster to optimize the access of the data stored in the HDFS Data Nodes.

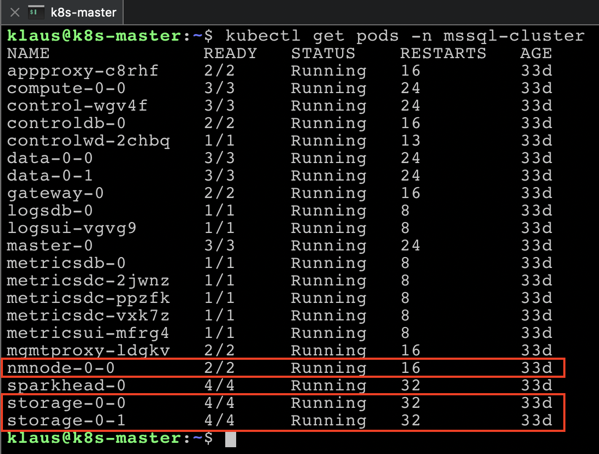

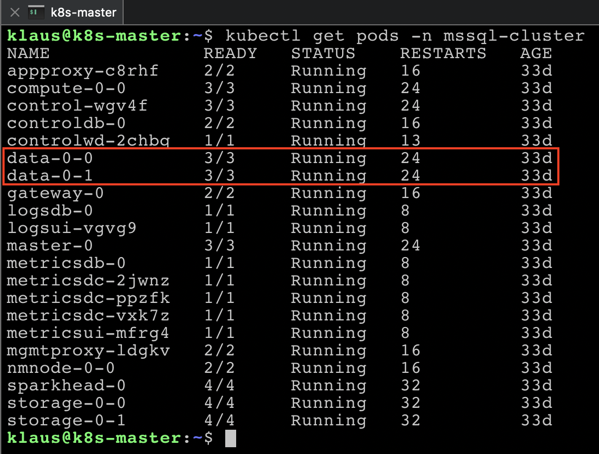

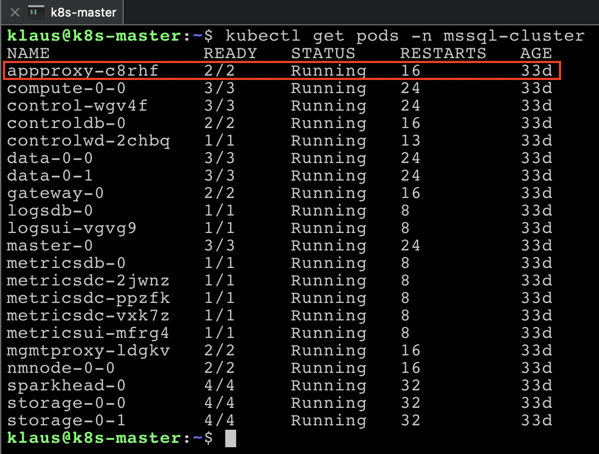

If you have a non-HA deployment of a Big Data Cluster, you will get 2 Kubernetes Pods for the Storage Pool, and one additional Pod that represents the HDFS Name Node:

Data Pool

The Data Pool of a SQL Server 2019 Big Data Cluster is also an interesting concept. The Data Pool is nothing more than a Caching Layer. The idea is that you ingest data from SQL Server queries or Apache Spark queries and that you cache that result in the Data Pool for further usage. You can use for example Power BI to visualize the ingested data for your end users.

You have again multiple Kubernetes Pods for the Data Pool to provide a better performance across a large data set. The data that is stored in the Data Pool, is internally distributed across the various Pods in a Round-Robin fashion and is stored in tables with a Clustered ColumnStore Index.

Compute Pool

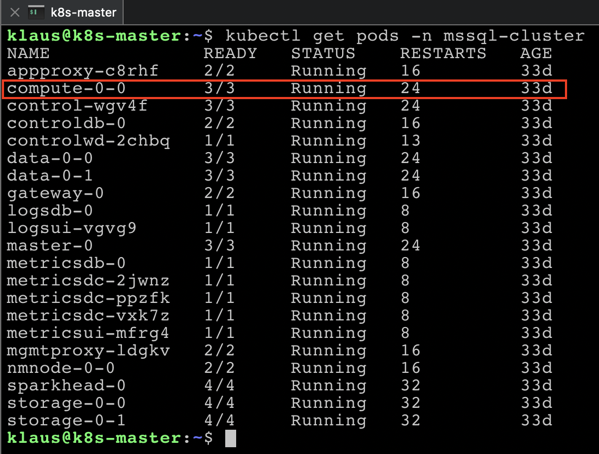

The Compute Pool is another beast in a Big Data Cluster. The Compute Pool provides you a fully configured Polybase Scale-Out Group! Yes, you have read correct: it’s a fully configured Polybase Scale-Out Group. As you know, if you have already worked with Polybase it is very hard to set up a Scale-Out Group. And with a Big Data Cluster you just get it out of the box – without any hurdles! In a non-HA deployment of a Big Data Cluster, you will get one Kubernetes Pod for the Compute Pool.

Application Pool

And finally, there is the Application Pool. The purpose of the Application Pool is to deploy applications into the Big Data Cluster to process the data that is stored in the Big Data Cluster. Currently, the supported Applications are R, Python, SSIS, and MLeap.

By now you should have a basic understanding of the architecture of a SQL Server Big Data Cluster, so let’s have now a look on how to work with a Big Data Cluster.

Working with a SQL Server 2019 Big Data Cluster

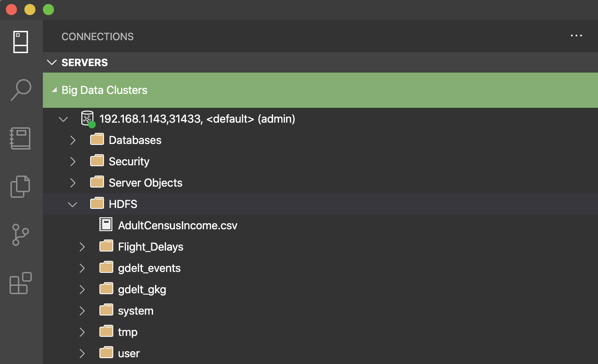

When you want to interact and work with a SQL Server 2019 Big Data Cluster, your preferred tools is the Azure Data Studio. With the Azure Data Studio, you are able to connect to the SQL Server Master Instance of your Big Data Cluster, and you are also able to manage directly your HDFS Cluster with the built-in integration:

You can upload through Azure Data Studio directly files into the HDFS Cluster, or mount an existing HDFS Cluster through HDFS Tiering into your Big Data Cluster. In my case I have uploaded the January 2020 data from the GDELT Project into the HDFS Cluster.

The GDELT Project is quite interesting, because they provide you Terabytes (!!!!) of raw data files in CSV files that you can use to process and analyze with your SQL Server 2019 Big Data Cluster. In a future blog posting I will show you how to analyze through Apache Spark that dataset and how to store the result of this analysis in the Data Pool of the SQL Server 2019 Big Data Cluster.

Summary

In this blog posting I gave you a high-level overview about the various components that are part of a SQL Server 2019 Big Data Cluster. As you have learned, there are different types of Pools in a Big Data Cluster, where each Pool has a dedicated purpose.

Thanks for your time,

-Klaus