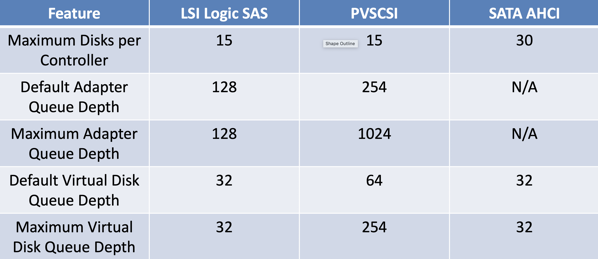

In today’s blog posting I want to talk about the differences between the LSI Logic SAS and the VMware Paravirtual (PVSCSI) controllers that you can use in your VMware-based Virtual Machines to attach VMDK files.

LSI Logic SAS Controller

If you add VMDK files to your Virtual Machine, VMware vSphere uses the LSI Logic SAS controller by default. The reason is that most Operating Systems support it out of the box without installing custom drivers. Therefore, you very often see the LSI Logic SAS controller used for the boot drive of the Operating System.

Unfortunately, the LSI Logic SAS controller doesn’t deliver great throughput in terms of IOPS (Input/Output Operations per Second), and it also causes a higher CPU utilization compared to the PVSCSI controller.

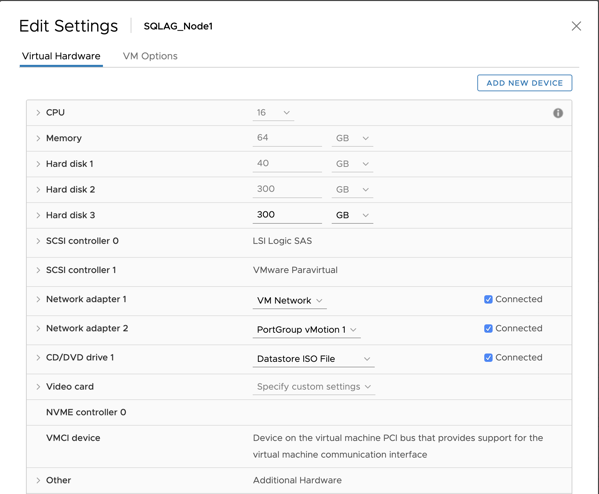

To prove that point, I have benchmarked the LSI Logic SAS and the PVSCSI controller in my own VMware vSAN based Home Lab. To generate some storage related workload against the I/O subsystem, I have used the Diskspd Utility that you can download for free from Microsoft. My test VM (which doesn’t span NUMA nodes) was configured as follows:

- 8 CPU Cores

- 64 GB RAM

- C: Drive
  - LSI Logic SAS Driver

- E: Drive
  - VMware Paravirtual Driver

- F: Drive
  - NVMe Driver

I have used the following workload pattern with diskspd:

- Block Size: 8K

- Duration: 60 seconds

- Outstanding Requests: 8

- Worker Threads: 8

- Reads: 70%

- Writes: 30%

This translates into the following command line:

diskspd.exe -b8K -d60 -o8 -h -L -t8 -W -w30 -c2G c:\test.dat
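The mapping from the workload pattern to the command line can be sketched in a few lines of Python. This is only an illustrative helper (the function name `build_diskspd_command` and its parameters are made up for this example). It uses exactly the switches shown above and does not run Diskspd itself:

```python
def build_diskspd_command(target, block_size="8K", duration_s=60,
                          outstanding=8, threads=8, write_pct=30,
                          file_size="2G"):
    """Assemble the Diskspd command line used in this post (hypothetical helper)."""
    args = [
        "diskspd.exe",
        f"-b{block_size}",   # block size
        f"-d{duration_s}",   # test duration in seconds
        f"-o{outstanding}",  # outstanding requests per thread
        "-h",                # disable software and hardware write caching
        "-L",                # collect latency statistics
        f"-t{threads}",      # worker threads
        "-W",                # kept verbatim from the original command line
        f"-w{write_pct}",    # write percentage (the rest are reads)
        f"-c{file_size}",    # create a test file of this size
        target,              # path of the test file
    ]
    return " ".join(args)


print(build_diskspd_command(r"c:\test.dat"))
```

Changing only the `target` argument to `e:\test.dat` or `f:\test.dat` gives the command lines used for the other two drives later in this post.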

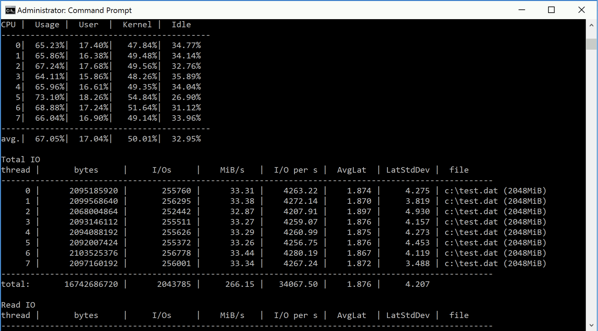

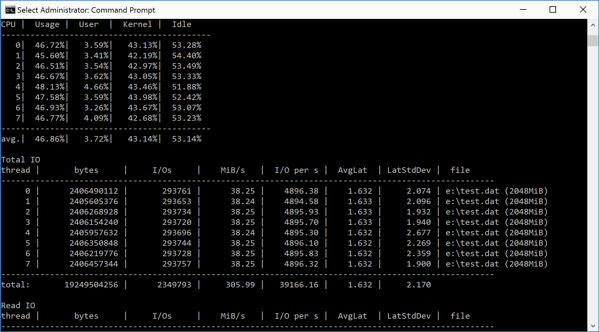

The following picture shows you the output from diskspd when executed against the C: drive, which uses the LSI Logic SAS controller and where the underlying VMDK file is stored in a vSAN Datastore with FTT (Failures to Tolerate) set to 1:

As you can see from the picture above, I was able to generate a little bit more than 34000 IOPS with an average latency of around 1.8 ms. But the CPU utilization was at around 67%, which is quite high! Let’s try the same with the PVSCSI controller.

VMware Paravirtual (PVSCSI) Controller

In contrast to the LSI Logic SAS controller, the PVSCSI controller is virtualization aware and provides a higher throughput with less CPU overhead. It is therefore the preferred controller when you need the best possible storage performance. In my case I have configured the E: drive of my test VM to use the PVSCSI controller. I ran the same Diskspd command line as before:

diskspd.exe -b8K -d60 -o8 -h -L -t8 -W -w30 -c2G e:\test.dat

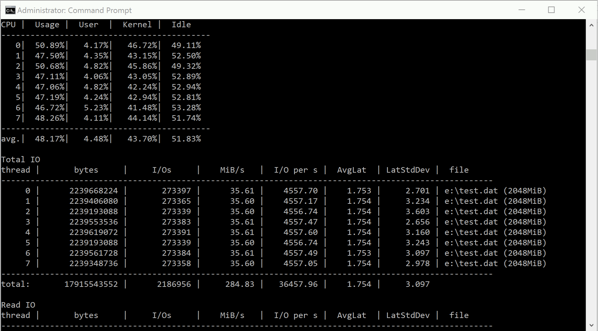

The following picture shows you the test results:

You can immediately see that I was able to generate more IOPS, namely 36457, and the average latency also went down to around 1.7 ms, which is a minor improvement. But look at the CPU utilization: it is down to only 48%! That’s a huge difference of almost 20 percentage points compared to the LSI Logic SAS controller!

But we are not finished yet. The PVSCSI controller can also be tuned within the guest operating system for better performance. The PVSCSI controller has a Default Virtual Disk Queue Length of 64, and a Default Adapter Queue Length of 254. These values can be increased up to 254 and 1024, respectively.

This whitepaper from VMware shows you how you can change these values. Let’s therefore change these settings to their maximum values by running the following command line within Windows:

REG ADD HKLM\SYSTEM\CurrentControlSet\services\pvscsi\Parameters\Device /v DriverParameter /t REG_SZ /d "RequestRingPages=32,MaxQueueDepth=254"
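A small Python sketch shows how the value of `DriverParameter` is composed, and guards against exceeding the per-disk maximum of 254 mentioned above. The helper name `pvscsi_reg_command` is hypothetical. It only builds the command string and does not touch the registry:

```python
MAX_DEVICE_QUEUE_DEPTH = 254  # per-disk maximum stated in this post


def pvscsi_reg_command(request_ring_pages=32, max_queue_depth=254):
    """Build the REG ADD command line for the PVSCSI driver (hypothetical helper)."""
    if not 1 <= max_queue_depth <= MAX_DEVICE_QUEUE_DEPTH:
        raise ValueError("MaxQueueDepth must be between 1 and 254")
    # Both settings go into one comma-separated string value.
    value = f"RequestRingPages={request_ring_pages},MaxQueueDepth={max_queue_depth}"
    key = r"HKLM\SYSTEM\CurrentControlSet\services\pvscsi\Parameters\Device"
    return f'REG ADD {key} /v DriverParameter /t REG_SZ /d "{value}"'


print(pvscsi_reg_command())
```

The value has to be enclosed in straight double quotes. Typographic quotes picked up when copying a command from a web page are not treated as quote characters by the Windows command prompt and would end up as part of the stored string.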

After the necessary Windows restart, I ran Diskspd again, and I got the following results:

As you can see, we are now getting more than 39000 IOPS with an average latency of 1.6 ms! The CPU utilization is almost the same as before, it even decreased a little bit. In comparison with the default LSI Logic SAS controller we have gained roughly 5000 IOPS and we have decreased our CPU utilization by almost 20 percentage points. Not that bad!
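As a rough plausibility check of these numbers, Little's Law can be applied: with 8 worker threads and 8 outstanding requests each, 64 I/Os are in flight, so the IOPS should be close to 64 divided by the average latency. The following Python sketch uses the rounded latencies quoted in the text (the exact values are only visible in the screenshots, so treat the results as estimates):

```python
THREADS = 8                 # -t8
OUTSTANDING_PER_THREAD = 8  # -o8
IN_FLIGHT = THREADS * OUTSTANDING_PER_THREAD  # 64 I/Os in flight

# Rounded average latencies quoted in the text, in milliseconds
runs = {
    "LSI Logic SAS": 1.8,
    "PVSCSI (default queues)": 1.7,
    "PVSCSI (tuned queues)": 1.6,
}


def estimated_iops(latency_ms, in_flight=IN_FLIGHT):
    """Little's Law: throughput = concurrency / latency."""
    return in_flight / (latency_ms / 1000.0)


for name, latency_ms in runs.items():
    print(f"{name}: ~{estimated_iops(latency_ms):,.0f} IOPS")
```

The estimates come out at roughly 35500, 37600, and 40000 IOPS, which is in line with the measured values of a little more than 34000, 36457, and more than 39000 IOPS.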

NVMe Controller

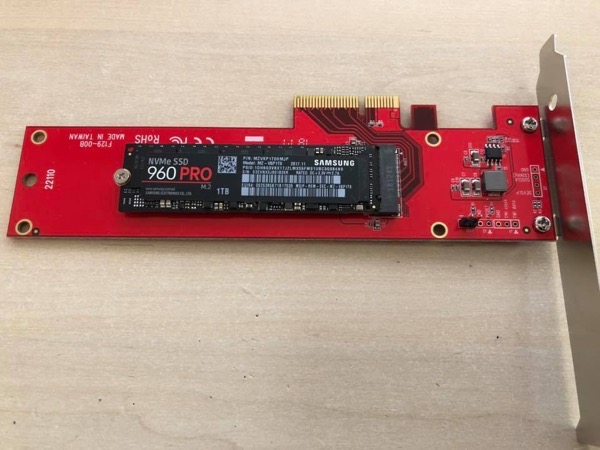

Besides my vSAN-based Datastore, each of my HP DL 380 G8 Servers also has a dedicated NVMe-based Datastore, for which I use a single Samsung 960 PRO M.2 1 TB SSD.

While writing this blog posting, I thought it would also be a great idea to benchmark this amazingly fast disk in combination with the NVMe Controller that was introduced with ESXi 6.5. Therefore, I ran the following Diskspd command line against my F: drive, which is a VMDK file stored locally on the NVMe Datastore:

diskspd.exe -b8K -d60 -o8 -h -L -t8 -W -w30 -c2G f:\test.dat

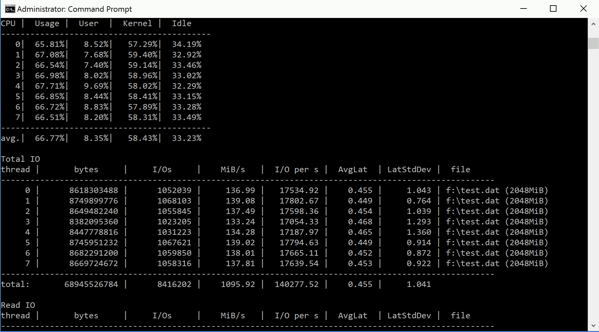

And here are the results:

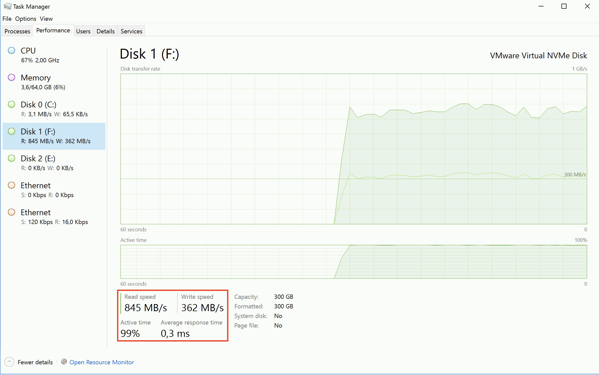

As you can see, I have achieved more than 140000 IOPS with an average latency of only 0.4 ms!!! But on the other hand, the CPU utilization was higher than with the PVSCSI controller: it went up to around 67%, the same level as with the LSI Logic SAS controller. Here are also some metrics that Windows Task Manager reported during the test run:

The combined read and write bandwidth was more than 1.2 GB per second! Who needs spinning drives anymore? 🙂
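The bandwidth figure follows directly from the IOPS and the 8K block size. A minimal Python sketch of that arithmetic, assuming decimal gigabytes (Task Manager may use binary units, in which case the numbers shift slightly):

```python
BLOCK_SIZE_BYTES = 8 * 1024  # -b8K


def bandwidth_gb_per_s(iops, block_size=BLOCK_SIZE_BYTES):
    """Convert IOPS at a fixed block size into decimal gigabytes per second."""
    return iops * block_size / 1e9


def iops_for_bandwidth(gb_per_s, block_size=BLOCK_SIZE_BYTES):
    """Inverse: how many IOPS are needed to reach a given bandwidth."""
    return gb_per_s * 1e9 / block_size


print(f"{bandwidth_gb_per_s(140_000):.2f} GB/s at 140000 IOPS")
print(f"{iops_for_bandwidth(1.2):,.0f} IOPS needed for 1.2 GB/s")
```

At 8K per request, 1.2 GB per second corresponds to a bit more than 146000 IOPS, which fits the "more than 140000 IOPS" reported by Diskspd.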

Summary

If you want to get the best possible storage performance out of your Virtual Machine, it is a prerequisite to use the PVSCSI controller for attaching the various VMDK files to your Virtual Machine. As you have seen in this blog posting, the LSI Logic SAS controller gives you fewer IOPS with a higher CPU utilization.

Unfortunately, I don’t see many VMs in the field that are using the PVSCSI controller. And if the PVSCSI controller is used, it is used with the default queue lengths, which can also be a limiting factor, especially for SQL Server related workloads.

If you want to learn more about how to Design, Deploy, and Optimize SQL Server Workloads on VMware, I highly recommend having a look at my online course, which shows you in 18 hours how to get the best possible performance out of SQL Server running on top of VMware!

Thanks for your time,

-Klaus

2 thoughts on “Benchmarking the VMware LSI Logic SAS Controller against the PVSCSI Controller”

“Unfortunately, I don’t see that many VMs in the field, which are using the PVSCSI controller – unfortunately. And if the PVSCSI controller is used, it is used with the default queue lengths, which can be also a limiting factor – especially for SQL Server related workloads.”

Come and see the VMs I have under control. They are using PVSCSI with maxed queue length. 😉

Thanks for the write-up. From the linked VMware white paper and my own tests, it seems that adjusting the OS queue depths up without a corresponding change in the HBA queue depths significantly reduces performance. That might be worth noting for those who don’t immediately click through to TFA.