In my last two blog postings I talked about how to deploy a SQL Server Availability Group into a Kubernetes Cluster. Today I want to stay with Kubernetes and show you how you can deploy a stand-alone SQL Server Instance into a Kubernetes Cluster.

Why?

Before I show you the actual steps to deploy a single SQL Server Instance into a Kubernetes Cluster, I want to talk about the reasons first: why should you deploy a single SQL Server Instance into a Kubernetes Cluster?

One of the greatest things about Kubernetes is the possibility to perform upgrades and rollouts of software components in a coordinated, reliable way. If you have SQL Server running in a Kubernetes Cluster, it is a very simple task to update SQL Server to a newer version. If something goes wrong, Kubernetes can roll back to the older (working) version. Anthony Nocentino blogged about this possibility a few days ago.

Another big bonus point for running SQL Server in Kubernetes is the possibility to restart a pod (which hosts the SQL Server Container) in an automatic way when the pod (or the Kubernetes node) crashes. I will talk more about this possibility (and its side-effects) later in today’s blog posting.

Creating the Storage Objects

Let’s start now by deploying a stand-alone SQL Server Instance into a Kubernetes Cluster. Deploying a stateless Container into a Kubernetes Cluster is quite simple, but SQL Server, as a database, is of course stateful. Therefore, we need a way to persist the created data across pod restarts. Kubernetes provides the following API objects that we can use to implement persistent storage:

- Storage Classes

- Persistent Volume Claims

- Persistent Volumes

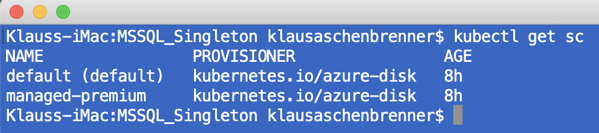

Let’s have a more detailed look at these API objects. First of all, you need a Storage Class. With a Storage Class, a Kubernetes administrator describes the different Storage Tiers that the Kubernetes Cluster offers. When you deploy a Kubernetes Cluster in Azure Kubernetes Service, you get 2 predefined Storage Classes out of the box:

- default (uses traditional HDD Storage)

- managed-premium (uses fast SSD Storage)

You can retrieve the available Storage Classes with the following command:

kubectl get sc

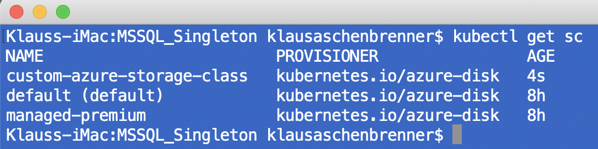

In our case I want to create a new Storage Class with a few specific additional parameters. The following listing shows the YAML file that describes a custom Storage Class for Kubernetes.

    kind: StorageClass
    apiVersion: storage.k8s.io/v1beta1
    metadata:
      name: custom-azure-storage-class
    provisioner: kubernetes.io/azure-disk
    volumeBindingMode: WaitForFirstConsumer
    reclaimPolicy: Retain
    parameters:
      kind: Managed

You can deploy this custom Storage Class with the following command into your Kubernetes Cluster:

kubectl apply -f 1_sc.yaml

When you check the available Storage Classes again afterward (with kubectl get sc), you can see the newly created one.

After we have created our custom Storage Class, we need to create a so-called Persistent Volume Claim. With a Persistent Volume Claim a Pod can request some persistent storage from a given Storage Class. The following YAML file shows the definition of our Persistent Volume Claim, where we request 5 GB of storage from the previously created Storage Class custom-azure-storage-class.

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: pvc-mssql
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: custom-azure-storage-class
      resources:
        requests:
          storage: 5Gi

You can deploy this Persistent Volume Claim with the following command into your Kubernetes Cluster.

kubectl apply -f 2_pvc.yaml

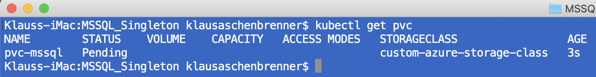

After the deployment of the Persistent Volume Claim, you can use the following command to show all available Persistent Volume Claims:

kubectl get pvc

The requested persistent volume is not created yet, because the Storage Class sets volumeBindingMode to WaitForFirstConsumer. The persistent volume is therefore only created when the first Pod that uses the claim is scheduled. That’s the reason why you see the status of the Persistent Volume Claim as Pending in the previous screenshot.
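If you prefer to check this from a script instead of reading the table output, the following is a minimal sketch of two helper functions. The function names pvc_phase and sc_binding_mode are made up for this example; they only wrap kubectl get with a jsonpath expression against the objects created above.

```shell
#!/usr/bin/env bash

# Print the phase of a Persistent Volume Claim (Pending, Bound, or Lost).
# pvc_phase is a hypothetical helper name, not part of kubectl.
pvc_phase() {
  kubectl get pvc "$1" -o jsonpath='{.status.phase}'
}

# Print the volumeBindingMode of a Storage Class
# (Immediate or WaitForFirstConsumer).
sc_binding_mode() {
  kubectl get sc "$1" -o jsonpath='{.volumeBindingMode}'
}
```

Calling pvc_phase pvc-mssql at this point should report Pending, and it should switch to Bound once a pod that uses the claim has been scheduled.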

Deploying a SQL Server Instance into the Kubernetes Cluster

By now we have created the necessary storage infrastructure in our Kubernetes Cluster. The next step is to deploy SQL Server itself into our Kubernetes Cluster. The following YAML file shows the definition of the ReplicaSet that we create to deploy SQL Server.

    apiVersion: extensions/v1beta1
    kind: ReplicaSet
    metadata:
      name: mssql
      labels:
        app: mssql
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mssql
      template:
        metadata:
          labels:
            app: mssql
        spec:
          containers:
          - name: mssql
            image: mcr.microsoft.com/mssql/server:2019-CTP2.3-ubuntu
            resources:
              requests:
                cpu: 1
                memory: 4Gi
            env:
            - name: ACCEPT_EULA
              value: "Y"
            - name: SA_PASSWORD
              value: "Passw0rd1"
            ports:
            - containerPort: 1433
            volumeMounts:
            - name: mssql
              mountPath: /var/opt/mssql
          volumes:
          - name: mssql
            persistentVolumeClaim:
              claimName: pvc-mssql

As you can see from the YAML file, we are requesting 1 replica (one running instance of SQL Server) of the SQL Server 2019 CTP 2.3 Ubuntu Docker Image. 2 very important things here are the entries volumeMounts and volumes. With these 2 entries we mount the 5 GB persistent volume into the folder /var/opt/mssql of our SQL Server Docker Container. And as you can see, we reference the persistent volume through the previously created Persistent Volume Claim.

Everything else is straightforward in that YAML file: we specify the necessary environment variables and the Container Port 1433 that is used for the communication with the SQL Server service itself. You can deploy that YAML file with the following command into your Kubernetes Cluster:

kubectl apply -f 3_sqlserver.yaml

As soon as you have executed this command, a lot of different things are happening in your Kubernetes Cluster:

- A new pod gets instantiated by Kubernetes

- The pod requests the Persistent Volume

- The pod pulls the Docker Image from the Microsoft Container Registry

- The pod starts the Docker Image
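Because pulling the image and attaching the disk can take a while, it can be handy to wait for the pod from a script. The following is a minimal sketch; wait_for_mssql and show_events are hypothetical helper names, and the 300 second default timeout is an arbitrary assumption. The app=mssql label is the one from the ReplicaSet definition above.

```shell
#!/usr/bin/env bash

# Block until every pod labelled app=mssql reports the Ready condition,
# or give up after the timeout in seconds (assumed default: 300).
wait_for_mssql() {
  kubectl wait --for=condition=Ready pod -l app=mssql --timeout="${1:-300}s"
}

# List the events of the current namespace in chronological order, which
# shows steps such as scheduling, image pull, and container start.
show_events() {
  kubectl get events --sort-by=.metadata.creationTimestamp
}
```

For example, wait_for_mssql 600 waits up to ten minutes, and show_events afterwards lists what happened in the meantime.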

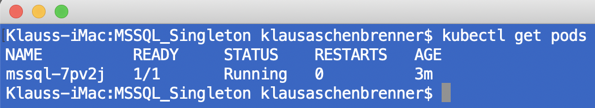

You can see the deployed pod with the following command:

kubectl get pods

If you want to see all the previously mentioned steps that happened during the creation of the pod, you can run kubectl describe pod with the name of your pod.

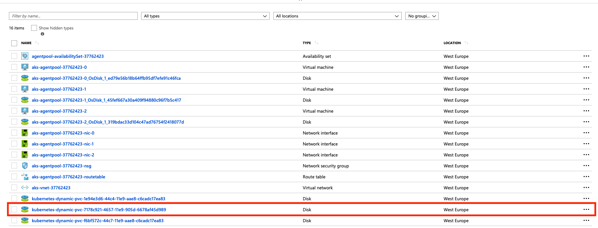

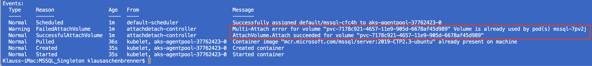

As you can see from the screenshot, a persistent volume was attached to the created pod. You can also see that volume with the following command:

kubectl get pv

If you look into the Resource Group of your Kubernetes Cluster in the Azure Portal, you can also see the underlying disk that was created for this Persistent Volume.

Accessing the SQL Server Instance

By now we have a running and working SQL Server Instance deployed to a Kubernetes Cluster. But how can you access this SQL Server? Currently you don’t have any public IP address on which SQL Server is listening for incoming requests. Therefore we have to deploy a so-called Service into our Kubernetes Cluster. The following listing shows a YAML file that describes a Service API object with which we are able to access SQL Server from the outside.

    apiVersion: v1
    kind: Service
    metadata:
      name: mssql
    spec:
      ports:
      - port: 1433
        protocol: TCP
        targetPort: 1433
      selector:
        app: mssql
      type: LoadBalancer

Let’s deploy this Service with the following command:

kubectl apply -f 4_sqlserver-service.yaml

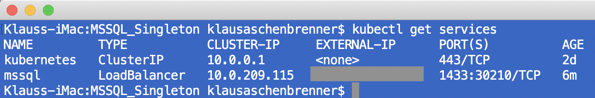

It now takes a few minutes until Azure Kubernetes Service has allocated a public IP address to that Kubernetes Service, through which we can finally access SQL Server. You can retrieve that IP address with the following command:

kubectl get services

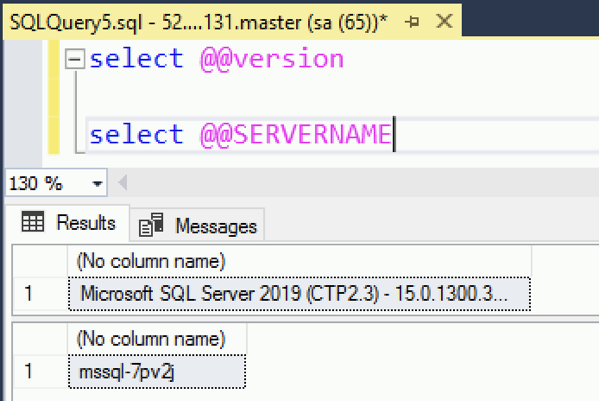

When you take that IP address and use it to connect to SQL Server, you can see that the SQL Server Docker Container is running in our pod, in the Kubernetes Cluster!
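The lookup of the IP address and a first test query can also be scripted. The following is only a sketch: service_ip and mssql_version are hypothetical helper names, and it assumes that the sqlcmd command line client is installed on your machine. The Service name mssql, port 1433, and the sa login come from the YAML files above.

```shell
#!/usr/bin/env bash

# Print the public IP that the load balancer assigned to a Service.
# Prints nothing as long as the address is still pending.
service_ip() {
  kubectl get service "$1" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Run a version query against the instance behind the mssql Service.
# $1 is the sa password; assumes sqlcmd is installed locally.
mssql_version() {
  sqlcmd -S "$(service_ip mssql),1433" -U sa -P "$1" -Q "SELECT @@VERSION"
}
```

Calling mssql_version with the password from the ReplicaSet definition should return the version string of the SQL Server Container.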

Single Pod High Availability!?

At the beginning of this blog posting I talked about the various advantages of running a single instance of SQL Server in a Kubernetes Cluster. One of the advantages is that Kubernetes makes sure through the ReplicaSet that the requested number of pods is always online, healthy, and available. In our case we have requested 1 pod in the ReplicaSet, therefore 1 pod should always be available.

Kubernetes uses here internally so-called Reconciliation Loops, which are comparing the Actual State with the Desired State of the Kubernetes Cluster. The Desired State is expressed through the various YAML files in a declarative way. Therefore, when a pod crashes, Kubernetes will detect a difference between the Actual and Desired State and will schedule a new pod in the Kubernetes Cluster. Let’s try that, and delete our one and only pod that is currently running SQL Server:

kubectl delete pod mssql-7pv2j

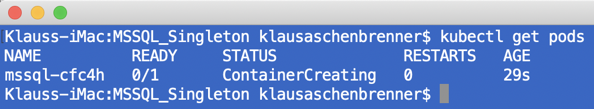

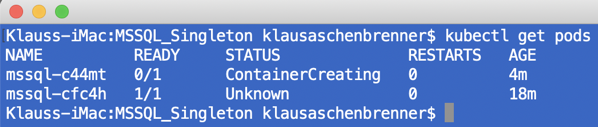

Our pod is gone! If you check the number of pods again (with kubectl get pods), you can see that Kubernetes itself has already triggered the creation of a new pod.

And after some time that pod will be in the Running state again. The great thing is that the Persistent Volume of the old pod was attached to the new pod, and therefore we haven’t lost any data! If you have previously created a new SQL Server database, that database will still be accessible with the new pod. That’s the idea of Persistent Storage in Kubernetes. You can also see with the following command that the Persistent Volume was reattached to our pod:

kubectl describe pod mssql-cfc4h
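The difference between Desired and Actual State that the Reconciliation Loop acts on can be made visible for our ReplicaSet. The following is a minimal sketch with a hypothetical helper name replica_state; it reads the requested replica count from the spec and the number of ready replicas from the status of the ReplicaSet.

```shell
#!/usr/bin/env bash

# Print the desired and the currently ready replica count of a ReplicaSet.
# status.readyReplicas is omitted by the API while no replica is ready,
# so an empty value is reported as 0.
replica_state() {
  local desired ready
  desired=$(kubectl get rs "$1" -o jsonpath='{.spec.replicas}')
  ready=$(kubectl get rs "$1" -o jsonpath='{.status.readyReplicas}')
  echo "desired=${desired} ready=${ready:-0}"
}
```

If you run replica_state mssql right after deleting the pod, the two numbers should differ for a short time, and they should match again once the replacement pod is ready.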

So far, so good. In our case we have deleted the pod in an imperative way. But what happens if we completely shut down the VM that currently hosts the Kubernetes node on which our pod runs? In that case Kubernetes will also detect the difference between the Desired and Actual State and will schedule a new pod on a different Kubernetes node. Let’s try that by just shutting down the correct VM through the Azure Portal.

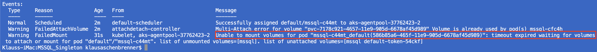

And now really terrible things are happening: the pod on the old Kubernetes node has crashed, and Azure Kubernetes Service now tries to schedule a new pod on a different Kubernetes node. But the big problem is that Azure Kubernetes Service is NOT able to reattach the Persistent Volume to the newly created pod, because it is still attached to the old (unavailable) pod! Ouch…

More or less we have now crashed our whole Kubernetes deployment! It seems that attaching a Persistent Volume to a new pod is currently not really supported/implemented in Azure Kubernetes Service when that volume is already attached to another pod. That is bad when that pod is not available anymore.

If you check your pods, you can see that the old one is in the Unknown state, and the new one is forever in the ContainerCreating state.
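To dig into such a stuck state, it helps to see on which node each pod was placed and which events the cluster recorded. The following is only a diagnostic sketch with hypothetical helper names. The event reason FailedAttachVolume used in the usage example is an assumption about what the cluster reports in this situation; I can't confirm it from the screenshots.

```shell
#!/usr/bin/env bash

# Show the state of every SQL Server pod together with the node it was
# placed on (the -o wide output adds the NODE column).
mssql_pods() {
  kubectl get pods -l app=mssql -o wide
}

# List only the events that carry the given reason.
# $1 is the reason, e.g. FailedAttachVolume (assumed, not confirmed).
events_by_reason() {
  kubectl get events --field-selector "reason=$1"
}
```

For example, mssql_pods followed by events_by_reason FailedAttachVolume would show whether the new pod is waiting for a volume that could not be attached.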

To be honest: that screws up everything! The idea behind Kubernetes is a self-healing system which constantly compares the Desired and Actual State through the various Reconciliation Loops. With that behavior Azure Kubernetes Service violates that principle.

Summary

I really like the idea behind Kubernetes, and that Microsoft tries everything to be able to run SQL Server in Kubernetes. But the more I dig into the internals of the Kubernetes Integration in SQL Server, the more scared I get. We are currently getting a CTP 2.3 version of SQL Server 2019 from Microsoft, and SQL Server 2019 should be available in an RTM version later this year.

But to be honest: the whole Kubernetes integration doesn’t really work currently. I have already blogged about the various problems that you have with Availability Groups, and today I have shown you that even a simple stand-alone SQL Server Instance can’t be run in a reliable and highly available way in Kubernetes. Currently it is so easy to just crash everything…

I also have to thank Andrew Pruski and Anthony Nocentino, who helped me to understand this bug and to confirm it. Thanks guys! And there is also another blog posting, which describes some other limitations of Azure Kubernetes Service that you currently have to face…

If you want to learn more about SQL Server on Linux, Docker, and Kubernetes, I highly suggest my upcoming Live Online Training on May 13 and May 14, where I will do a more technical deep-dive into all these exciting new technologies that will change our lives as SQL Server Developers and DBAs over the next years.

Thanks for your time,

-Klaus

3 thoughts on “Deploying a stand-alone SQL Server Instance into a Kubernetes Cluster”

Shouldn’t the server not be controlled by the StatefulSet instead of the ReplicaSet?

Interesting information. Thanks for sharing.

Hi,

I tried your yaml files, but seems the pods aren’t attached to PV