I’m a big believer in the SDDC (Software Defined Data Center), especially on the VMware platform. For an SDDC, VMware offers the following products:

- VMware ESXi Hypervisor for CPU and Memory Virtualization

- VMware vSAN for Storage Virtualization

- VMware NSX-T for Network Virtualization

As you already know from my previous blog postings, I’m also a huge fan of vSAN, which I’m using in the Home Lab that I deployed a year ago. Because I had no real knowledge of or hands-on experience with NSX-T, I thought that it was time to change that. Therefore I want to give you in this blog posting an overview of how you can install VMware NSX-T in your own environment.

If you want to get an overview of the benefits of Network Virtualization and how NSX-T can help you to achieve them, I highly recommend watching the following YouTube videos as a general introduction:

After watching at least the 30 minutes of the NSX Component Overview video you should have a general idea about the NSX-T architecture and how the various components that you have to deploy fit into that architecture.

In my case I will deploy the NSX-T Manager, the NSX-T Controllers and the NSX-T Edges as VMs on the physical ESXi Hosts in my Home Lab, and I will use the 7 Nested ESXi Hosts (that I have prepared in a previous blog posting) as Transport Nodes within my NSX-T deployment.

Deploying the NSX-T Manager

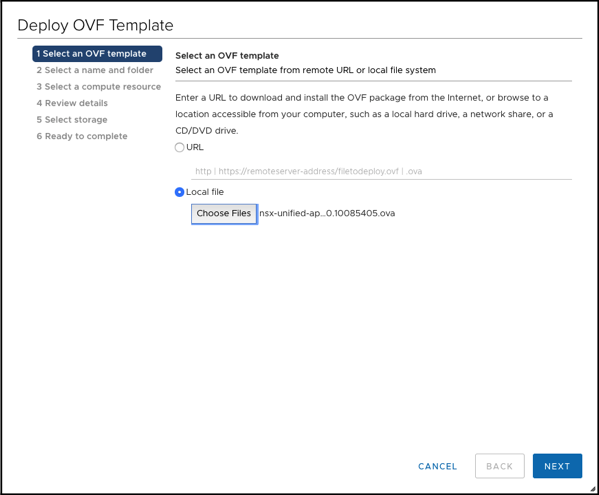

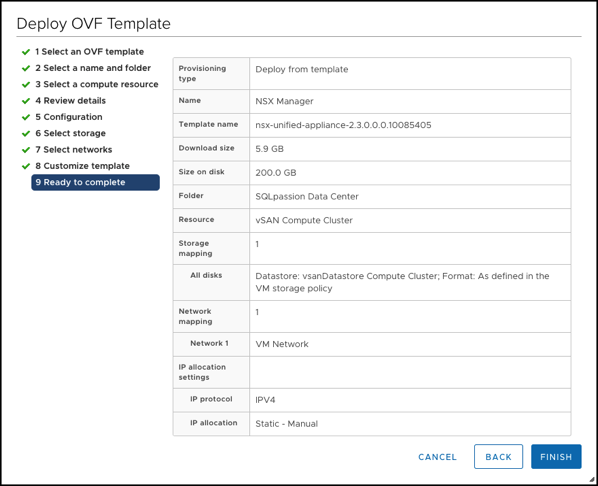

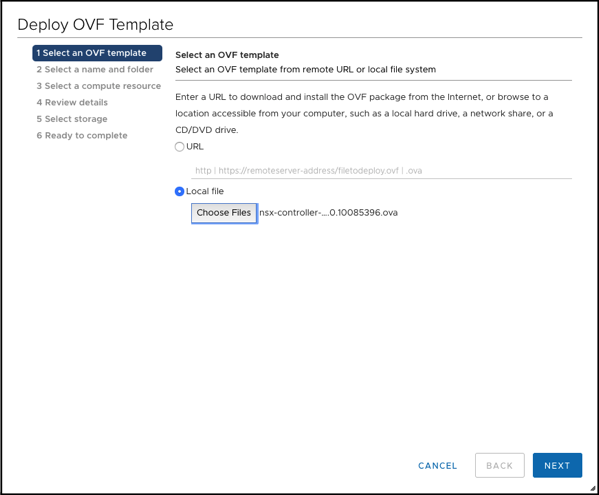

So let’s start by deploying the NSX-T Manager, which acts as the Management Plane for NSX-T. The NSX-T Manager is provided as an OVF Template file, which can be deployed into your vSphere environment in a few simple steps.

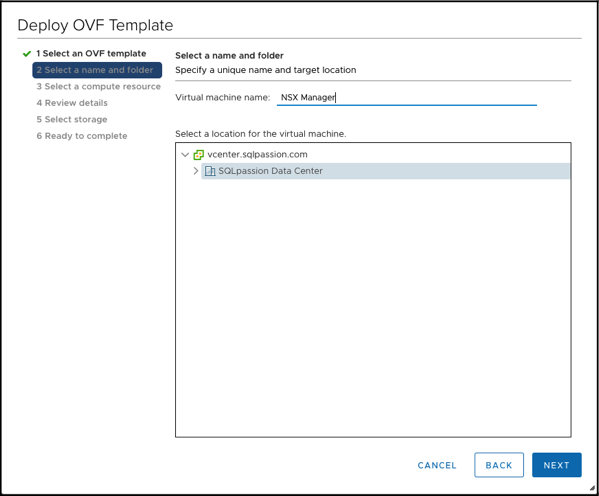

I gave the Virtual Machine the name NSX Manager:

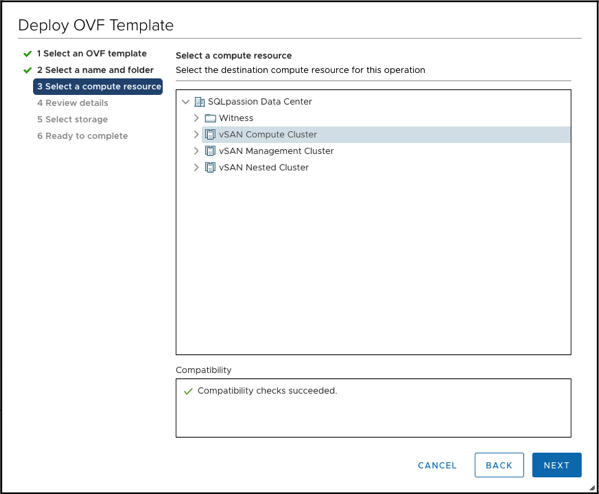

The VM for the NSX Manager is deployed into my vSAN Compute Cluster.

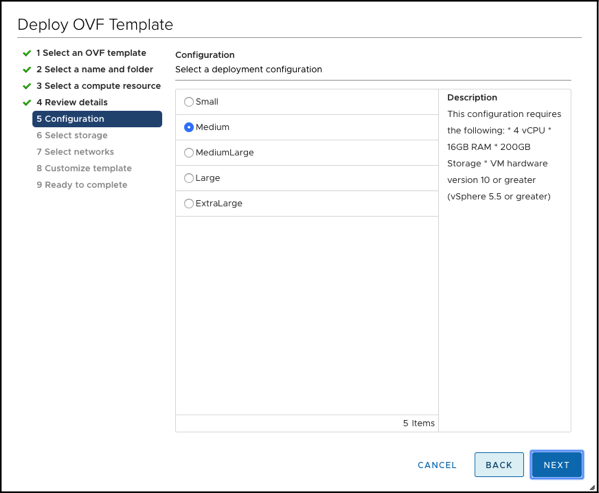

In my case I have deployed the NSX-T Manager with a Small Deployment Configuration that allocates the following resources to the VM:

- 4 vCPUs

- 16 GB RAM

- 200 GB Storage

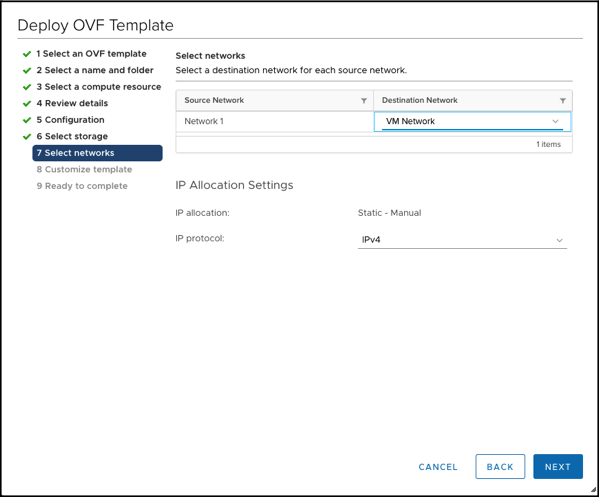

I have attached the NSX-T Manager VM to my VM Network on the 192.168.1.0/24 subnet.

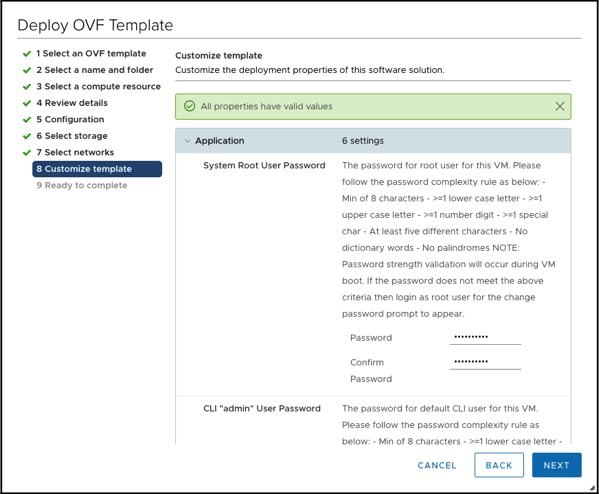

As a next step you have to configure the passwords for the various users (root, admin, audit).

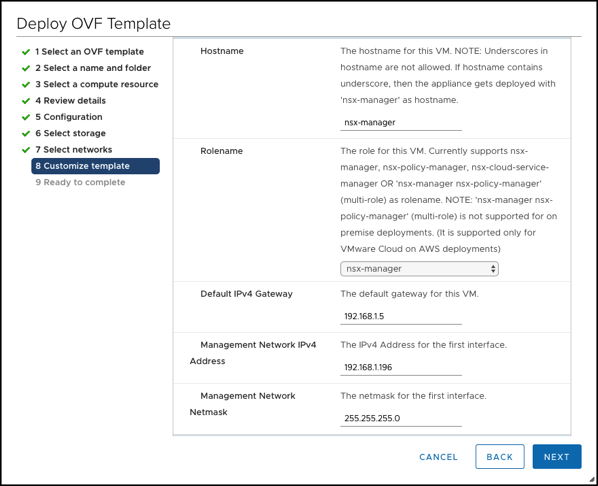

And finally you have to configure the network settings of the NSX-T Manager VM. I have chosen the following settings:

- Default Gateway: 192.168.1.5 (which is a physical Router in my Home Lab)

- IP Address: 192.168.1.196

- Management Network Netmask: 255.255.255.0

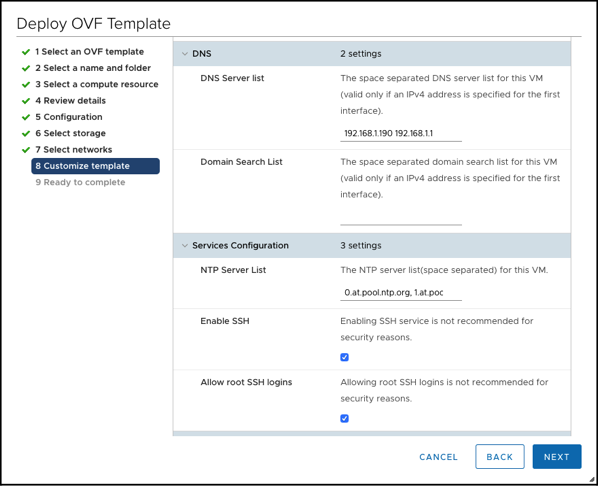

- DNS Server List: 192.168.1.190 192.168.1.1

And after you have acknowledged everything, the deployment of the NSX-T Manager VM starts…

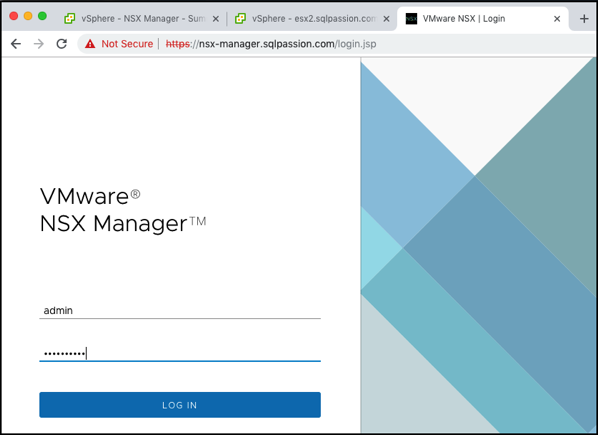

After the deployment, your NSX-T Manager is reachable through your network, and you are able to log into it.

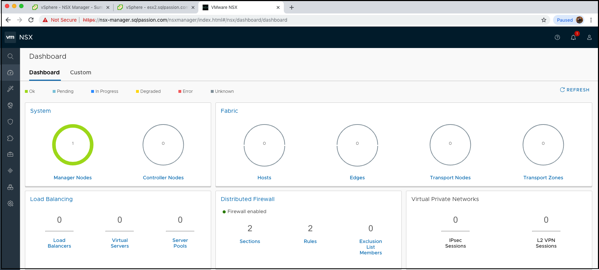

One of the most important things within the NSX-T Manager is the Dashboard. In my case only the Manager Nodes are green, because I haven’t yet deployed Controller Nodes and the whole Fabric is currently just unconfigured…

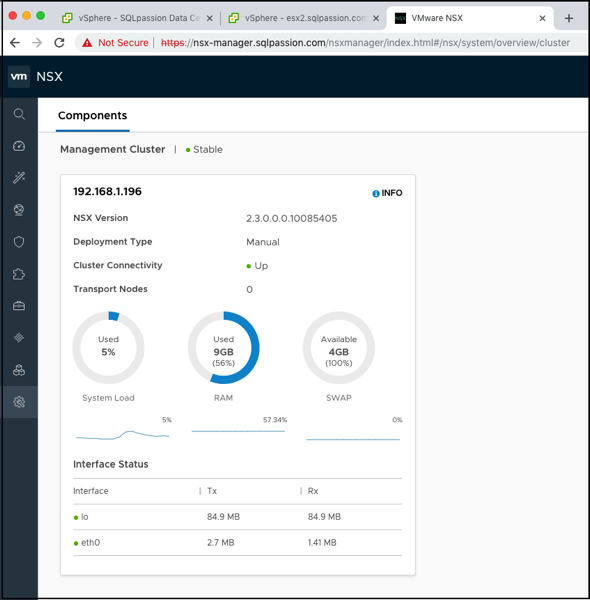

When you look at the various components, you can also see that only the NSX-T Manager is deployed.

Deploying the NSX-T Controllers

The next step in your NSX-T deployment is to deploy the NSX-T Controllers, which will finally be joined into a Control Cluster. That Control Cluster acts as the Control Plane within your NSX-T environment.

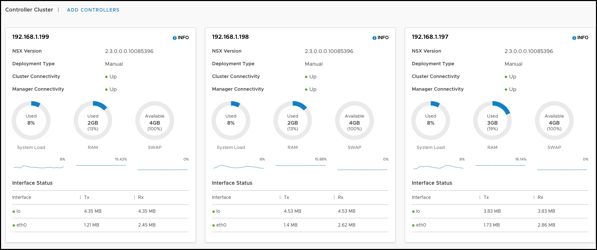

To have a fully working, redundant NSX-T Control Cluster, you have to deploy at least 3 NSX-T Controllers. Therefore I have also deployed 3 individual NSX-T Controller VMs. The NSX-T Controller is also provided as an OVF Template that you can deploy into your vSphere environment.
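Why 3 and not 2? A quick sketch of the arithmetic, under the assumption (mine, not stated in the VMware material referenced here) that the Control Cluster needs a strict majority of its nodes to stay operational. The function names are just illustrative helpers.

```python
def majority(nodes: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """How many nodes may fail while a majority is still available."""
    return nodes - majority(nodes)


# Assumption: the cluster stays operational only while a majority is reachable.
for n in (1, 2, 3):
    print(f"{n} node(s): majority={majority(n)}, "
          f"tolerated failures={tolerated_failures(n)}")
```

Under that assumption, 2 Controllers tolerate no more failures than 1, and 3 is the smallest cluster size that survives the loss of a single node.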

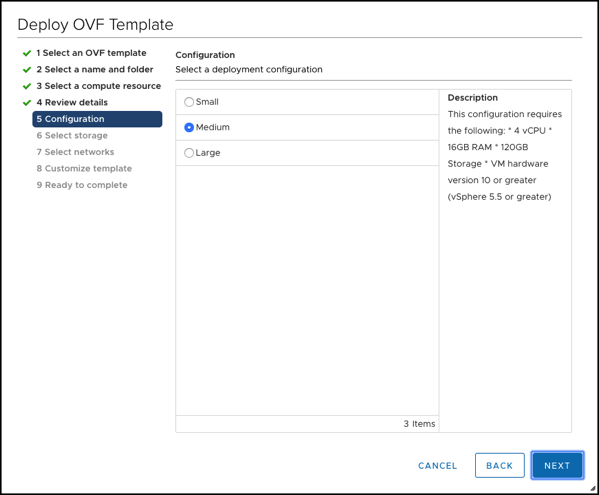

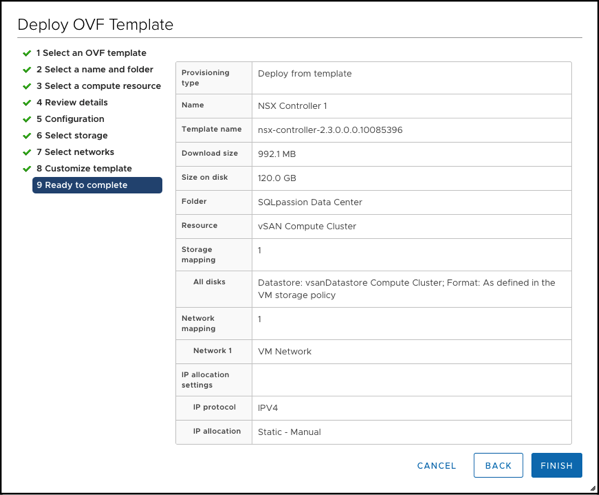

For the NSX-T Controller VMs I have used the Medium Deployment Configuration, which allocates the following resources to each VM:

- 4 vCPUs

- 16 GB RAM

- 120 GB Storage
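To get a feeling for what the Manager plus 3 Controllers will claim from the Compute Cluster, the numbers from the two deployment configurations can simply be added up. This is only a rough sketch: the `VmSize` class is a hypothetical helper, and the storage figure is the provisioned size without any vSAN policy overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSize:
    vcpus: int
    ram_gb: int
    storage_gb: int


# Values taken from the deployment configurations described in this post.
manager = VmSize(vcpus=4, ram_gb=16, storage_gb=200)
controller = VmSize(vcpus=4, ram_gb=16, storage_gb=120)
controller_count = 3

# One Manager VM plus three identical Controller VMs.
total = VmSize(
    vcpus=manager.vcpus + controller_count * controller.vcpus,
    ram_gb=manager.ram_gb + controller_count * controller.ram_gb,
    storage_gb=manager.storage_gb + controller_count * controller.storage_gb,
)
print(total)
```

That adds up to 16 vCPUs, 64 GB RAM and 560 GB of provisioned storage, before any NSX-T Edges are deployed.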

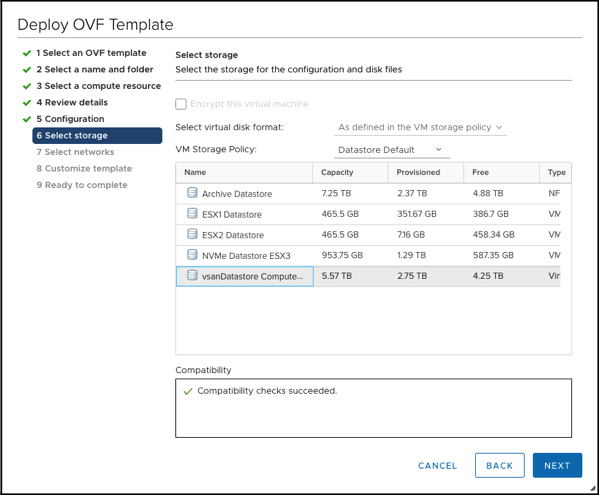

And the NSX-T Controller VMs are also stored in the vSAN Datastore of my physical Compute Cluster.

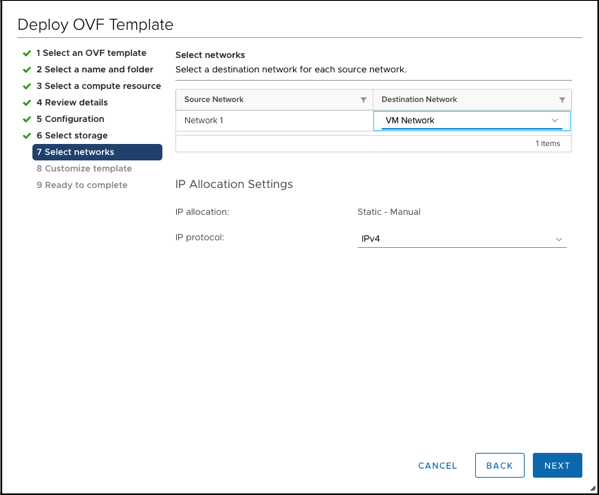

The NSX-T Controller VMs are also attached to the VM Network so that they can communicate with the NSX-T Manager.

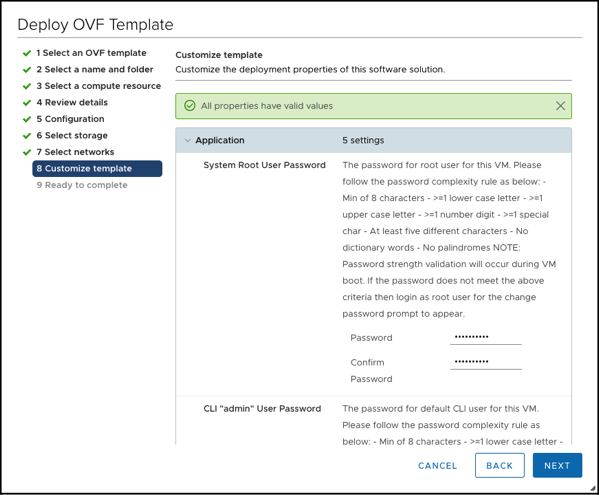

Afterwards you have to configure the passwords for the various accounts (root, admin, audit).

And finally we have to configure the network settings.

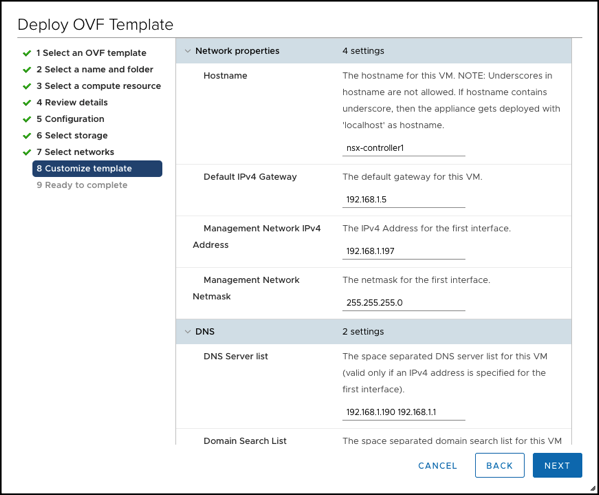

I have used here the following configuration for my 3 NSX-T Controller VMs:

- NSX-T Controller 1
  - Default Gateway: 192.168.1.5
  - IP Address: 192.168.1.197
  - Management Network Netmask: 255.255.255.0
  - DNS Server List: 192.168.1.190 192.168.1.1

- NSX-T Controller 2
  - Default Gateway: 192.168.1.5
  - IP Address: 192.168.1.198
  - Management Network Netmask: 255.255.255.0
  - DNS Server List: 192.168.1.190 192.168.1.1

- NSX-T Controller 3
  - Default Gateway: 192.168.1.5
  - IP Address: 192.168.1.199
  - Management Network Netmask: 255.255.255.0
  - DNS Server List: 192.168.1.190 192.168.1.1
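Because the same gateway and netmask are typed into four OVF wizards, a small sanity check of the address plan can catch typos before deployment. The following is a minimal sketch using Python's standard `ipaddress` module; the `check_plan` function and the dictionary layout are illustrative assumptions, only the addresses come from this post.

```python
import ipaddress

netmask = "255.255.255.0"
gateway = ipaddress.ip_address("192.168.1.5")

# Management addresses used in this post.
nodes = {
    "NSX-T Manager": "192.168.1.196",
    "NSX-T Controller 1": "192.168.1.197",
    "NSX-T Controller 2": "192.168.1.198",
    "NSX-T Controller 3": "192.168.1.199",
}

# Derive the management subnet from the gateway address and the netmask.
network = ipaddress.ip_network(f"{gateway}/{netmask}", strict=False)


def check_plan(nodes, gateway, network):
    """Return a list of human-readable problems (empty if the plan is fine)."""
    problems = []
    addresses = [ipaddress.ip_address(a) for a in nodes.values()]
    if len(set(addresses)) != len(addresses):
        problems.append("duplicate IP address in plan")
    for name, addr in zip(nodes, addresses):
        if addr not in network:
            problems.append(f"{name} ({addr}) is outside {network}")
        if addr == gateway:
            problems.append(f"{name} uses the gateway address {gateway}")
    return problems


print(network, check_plan(nodes, gateway, network))
```

An empty list means every node has a unique address inside the management subnet and none of them collides with the gateway.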

And finally let’s acknowledge our configuration and start the deployment of the NSX-T Controller VMs:

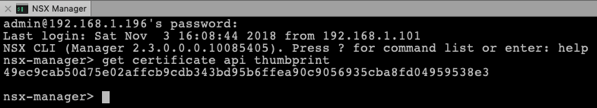

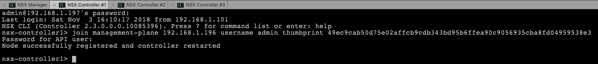

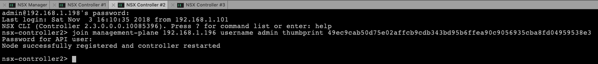

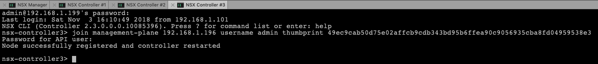

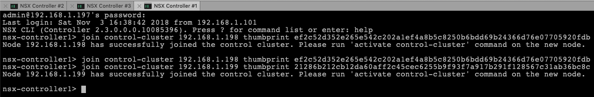

Join the NSX-T Controllers with the NSX-T Manager

By now you should have a working NSX-T Manager VM and 3 NSX-T Controller VMs. The next step is to join the Controller VMs with the Manager VM. These configuration changes have to be made through the SSH Shell of the individual VMs. First you have to retrieve the Certificate API Thumbprint from the NSX-T Manager. You can get it with the following command:

get certificate api thumbprint

The returned thumbprint is needed to join the Controller VMs with the Manager VM. You can use the following command for that:

join management-plane 192.168.1.196 username admin thumbprint …

I have executed this command on each NSX-T Controller VM and have specified the thumbprint retrieved earlier from the NSX-T Manager VM.
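Since the same command is pasted into three SSH sessions, it can help to assemble it once and copy it from there. Here is a minimal sketch: `join_command` is a hypothetical helper, the thumbprint value is a placeholder that has to be replaced with the real output from the Manager, and the only check performed is that the pasted value is non-empty and free of whitespace.

```python
MANAGER_IP = "192.168.1.196"
CONTROLLERS = ["192.168.1.197", "192.168.1.198", "192.168.1.199"]

# Placeholder only: replace with the value returned by
# `get certificate api thumbprint` on the NSX-T Manager.
THUMBPRINT = "<api-thumbprint>"


def join_command(manager_ip: str, thumbprint: str, username: str = "admin") -> str:
    """Build the join command used in this post for one Controller VM."""
    cleaned = thumbprint.strip()  # copy/paste from SSH often adds a newline
    if not cleaned or any(ch.isspace() for ch in cleaned):
        raise ValueError("thumbprint must be a single non-empty token")
    return f"join management-plane {manager_ip} username {username} thumbprint {cleaned}"


for controller in CONTROLLERS:
    print(f"[run on {controller}] {join_command(MANAGER_IP, THUMBPRINT)}")
```

The command is identical on all three Controllers, because it always points at the Manager and uses the Manager's thumbprint.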

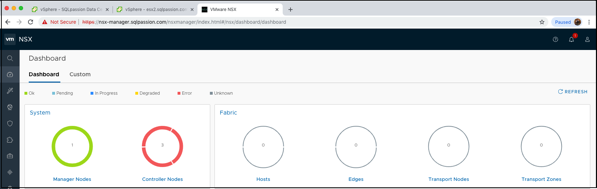

When you afterwards have a look at the Dashboard within the NSX-T Manager, you can see that you now have 3 attached Controllers. But the Controllers are still in an error state, because we haven’t yet configured the Controller Cluster.

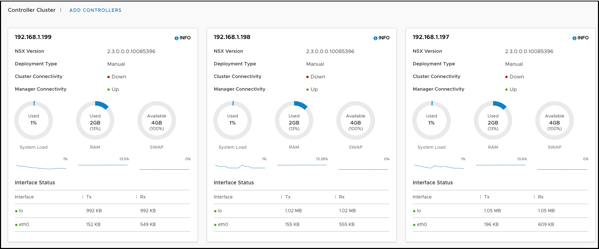

You can also verify within the NSX-T Manager that the Cluster Connectivity is currently down, because it’s not yet configured:

Configure the NSX-T Controller Cluster

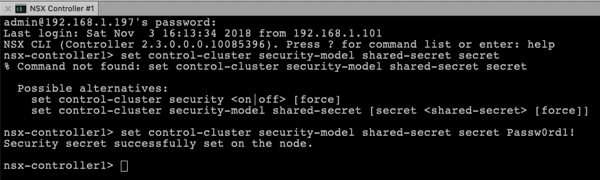

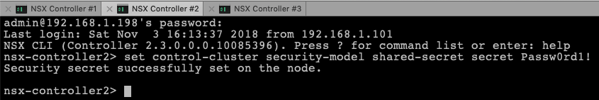

Let’s fix that problem now by configuring the NSX-T Controller Cluster. All the necessary steps again have to be done through the SSH Shell. To be able to create the Controller Cluster you have to set up a shared secret. This has to be done on the first node of your Controller Cluster with the following command:

set control-cluster security-model shared-secret secret Passw0rd1!

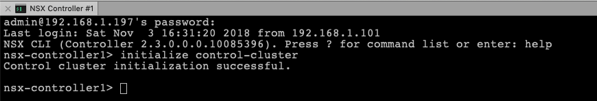

And afterwards you have to initialize the Controller Cluster with the following command on the first node of your Controller Cluster:

initialize control-cluster

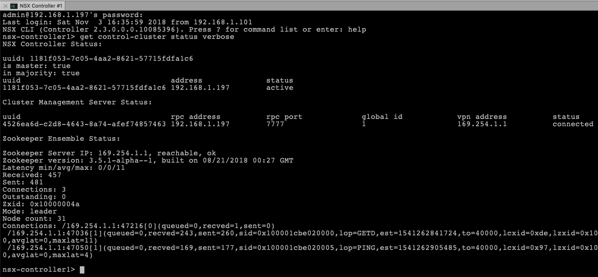

Once you have initialized the first node of the NSX-T Control Cluster, you can check its status with the following command:

get control-cluster status verbose

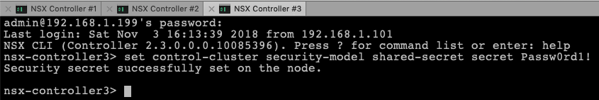

In the next step you have to set the Security Model again on the 2 remaining Cluster Nodes, providing the same shared secret as on the first Cluster Node.

set control-cluster security-model shared-secret secret Passw0rd1!

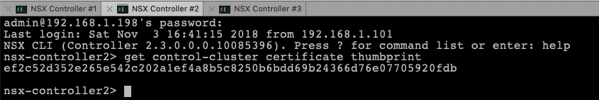

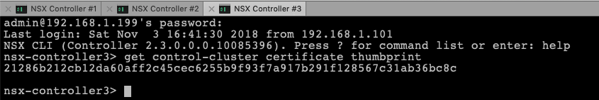

Then you have to retrieve the Certificate Thumbprint of each of the 2 remaining Cluster Nodes by running the following command on each of them:

get control-cluster certificate thumbprint

And finally you are able to join the 2 remaining Cluster Nodes into the Controller Cluster by executing the following 2 commands on the first Cluster Node:

join control-cluster 192.168.1.198 thumbprint …

join control-cluster 192.168.1.199 thumbprint …

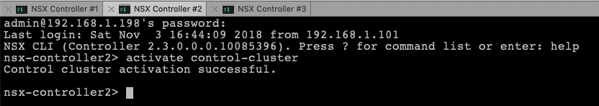

And to finish the configuration of the Controller Cluster, you have to execute the following command on the 2 Cluster Nodes that have joined the Controller Cluster:

activate control-cluster

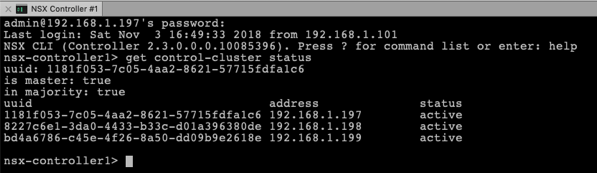

If you now run the following command on the first Cluster Node, you should be able to verify the functionality of your Controller Cluster:

get control-cluster status

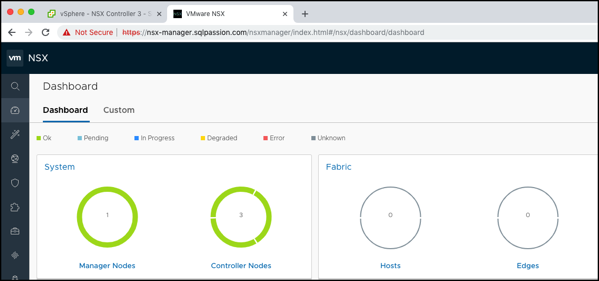
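Because the cluster setup jumps back and forth between the nodes, it is easy to run a command on the wrong VM. The following sketch prints the sequence from this section as an ordered runbook of (node, command) pairs. It only uses the commands shown above; `cluster_runbook` is a hypothetical helper, and the secret and thumbprints are placeholders that you have to fill in yourself.

```python
FIRST_NODE = "192.168.1.197"
OTHER_NODES = ["192.168.1.198", "192.168.1.199"]


def cluster_runbook(first, others, secret="<shared-secret>"):
    """Return (node, command) pairs in the order used in this post."""
    set_secret = f"set control-cluster security-model shared-secret secret {secret}"
    steps = [
        (first, set_secret),
        (first, "initialize control-cluster"),
    ]
    # Prepare the remaining nodes and note down their thumbprints.
    for node in others:
        steps.append((node, set_secret))
        steps.append((node, "get control-cluster certificate thumbprint"))
    # The join commands are executed on the first node, one per remaining node.
    for node in others:
        steps.append(
            (first, f"join control-cluster {node} thumbprint <thumbprint-of-{node}>")
        )
    # Activation happens on the nodes that have just joined.
    for node in others:
        steps.append((node, "activate control-cluster"))
    steps.append((first, "get control-cluster status"))
    return steps


for number, (node, command) in enumerate(cluster_runbook(FIRST_NODE, OTHER_NODES), 1):
    print(f"{number:2d}. [{node}] {command}")
```

Treat the output as a checklist rather than something to pipe into a shell, since the thumbprints only exist after the corresponding `get` step has been executed.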

If you now check the Dashboard within the NSX-T Manager again, everything should be green.

Next Steps

By now you have a fully functional NSX-T deployment, and you are able to create your first Overlay Network and configure your Virtual Networking topology. To dig deeper into these additional steps I highly recommend reading the following 3 blog postings from Cormac Hogan:

- Building a simple ESXi Host Overlay Network with NSX-T

- First Steps with NSX-T Edge – DHCP Server

- Next Steps with NSX-T Edge – Routing and BGP

Based on these 3 blog postings I was able to configure my first virtual network and hook it up with my physical network. Happy networking and thanks for your time!

-Klaus