As you might know, I already have a quite powerful mobile Home Data Center that I built in November 2016. I primarily use that lab environment to showcase SQL Server environments in the various SQL Server workshops that I offer.

But over the last year I have also become more and more involved with virtualization technologies, especially with VMware ESXi and VMware’s Software Defined Storage solution called vSAN. Almost every time I do a SQL Server consulting engagement, the whole SQL Server infrastructure is virtualized – and almost all the time the customers are using VMware technologies. Therefore my goal over the last few months was to build a VMware vSAN powered Home Lab, and to make it as real and powerful as possible. And now it’s finally time to share the results, and the approach that I have taken, with you.

Home Lab Overview

When we moved into our newly built house in Vienna 11 years ago, I already had the vision of having my own powerful Home Lab, on which I can run serious SQL Server workloads and play around with the latest hardware technologies. Therefore I bought a rack with 26 rack units in 2007. Back then, the rack was equipped with a cheap Netgear switch and a router for the internet connection. Each room in our house is wired with a 1 GBit network connection to the Netgear switch (through a Patch Panel) in the rack in the basement. So the rack with its 26 rack units was simply overprovisioned…

But in the summer of 2017 I came back to my original idea of a serious Home Lab deployment, and I started thinking about how I could achieve my goals:

- I want to have real x86 servers, which can be fitted with rails into the rack. So no Desktop Tower PCs.

- All servers should be wired up with a 10 GBit Ethernet connection to make the communication between them as fast as possible. A 10 GBit connection would give me a throughput of around 1.2 GB per second.

- I want to deploy a whole SDDC (Software Defined Data Center) environment (Computing, Storage, and Networking are virtualized), where no slow, expensive shared storage is involved, because that would be over my budget.

- I want to have as much fun as possible 😉
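As a quick sanity check of the 10 GBit figure in the list above, here is a minimal Python sketch of the conversion. The overhead numbers assume standard 1500-byte Ethernet frames carrying IPv4 and TCP without options. That is an assumption for illustration, not something measured in this lab.

```python
# Rough conversion from Ethernet line rate to usable throughput.
# Assumptions: 1500-byte MTU, IPv4 + TCP headers without options.

ETHERNET_OVERHEAD_BYTES = 8 + 14 + 4 + 12  # preamble/SFD, header, FCS, inter-frame gap
IP_TCP_HEADER_BYTES = 20 + 20              # IPv4 header + TCP header


def raw_gb_per_second(line_rate_gbit: float) -> float:
    """Line rate in gigabytes per second (8 bits per byte)."""
    return line_rate_gbit / 8


def tcp_goodput_gb_per_second(line_rate_gbit: float, mtu: int = 1500) -> float:
    """Approximate TCP payload throughput after per-frame overhead."""
    bytes_on_wire = mtu + ETHERNET_OVERHEAD_BYTES
    payload_bytes = mtu - IP_TCP_HEADER_BYTES
    return raw_gb_per_second(line_rate_gbit) * payload_bytes / bytes_on_wire


if __name__ == "__main__":
    print(f"raw:     {raw_gb_per_second(10):.2f} GB/s")
    print(f"goodput: {tcp_goodput_gb_per_second(10):.2f} GB/s")
```

Under these assumptions the raw rate is 1.25 GB per second, and the TCP payload rate lands just below 1.2 GB per second.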

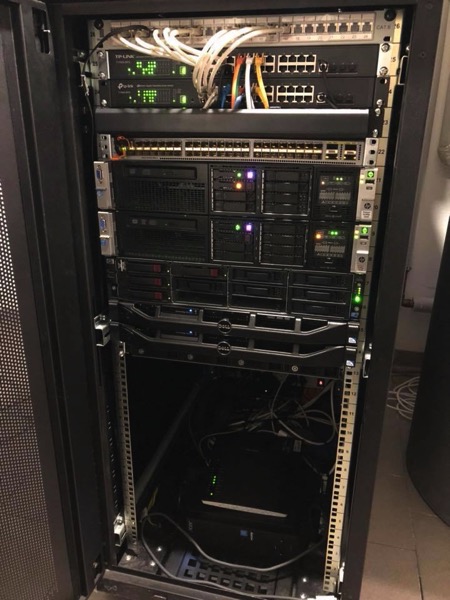

Based on these requirements, I decided to buy a few real (refurbished) x86 servers, and start building my Home Lab. As of today, my Home Lab looks like the following:

As you can see from this picture, I’m now using 14 rack units for my whole Home Lab deployment – 12 rack units (at the bottom) are (currently) empty… The whole rack can be divided into 3 logical units:

- Networking Stack

- Management Stack

- Compute Stack

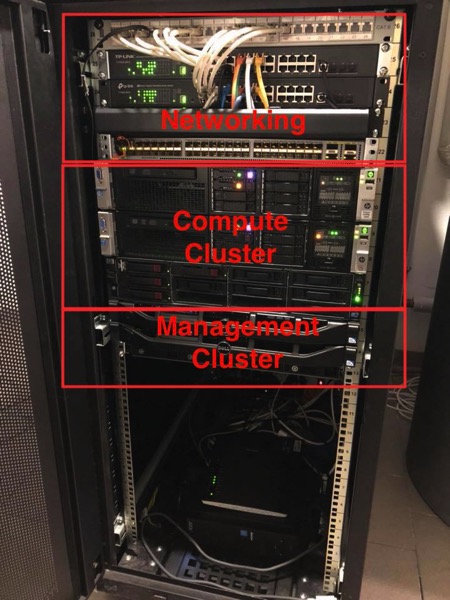

The following picture shows these 3 logical units:

Networking Stack

As I stated previously in my requirements, I wanted to have a scalable 10 GBit Ethernet network to make the connection between the individual servers as fast as possible. Back in 2007 I bought a Netgear switch with some 1 GBit ports. Over the years that switch was replaced with a TP Link T1700G-28TQ switch, which offers 24x 1 GBit ports and 4 additional 10 GBit ports.

In theory one of these switches would have been enough, because I just wanted to have a 3-node Compute Cluster based on VMware vSAN. But as soon as you think more about it, you realize that only 4x 10GBit ports leave no room for further expansion. And to be serious: a TP Link is not a real Data Center switch 😉

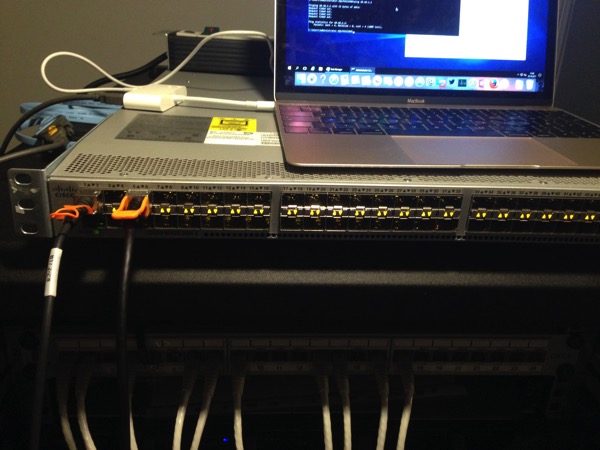

Therefore I talked to my friend David Klee from the US, and he suggested a Cisco Nexus 3064 switch, mainly for 2 reasons:

- They can be bought quite cheaply on eBay

- They have 48x (!) 10GBit ports with 4 additional 40GBit (!) ports (based on SFP+ and QSFP+ connections)

And there we go – here is my first real Cisco switch:

Over the last few weeks I also decided to buy an additional TP Link switch, because I was running out of 1 GBit ports – 24 ports are not that many, as you will see later when I talk about the physical networking design. And with 2 switches I can also wire my 1 GBit network connections redundantly. When we zoom into the rack, you can see that I’m using the top 5 rack units for networking:

- Rack Unit #26: Patch Panel for the 1 GBit connections from the various rooms in our house

- Rack Unit #25: The 1st TP Link switch

- Rack Unit #24: The 2nd TP Link switch

- Rack Unit #23: Empty. I’m using that empty slot to route the network cables from the back of the rack to the switch ports on the front

- Rack Unit #22: The Cisco Nexus 3064 switch

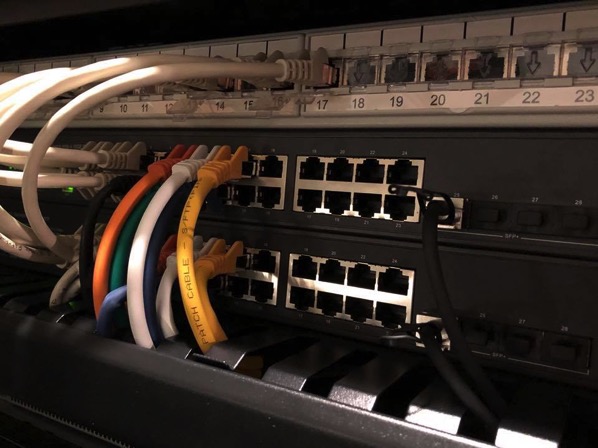

From each server I have a redundant management network connection, with one cable going to each of the TP Link switches. Each network cable is also color coded, as you can see from the following pictures.

Management Stack

Initially my idea was to have a simple vSAN powered Compute Cluster, on which I would also run my management VMs like the Domain Controller and the VMware vCenter Server Appliance. But this approach was not a good one in terms of power consumption. Therefore I have introduced a lightweight Management Stack in my Home Lab. The Management Stack consists of 2 Dell R210 II servers, which are running 4 Management VMs (spread across the 2 servers):

- VCSA Appliance for VMware vSphere

- Windows Based Domain Controller, DNS Server, and VPN Server

- VMware Log Insight

- vRealize Operations Manager

I bought these 2 Dell servers several years ago, but I never really used them. Each server has the following hardware:

- 32 GB RAM (more is not possible)

- A HDD based Boot Drive (it came with the server)

- 1x 512 GB Samsung EVO SSD

- 1x 1 TB Samsung EVO SSD

- 1 Mellanox NIC for 10GBit Connection

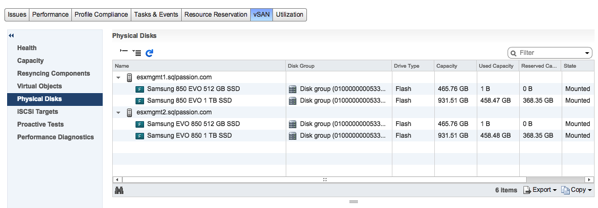

Based on that hardware I was able to create a 2-node VMware vSAN powered Cluster with a Direct Connect 10GBit Ethernet connection. This was a nice proof-of-concept deployment, because you don’t need an expensive 10GBit network switch if you deploy this in a ROBO (Remote Office/Branch Office) scenario. The 512 GB SSD is used for the Caching Tier, and the 1 TB SSD is used for the Capacity Tier. The following picture gives you an overview of the involved vSAN Disk Groups:

A 2-node VMware vSAN Cluster sounds cool, but what about the required witness component? Initially I had the idea to use an old Acer Shuttle PC in combination with ESXi as the required third witness host. But unfortunately I wasn’t able to successfully install ESXi on that hardware. Therefore I resorted to a quite interesting “hack”: I’m running VMware Workstation 12 on Windows 10, and the Witness Appliance is deployed as a VM on VMware Workstation. This is of course not supported, but it works – with the great help of William Lam.

As you can see from this description, the Management Cluster is not really powerful, and therefore it doesn’t consume that much power. That’s the reason why the Management Cluster is always up and running in my Home Lab. I never shut it down. One reason is that I’m able to access VMware vSphere all the time, and the other reason is that the Windows Domain Controller VM also runs my VPN Server. And that of course must also always be up and running, so that I can access my Home Lab from the road when I’m travelling.
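To illustrate why a 2-node cluster needs that third witness component, here is a minimal Python sketch of a majority check for a single mirrored object. It is a simplified model: every component gets one vote, the host names are hypothetical, and real vSAN vote handling is more involved than this.

```python
# Simplified quorum model for one mirrored vSAN object (FTT=1).
# Host names are hypothetical; real vSAN vote handling is more involved.

def object_accessible(components: dict[str, str], failed_hosts: set[str]) -> bool:
    """components maps host name -> "replica" or "witness" (one vote each)."""
    alive = {host: kind for host, kind in components.items() if host not in failed_hosts}
    has_majority = len(alive) * 2 > len(components)   # strictly more than 50% of votes
    has_full_replica = "replica" in alive.values()    # at least one data copy survives
    return has_majority and has_full_replica


two_nodes_only = {"mgmt-esx-1": "replica", "mgmt-esx-2": "replica"}
with_witness = {**two_nodes_only, "witness-vm": "witness"}

if __name__ == "__main__":
    for name, layout in (("without witness", two_nodes_only), ("with witness", with_witness)):
        ok = object_accessible(layout, failed_hosts={"mgmt-esx-1"})
        print(f"{name}: accessible after one host failure = {ok}")
```

With only two data nodes, losing one host leaves exactly half of the votes, which is not a majority. The witness adds the third vote that breaks the tie.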

The total power consumption of the always-on network switches (Cisco Nexus 3064 and 2x TP Link) and the 2 Dell R210 II servers is around 300 Watts. This costs me around 40 euros for power each month, which is acceptable. The Compute Cluster is only powered up (through Wake on LAN) when needed.
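The relationship between the wattage and the monthly bill can be sketched in a few lines of Python. The electricity price is not stated in this post, so the sketch derives an implied price from the 300 Watts and 40 euros mentioned above, assuming a 30-day month.

```python
# Electricity cost of an always-on load. Assumption: a 30-day month.

def monthly_kwh(watts: float, hours_per_day: float = 24.0, days: int = 30) -> float:
    """Energy used per month in kilowatt hours."""
    return watts * hours_per_day * days / 1000


def implied_price_per_kwh(monthly_bill_eur: float, watts: float) -> float:
    """Price per kWh that turns an always-on load into the given monthly bill."""
    return monthly_bill_eur / monthly_kwh(watts)


def monthly_cost(watts: float, price_per_kwh: float,
                 hours_per_day: float = 24.0, days: int = 30) -> float:
    """Monthly cost in euros for a load that runs hours_per_day."""
    return monthly_kwh(watts, hours_per_day, days) * price_per_kwh


if __name__ == "__main__":
    price = implied_price_per_kwh(40, 300)
    print(f"implied price: {price:.3f} EUR/kWh")
    print(f"300 W, 8 h per day: {monthly_cost(300, price, hours_per_day=8):.2f} EUR/month")
```

At 300 Watts around the clock this works out to 216 kWh per month, so the 40 euros imply a price of roughly 0.185 euros per kWh.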

Compute Stack

And now let’s concentrate on the real beef of my Home Lab – the Compute Stack. The Compute Stack consists of 3 Dual Socket HP Servers.

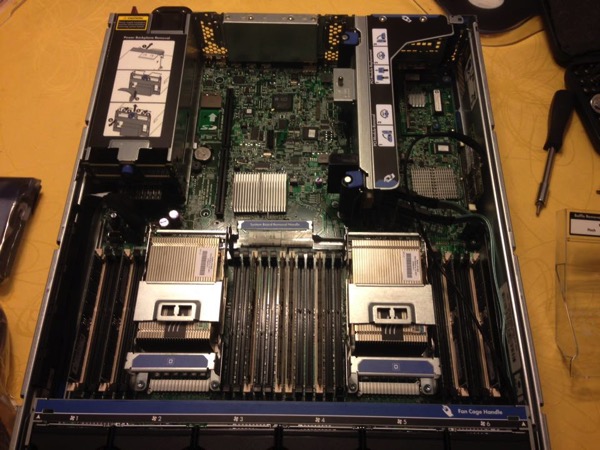

I’m using an older HP DL 180 G6 Server (which I had already bought back in 2011), and 2 newly bought HP DL 380 G8 Servers. Each server contains 2 CPU Sockets in a NUMA configuration. This was very important for me, because it gives me the ability to demonstrate NUMA configurations and their side effects when they are done wrong. The servers are using the following hardware configuration:

HP DL 180 G6 Server:

- 152 GB RAM (8x 16 GB + 3x 8 GB)

- 2x Intel Xeon E5620 CPU @ 2.4 GHz (8 Cores, 16 Logical Processors)

- Intel X710-DA4FH Nic with 4x 10GBit ports

- Intel Optane 900P 480 GB NVMe Disk

- Samsung PRO 850 1 TB SSD

- 200 GB HDD Disk for the Boot Drive

HP DL 380 G8 Server (2x):

- 128 GB RAM (8x 16 GB)

- 2x Intel Xeon E5-2650 CPU @ 2 GHz (16 Cores, 32 Logical Processors)

- Intel X710-DA4FH Nic with 4x 10GBit ports

- Intel Optane 900P 480 GB NVMe Disk

- Samsung PRO 850 1 TB SSD

- 16 GB SD Card (Boot Drive)
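For the NUMA demonstrations mentioned above, the rule of thumb is that a VM stays NUMA-local only if it fits into a single physical NUMA node. The following Python sketch applies that rule to the hardware listed above. It assumes one NUMA node per socket and memory spread evenly across both sockets, which is a simplification (especially for the mixed DIMMs in the DL 180 G6), and it ignores hypervisor overhead.

```python
# Does a VM fit into one physical NUMA node of a host?
# Assumptions: one NUMA node per socket, RAM evenly split across sockets.
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    sockets: int
    cores_per_socket: int
    ram_gb: int

    @property
    def ram_per_node_gb(self) -> float:
        return self.ram_gb / self.sockets


def fits_in_one_numa_node(host: Host, vcpus: int, vm_ram_gb: float) -> bool:
    """True if the VM needs no more cores and RAM than one node offers."""
    return vcpus <= host.cores_per_socket and vm_ram_gb <= host.ram_per_node_gb


dl180_g6 = Host("HP DL 180 G6", sockets=2, cores_per_socket=4, ram_gb=152)
dl380_g8 = Host("HP DL 380 G8", sockets=2, cores_per_socket=8, ram_gb=128)

if __name__ == "__main__":
    for host in (dl180_g6, dl380_g8):
        for vcpus, ram in ((4, 32), (8, 64), (12, 32)):
            verdict = "fits" if fits_in_one_numa_node(host, vcpus, ram) else "spans nodes"
            print(f"{host.name}: {vcpus} vCPUs / {ram} GB -> {verdict}")
```

A VM that exceeds either limit has to span both sockets, which is exactly the kind of configuration where remote memory access side effects show up.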

Over the next months I’m also planning to retire the HP DL 180 Server from the Compute Cluster and buy an additional HP DL 380 G8 Server, so that I have the same hardware and the same CPU configuration across all 3 nodes. Currently I’m running the Compute Cluster in a custom EVC mode to make vMotion operations possible. The HP DL 380 G8 Server is also able to support up to 384 GB RAM (24x 16 GB). This would mean that the Compute Cluster could give me more than 1 TB of RAM (3x 384 GB) – nice.

The 3-Node Compute Cluster is based on VMware vSAN. I’m using the Intel Optane 900P 480 GB NVMe Disk for the Caching Tier, and currently 1 Samsung PRO 850 1 TB SSD for the Capacity Tier. The HP DL 380 G8 Server has space for 8 SATA 2.5" SSD drives. Therefore I *could* have an overall storage capacity of 96 TB (3 servers with 8 slots each), if I filled each slot with a 4 TB Samsung 950 EVO SSD. A local SD Card was chosen as the boot drive on the HP DL 380 G8 Servers, so that I have as many SATA slots as possible available for the Capacity Tier.

In combination with vSAN this would give me a net capacity of around 50 TB in a RAID 1 configuration with a Failures to Tolerate (FTT) setting of 1, because every object is stored twice. One drawback of the 3-Node Compute Cluster is that I can’t test a RAID 5/6 configuration or an FTT of 2, because these need more than 3 hosts. But anyway, I would never ever recommend this configuration for a serious SQL Server deployment…

In each HP Server I have also installed an Intel X710-DA4FH network card with 4x 10GBit ports. They are wired up to the Cisco Nexus 3064 switch.

I’m currently using 2 ports of the network card: one port for the vSAN traffic, and another port for the vMotion traffic. In addition, the Cisco Nexus 3064 also has 4x 40GBit ports. So maybe I will install a 40GBit network card in each server in the near future and run the vSAN and/or vMotion traffic through a 40GBit network connection – let’s see…

Let’s now talk about the power consumption of the Compute Cluster. Each HP Server needs around 200 Watts when I don’t execute a heavy workload. So in sum the whole 3-node Compute Cluster needs 600 Watts – which is already something. It would cost me an additional 80 euros each month to have the Compute Cluster always up and running. But that’s not really necessary, because I don’t run a 24/7 workload on it.
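The capacity numbers above follow from simple arithmetic, sketched here in Python. The model only counts the mirror copies of a RAID 1 policy and ignores witness components, slack space, and file system overhead, so treat the result as an upper bound.

```python
# Raw vs. usable capacity for a vSAN RAID 1 (mirroring) policy.
# Simplification: ignores witness components, slack space, and overhead.

def raw_capacity_tb(hosts: int, slots_per_host: int, drive_tb: float) -> float:
    return hosts * slots_per_host * drive_tb


def usable_raid1_tb(raw_tb: float, ftt: int) -> float:
    """RAID 1 keeps ftt + 1 full copies of every object."""
    return raw_tb / (ftt + 1)


def min_hosts_raid1(ftt: int) -> int:
    """Mirroring needs 2 * ftt + 1 hosts (copies plus witness components)."""
    return 2 * ftt + 1


if __name__ == "__main__":
    raw = raw_capacity_tb(hosts=3, slots_per_host=8, drive_tb=4)
    print(f"raw: {raw} TB, usable with FTT=1: {usable_raid1_tb(raw, ftt=1)} TB")
    print(f"hosts needed for FTT=1: {min_hosts_raid1(1)}, for FTT=2: {min_hosts_raid1(2)}")
```

With 3 hosts, 8 slots per host, and 4 TB drives, the raw capacity is 96 TB and the mirrored capacity is 48 TB, which matches the "around 50 TB" mentioned above.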

I normally use the Compute Cluster during the day, and not even every day. That is why I have separated the Management Cluster from the Compute Cluster. With the Management Cluster up and running (and the VPN connection available), I’m able to start up the 3 HP Servers with Wake on LAN. And when I’m done with my work/research, I just shut down the 3 physical servers. It’s quite easy and flexible, and saves me some money.
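For readers who have not used Wake on LAN before, here is a minimal Python sketch of how a magic packet is built and sent. It is not necessarily the tool used in this lab. The MAC address is a placeholder, and the broadcast address and UDP port 9 are common defaults that I am assuming here.

```python
# Minimal Wake-on-LAN sender. The MAC address below is a placeholder.
import socket


def build_magic_packet(mac: str) -> bytes:
    """6 bytes of 0xFF followed by the target MAC address repeated 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"not a valid MAC address: {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Send the magic packet as a UDP broadcast on the local network."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))


if __name__ == "__main__":
    send_magic_packet("00:11:22:33:44:55")  # placeholder MAC of one server NIC
```

The packet has to reach the broadcast domain of the target NIC, so a sender like this would typically run on a machine inside the lab network, for example one that is reached over the VPN.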

Summary

So now you know about the design of my VMware vSAN powered Home Lab, and that I’m crazy! As I said at the beginning of this blog posting, I wanted to have a serious Home Lab environment to be able to work with VMware technologies as realistically as possible. I really like the concept of an SDDC (Software Defined Data Center) and that everything (Computing, Networking, and Storage) is virtualized.

If you want to know more about how to successfully run SQL Server on VMware vSphere, I’m also running an online training about it from May 7 – 9.

I hope that you have enjoyed this blog posting, and if you have further questions about my setup, please leave a comment below.

Thanks for your time,

-Klaus

11 thoughts on “How I designed my VMware vSAN based Home Lab”

How much did u spend for setting up everything?

Less than I needed for my Boeing 737-700 simulator 😉

Have you upgraded to vSphere 6.7? I’ve seen others complain that the DL380 Gen8 is not supported.

Hello Shane,

Thanks for your comment.

I will do the upgrade to 6.7 in about 3 weeks.

Thanks,

-Klaus

Did you manage to upgrade to vsphere 6.7 with your current hardware?

Hello,

I have already successfully updated to vSphere 6.7 Update 1.

Thanks,

-Klaus

Thanks for showing your home lab setup. Very Nice!

I’m also looking to build a vSan homelab. Have you found any issues (performance, etc.) with using the consumer/non-HCL storage?

Very very nice, This test platform in my dream. 🙁

Hello Klaus, I host a webcast for VMUG, Hello From My Homelab. I would love to interview you. Would this be something you would be interested in doing? Let me know, looking forward to your reply!

Hello Lindy,

That would be great – please contact me through my contact form.

Thanks,

-Klaus

Thanks for posting this, really awesome setup. It gave me a lot of food for thought. Keep us updated with any changes.

Cheers!

-Kent