As you might know, over this summer I built a physical 3-node VMware vSphere home lab so that I can test-drive VMware vSphere in combination with SQL Server. I see this combination very often these days in my SQL Server consulting engagements. Getting the whole system up and running was quite a challenge, though. In today’s blog posting I want to talk about one specific problem that I ran into in my home lab, CPU incompatibilities, and how to solve it with VMware EVC.

CPU Incompatibilities – what?

Before I go into the details, I want to give you a brief overview of the hardware that I’m using in my (cheap) VMware home lab. The lab itself consists of 3 (older) physical servers running ESXi, plus a switch:

- HP DL180 G6 (16 Core System, 152 GB RAM, SSD based)

- Dell R210 II (2 Core System, 32 GB RAM, SSD based)

- Dell R210 II (2 Core System, 32 GB RAM, SSD based)

- A cheap Ethernet switch with 4 10 Gbit ports (in addition to 24 1 Gbit ports)

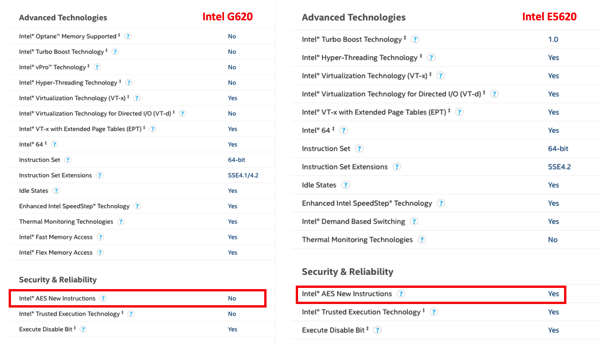

- The HP DL180 G6 server uses an Intel Xeon E5620 CPU, a Westmere processor

- Both Dell R210 II servers use an Intel Pentium G620 CPU, a Sandy Bridge processor

VMware EVC

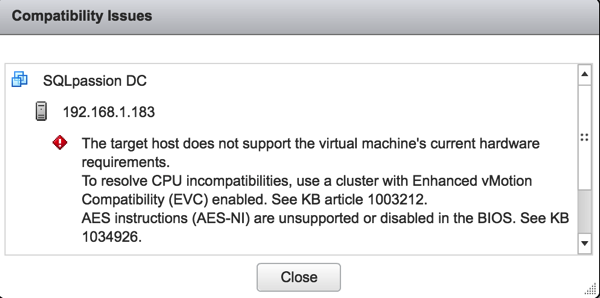

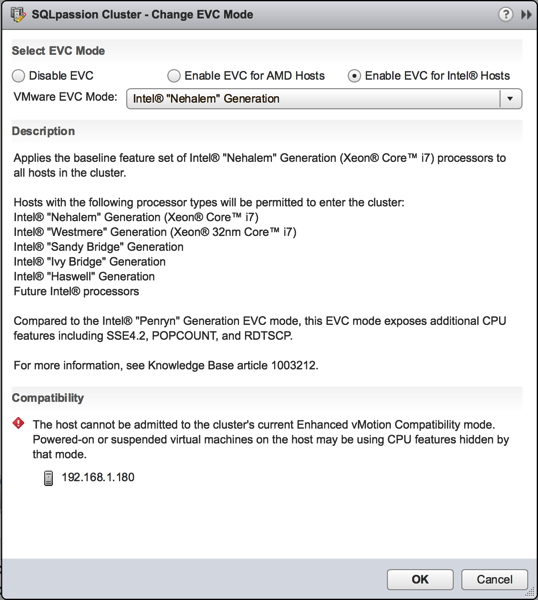

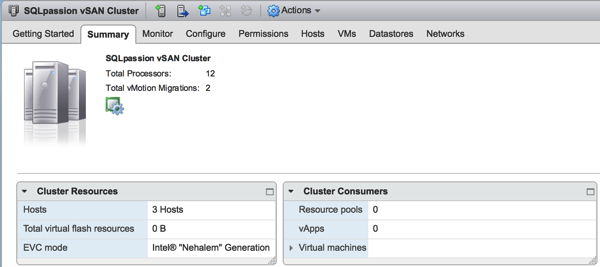

To solve such CPU incompatibility problems, VMware vSphere offers a feature called VMware EVC (Enhanced vMotion Compatibility). The idea behind this feature is that you set your cluster to a specific EVC mode, which represents the lowest common denominator of the CPU features of all your hosts. In my case this is Intel Nehalem. I can’t even use the Intel Westmere EVC mode, because the G620 CPUs lack the necessary AES-NI instructions. So I tried to set the EVC mode of the Cluster to Intel Nehalem, but this didn’t work.
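To make the "lowest common denominator" idea a bit more concrete, here is a small Python sketch. It is a simplified model and not how vSphere computes EVC baselines internally. The mode labels are modelled on vSphere’s EVC mode names, but the per-mode feature lists are a tiny, hypothetical subset of the CPU features that real EVC modes mask. Each mode includes all features of the older modes, so the sketch walks from the oldest to the newest mode and stops at the first one that some host can’t satisfy.

```python
# Simplified, illustrative model of picking an EVC baseline.
# ASSUMPTION: the feature names are a tiny hypothetical subset; real EVC modes mask many more CPUID bits.
EVC_MODES = [
    ("intel-merom", {"sse3", "ssse3"}),
    ("intel-penryn", {"sse4.1"}),
    ("intel-nehalem", {"sse4.2", "popcnt"}),
    ("intel-westmere", {"aes", "pclmulqdq"}),  # AES-NI arrives with Westmere
    ("intel-sandybridge", {"avx", "xsave"}),
]


def mode_requirements():
    """Yield (mode, cumulative feature set); each mode includes all older modes' features."""
    required = set()
    for name, added in EVC_MODES:
        required |= added
        yield name, set(required)


def highest_common_evc_mode(hosts):
    """Return the newest mode whose required features every host provides, or None."""
    best = None
    for name, required in mode_requirements():
        if all(required <= features for features in hosts.values()):
            best = name
        else:
            break  # modes are cumulative, so no newer mode can fit either
    return best


NEHALEM_LEVEL = {"sse3", "ssse3", "sse4.1", "sse4.2", "popcnt"}
hosts = {
    # Xeon E5620 (Westmere): has AES-NI
    "hp-dl180-g6": NEHALEM_LEVEL | {"aes", "pclmulqdq"},
    # Pentium G620 (Sandy Bridge core) without AES-NI; simplified to Nehalem-level features here
    "dell-r210-ii-a": set(NEHALEM_LEVEL),
    "dell-r210-ii-b": set(NEHALEM_LEVEL),
}

print(highest_common_evc_mode(hosts))  # prints intel-nehalem
```

With the HP server alone, the sketch would pick the Westmere mode. Adding the two G620 hosts, which lack AES-NI, pulls the common baseline down to Nehalem.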

The problem was that a VM was running on the HP server, and therefore VMware vSphere prevented me from enabling the EVC mode. Remember: by setting an EVC mode you tell VMware vSphere to expose only a subset of the available CPU instructions, and this can’t work for VMs that are already running and may already use instructions outside that subset. The running VM in question was the vCenter VM, and I couldn’t shut it down, because then no vCenter would be available to change the Cluster EVC mode. This is more or less a chicken-and-egg problem:

- I have a running VM that I must shut down to be able to set the Cluster EVC mode

- But I need that running VM, because it hosts vCenter, and without it I can’t access my Cluster to set the EVC mode

I finally solved the problem with the following steps:

- I shut down all VMs except the vCenter VM on the HP server.

- I created a Cluster and added only the 2 Dell servers to it.

- I enabled the Intel Nehalem EVC mode on the newly created Cluster. This worked without any problems, because no VMs were running on these hosts.

- Next, I cloned the vCenter VM from the HP server onto one of the Dell servers in the Cluster.

- When the clone operation had finished, I shut down the “old” vCenter VM on the HP server.

- Afterwards, I started the cloned vCenter VM on the Dell server.

- Finally, I added the third host, the HP server, to the Cluster. This also worked without any problems, because no VMs were running on that host anymore.
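The chicken-and-egg problem and the workaround can be replayed in a small toy model. This is a hypothetical Python sketch, not the vSphere API. The `Host` and `Cluster` classes, the feature sets, and the admission rule (a host blocks EVC if it has powered-on VMs and CPU features above the baseline) are all simplifying assumptions.

```python
from dataclasses import dataclass, field
from typing import Optional

# ASSUMPTION: hypothetical, heavily simplified feature sets; real EVC modes mask many more CPUID bits.
NEHALEM = frozenset({"sse3", "ssse3", "sse4.1", "sse4.2", "popcnt"})
WESTMERE = NEHALEM | {"aes", "pclmulqdq"}


@dataclass
class Host:
    name: str
    features: frozenset
    running_vms: set = field(default_factory=set)


@dataclass
class Cluster:
    hosts: list = field(default_factory=list)
    evc_baseline: Optional[frozenset] = None

    @staticmethod
    def _conflicts(host, baseline):
        # Assumed rule: powered-on VMs may already use features the baseline would hide.
        return bool(host.running_vms) and not host.features <= baseline

    def enable_evc(self, baseline):
        blocked = [h.name for h in self.hosts if self._conflicts(h, baseline)]
        if blocked:
            raise RuntimeError(f"power off the VMs on {blocked} first")
        self.evc_baseline = baseline

    def add_host(self, host):
        if self.evc_baseline is not None and self._conflicts(host, self.evc_baseline):
            raise RuntimeError(f"{host.name} runs VMs above the EVC baseline")
        self.hosts.append(host)


hp = Host("hp-dl180-g6", WESTMERE, {"vcenter"})
dell_a = Host("dell-r210-ii-a", NEHALEM)
dell_b = Host("dell-r210-ii-b", NEHALEM)

# First attempt: one Cluster with all 3 hosts while vCenter runs on the HP server.
naive = Cluster([hp, dell_a, dell_b])
try:
    naive.enable_evc(NEHALEM)
except RuntimeError as err:
    print("blocked:", err)

# Workaround: EVC on a Dell-only Cluster, move vCenter, then add the now idle HP host.
cluster = Cluster([dell_a, dell_b])
cluster.enable_evc(NEHALEM)              # no running VMs on the Dell hosts
hp.running_vms.discard("vcenter")        # clone finished, old vCenter shut down
dell_a.running_vms.add("vcenter-clone")  # cloned vCenter powered on a Dell host
cluster.add_host(hp)                     # no running VMs left on the HP host
print([h.name for h in cluster.hosts])
```

The key point the model captures is the ordering: EVC is enabled while the Cluster only contains hosts without conflicting running VMs, and the HP host joins only once nothing runs on it anymore.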

Summary

In production environments CPU incompatibilities are normally quite rare, because (hopefully) you use the same CPU models across all your ESXi hosts. But in a home lab it’s quite easy to run into such trouble when you build your lab step by step over time with whatever (cheap) hardware is available. I hope this blog posting gave you an idea of how to solve such problems.

Thanks for your time,

-Klaus