Every time you create a Primary Key constraint in SQL Server, the constraint is (by default) enforced through a Unique Clustered Index. This means that the column (or the combination of columns, if you have defined a composite Primary Key) must contain unique values. As a side effect, your table data is physically sorted by that column or those columns. Let’s have another look at the advantages and disadvantages of Clustered Indexes in SQL Server.

Advantages

One of the biggest advantages of Clustered Tables is that the data is physically sorted by the Clustered Key in your storage subsystem. You can compare a Clustered Table to a traditional phone book: the phone book is clustered/sorted by last name, which means that the last name Aschenbrenner comes before Bauer, and Bauer comes before Meyer. Clustered Tables are therefore completely different from Heap Tables, where you have no physical sorting order.

This is a really huge benefit of Clustered Tables. Imagine you are searching for a specific record in the table, and the column that you restrict in the WHERE clause is the Clustered Key. In that case SQL Server will choose a Clustered Index Seek operator in the execution plan. Seek operations are very efficient, because SQL Server uses a B-tree structure to find the relevant data. If you want to learn more about the internally used B-tree structure, you can also watch my SQL Server Quickie on that topic. Back in 2010 I also wrote several blog posts about that topic.

The complexity of a seek operation is always O(log N), where N is the number of records in the index. It’s the same when you are searching in a phone book for the name Aschenbrenner. You know that this name can only be at the beginning of the phone book, because the phone book is sorted by last name. So you can avoid a scan of the complete phone book, and SQL Server can avoid a complete scan of the leaf level of the Clustered Index.
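To get a feeling for the difference between a scan and a seek, here is a minimal Python sketch. It is only a toy model: a sorted list stands in for the leaf level, and a plain binary search stands in for the B-tree navigation (a real B-tree has a much higher fan-out and works on pages, not on single keys). The function names `scan_steps` and `seek_steps` are made up for this illustration.

```python
def scan_steps(keys, target):
    """Scan: look at every key in order until the target is found."""
    for steps, key in enumerate(keys, start=1):
        if key == target:
            return steps
    return len(keys)


def seek_steps(keys, target):
    """Seek: binary search over the sorted keys, counting the comparisons."""
    lo, hi, steps = 0, len(keys), 0
    while lo < hi:
        mid = (lo + hi) // 2
        steps += 1
        if keys[mid] < target:
            lo = mid + 1
        else:
            hi = mid
    return steps


keys = list(range(1, 1_000_001))      # one million sorted key values
target = 999_999                      # a key near the end of the list

print(scan_steps(keys, target))       # 999999 keys inspected
print(seek_steps(keys, target))       # roughly log2(N), about 20 comparisons
```

The scan has to touch almost every key, while the number of comparisons for the seek grows only logarithmically with the number of keys.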

As long as you have no index fragmentation in your Clustered Index, you will also get sequential I/O when you are accessing the Clustered Index with a scan operation. Index fragmentation means that the logical and physical sorting orders of your pages in the leaf level are not the same. You can fix index fragmentation through Index Rebuild and Index Reorganize operations.
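The idea of "logical order versus physical order" can be sketched in a few lines of Python. This is a simplified model and not the formula that SQL Server uses internally: I assume that every leaf page is identified by its physical position in the data file, and I count a page as out of order when it is not stored directly after its logical predecessor. The function name `fragmentation_percent` is hypothetical.

```python
def fragmentation_percent(physical_page_ids):
    """Share of page-to-page transitions (in logical key order) where the
    next page is NOT the physically following page.

    physical_page_ids: leaf pages listed in logical order, each entry being
    the (assumed) physical position of that page in the data file.
    """
    if len(physical_page_ids) < 2:
        return 0.0
    transitions = list(zip(physical_page_ids, physical_page_ids[1:]))
    out_of_order = sum(1 for prev, cur in transitions if cur != prev + 1)
    return 100.0 * out_of_order / len(transitions)


# Logical and physical order match: a scan reads the pages sequentially.
contiguous = [100, 101, 102, 103]

# Page 101 was split; the new page 104 sits logically between 101 and 102,
# but physically behind page 103.
after_split = [100, 101, 104, 102, 103]

print(fragmentation_percent(contiguous))    # 0.0
print(fragmentation_percent(after_split))   # 50.0
```

In this model a single page split in the middle of the index already breaks two of the four transitions, which is why a scan can no longer read the pages in one sequential pass.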

Whether you have index fragmentation or not depends on your chosen Clustered Key column. As long as you are using an ever-increasing value (like INT IDENTITY, or an OrderDate column), records are inserted at the end of the Clustered Index. This means that you are not introducing fragmentation in your index, because SQL Server is only appending data at the end of your Clustered Index. But in some rare cases this approach will not scale indefinitely. Therefore we now also have to talk about the disadvantages of Clustered Indexes.

Disadvantages

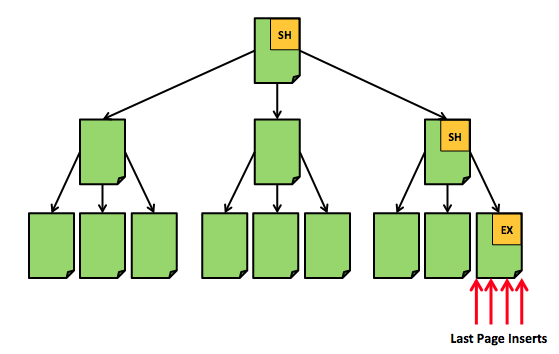

Data inserted only at the end of the Clustered Index can introduce the so-called Last Page Insert Latch Contention, because you have a single hotspot at the end of your Clustered Index, where multiple queries compete against each other, when traversing through the B-tree structure. The following picture shows this phenomenon.
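The hotspot can be illustrated with a small Python sketch. It is a deliberately simple model under my own assumptions: the index already has 1,000 full leaf pages, 64 sessions each insert one record at the same time, and I only look at which leaf page every insert is routed to (latches, page allocations, and everything else are ignored). All names are hypothetical.

```python
import random
from bisect import bisect_right
from collections import Counter

PAGE_COUNT = 1_000
ROWS_PER_PAGE = 100

# First key stored on each leaf page; keys 0..99_999 are already in the index.
page_first_keys = [page * ROWS_PER_PAGE for page in range(PAGE_COUNT)]


def target_page(key):
    """Leaf page whose key range covers `key` (the last page for new maximums)."""
    return max(bisect_right(page_first_keys, key) - 1, 0)


def busiest_page_share(keys):
    """Fraction of the inserts that land on the single most popular page."""
    hits = Counter(target_page(key) for key in keys)
    return max(hits.values()) / len(keys)


next_key = PAGE_COUNT * ROWS_PER_PAGE

# 64 concurrent inserts with ever-increasing keys (IDENTITY-like).
sequential = [next_key + i for i in range(64)]

# 64 concurrent inserts with random keys spread over the existing key range.
rng = random.Random(42)
scattered = [rng.randrange(next_key) for _ in range(64)]

print(busiest_page_share(sequential))   # 1.0 -> every insert hits the last page
print(busiest_page_share(scattered))    # a small fraction of the inserts
```

With ever-increasing keys every session needs the same page at the same time, and that single page is the hotspot described above.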

To overcome this problem you can choose a random Clustered Key for your Clustered Index, because then you are distributing the inserted records across many different places in the Clustered Index. But a random Clustered Key also introduces so-called hard Page Splits: when a record has to go onto a page that is already full, SQL Server has to allocate a new data page, link it somewhere within the leaf level of the Clustered Index, and move part of the existing records over to it. Hard page splits will also have a negative impact on the performance of your transaction log, because logging a hard page split is a lot more work than logging a “normal” INSERT at the end of your Clustered Index, where at most a new page is appended (a soft page split).
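The difference between the two insert patterns can be simulated with a toy leaf level in Python. The model is my own simplification: every page holds at most 50 keys, a full last page that receives a new maximum key just gets a fresh page appended (counted as a soft split), and every other full page is cut in half (counted as a hard split). Transaction logging and physical allocation are not modeled, and the names `load` and `PAGE_CAPACITY` are hypothetical.

```python
import random
from bisect import bisect_right, insort

PAGE_CAPACITY = 50   # assumed number of keys that fit on one leaf page


def load(keys):
    """Insert the keys one by one; return (soft_splits, hard_splits, pages)."""
    pages = [[]]                      # leaf pages in logical order
    soft_splits = hard_splits = 0
    for key in keys:
        # Find the page whose key range covers the new key.
        first_keys = [page[0] for page in pages[1:]]
        idx = bisect_right(first_keys, key)
        page = pages[idx]
        if len(page) < PAGE_CAPACITY:
            insort(page, key)         # enough room, no split needed
        elif idx == len(pages) - 1 and key > page[-1]:
            pages.append([key])       # append a new page at the end
            soft_splits += 1
        else:
            half = PAGE_CAPACITY // 2
            new_page = page[half:]    # move the upper half to a new page
            del page[half:]
            pages.insert(idx + 1, new_page)
            insort(new_page if key >= new_page[0] else page, key)
            hard_splits += 1
    return soft_splits, hard_splits, len(pages)


sequential_keys = list(range(10_000))
random_keys = sequential_keys[:]
random.Random(1).shuffle(random_keys)

print(load(sequential_keys))   # (199, 0, 200): only appends, all pages full
print(load(random_keys))       # many hard splits, and more than 200 pages
```

The ever-increasing keys fill every page completely and never split an existing page. The random keys produce hard splits all over the index and leave the pages only partly filled, so the same data needs more pages.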

As a side effect you are also introducing index fragmentation with a random Clustered Key, because the logical and physical sorting orders of your pages are not the same anymore. The resulting random I/O will kill the performance of your scan operations on traditional rotational storage, because the disk head must move forward and backward on the platters of your drive when reading the various data pages.

Summary

Clustered Indexes scale very well because internally they use a B-tree structure. SQL Server can make effective use of this structure when performing index seek operations on your table. But choosing the right Clustered Key is a hard job, because you have to weigh the advantages and disadvantages of each scenario (ever-increasing value, random value).

For that reason the SQLpassion Online Academy also provides a 1-hour training video, in which I show you both approaches and what you have to be aware of when choosing your Clustered Key.

Thanks for your time,

-Klaus

2 thoughts on “Clustered Indexes – Advantages & Disadvantages”

The most common mistake regarding clustered indexes that I see is using the table’s PK column for it.

This would be fine for “main” tables such as Person or Orders, but not for sub tables that usually have a FK to the main table.

Example: you will rarely query the table OrderPositions by its OrderPositionID (and when you do, you will usually read only a single record), but you will much more often query all positions of a specific order. So it would (usually) make more sense to cluster the table by the OrderID than by its PK (OrderPositionID).

And because a clustered index should always be UNIQUE (if possible; otherwise SQL Server would add a hidden INT column [uniquifier] to it, which contains no useful data), you could add either the PosNo (if there is such a column) or the OrderPositionID as the second field.
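The effect of this choice can be sketched in Python with a handful of made-up rows. This is only a toy model: a sorted list stands in for the clustered table, the sample data is invented, and the names `slots_of_order` and `seek_order` are hypothetical.

```python
from bisect import bisect_left

# Hypothetical OrderPositions rows: (OrderPositionID, OrderID, PosNo)
rows = [
    (1, 10, 1), (2, 11, 1), (3, 10, 2), (4, 12, 1),
    (5, 11, 2), (6, 10, 3), (7, 12, 2), (8, 11, 3),
]

by_pk = sorted(rows)                                   # clustered by OrderPositionID
by_order = sorted(rows, key=lambda r: (r[1], r[2]))    # clustered by (OrderID, PosNo)


def slots_of_order(table, order_id):
    """Positions in the sorted table where the rows of one order are stored."""
    return [slot for slot, row in enumerate(table) if row[1] == order_id]


def seek_order(order_id):
    """One seek to the first position of the order, then a short range read."""
    keys = [(row[1], row[2]) for row in by_order]
    start = bisect_left(keys, (order_id,))
    end = bisect_left(keys, (order_id + 1,))
    return by_order[start:end]


print(slots_of_order(by_pk, 10))      # [0, 2, 5] -> scattered across the table
print(slots_of_order(by_order, 10))   # [0, 1, 2] -> stored next to each other
print(seek_order(11))                 # [(2, 11, 1), (5, 11, 2), (8, 11, 3)]
```

With (OrderID, PosNo) as the key, all positions of one order sit next to each other, and the key stays unique without a uniquifier.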

Good article (as always) Klaus

Keep up the good work!