It happens very often these days: people contact me because they want help designing their High Availability solution for SQL Server. A lot of people are already interested in deploying AlwaysOn Availability Groups. And this demand will grow further in the future, because Availability Groups (in a limited form, as Basic Availability Groups) will be part of the Standard Edition of SQL Server 2016.

As the first step of every customer engagement, I always ask if they want to deploy their High Availability solution on bare metal or in a virtualized environment. And of course a lot of people tell me that everything is virtualized, because it helps them to save money and share hardware resources across multiple virtual machines.

So far, so good. Nothing is wrong with that approach. I really like it. But after this clarification I get really nasty, and I ask my one-million-dollar question:

“On how many physical ESX hosts are you planning to run your High Availability solution?”

The answer to this question is very often quite simple:

“ONE!”

Good-Bye High Availability

Now let’s do a brief recap.

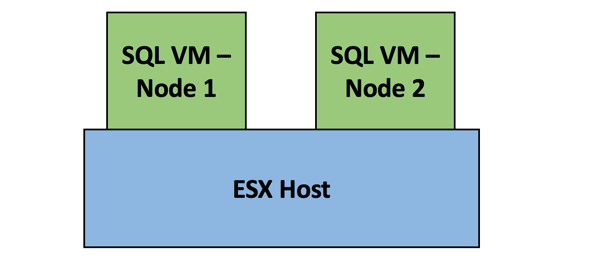

“You want me to make your SQL Server highly available by deploying AlwaysOn Availability Groups, and then you run all the various replicas on ONE physical host? So your solution would look like the following picture.”

“Are you still sure that we are talking about High Availability here? What happens if your physical ESX host is down? In that case your WHOLE “highly available” SQL Server is also down!”

Answer:

“Hmm, yes. We can follow you – somehow. Understood. But our management wants to have a High Availability solution in place for our SQL Server installation. Therefore we want to deploy Availability Groups. And our physical ESX host is always up and running. Don’t worry about that. We need you to help us with our *SQL Server infrastructure*. We are not talking about the virtualization layer!”

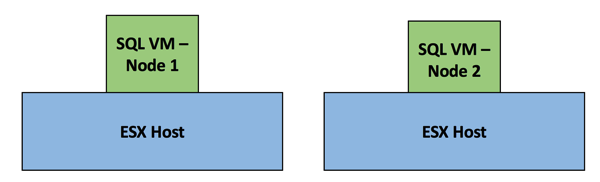

OK, at that point I’m out of this game, because the whole proposed solution doesn’t make any sense. If you deploy a highly available SQL Server solution, you have to make sure that the underlying layers are highly available as well, including your virtualization layer! If you are using virtualization you need AT LEAST 2 physical hosts, otherwise we are not talking about high availability. Let’s have a look at the following picture.

Now every replica runs on a separate ESX host. When one ESX host is down, it doesn’t matter, because you still have the other host, which runs the other replica of your Availability Group.

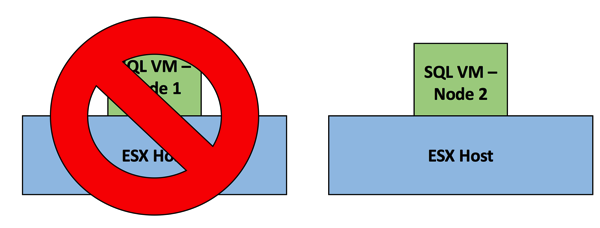

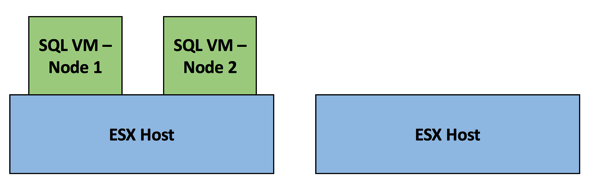
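The difference between the two layouts can be sketched in a few lines of Python. This is only a toy model and not part of any VMware or SQL Server tooling: the function `surviving_replicas` and the replica and host names are made up for illustration. It simply answers the question "which replicas are still running after one ESX host goes down?"

```python
from typing import Dict, Set


def surviving_replicas(placement: Dict[str, str], failed_host: str) -> Set[str]:
    """Return the replicas that are still running after `failed_host` goes down."""
    return {replica for replica, host in placement.items() if host != failed_host}


# Hypothetical layouts: replica VM name -> physical ESX host name.
single_host = {"sql-replica-1": "esx-01", "sql-replica-2": "esx-01"}
two_hosts = {"sql-replica-1": "esx-01", "sql-replica-2": "esx-02"}

# All replicas on one host: losing that host leaves nothing running.
print(surviving_replicas(single_host, "esx-01"))  # set()

# One replica per host: losing one host still leaves the other replica.
print(surviving_replicas(two_hosts, "esx-01"))  # {'sql-replica-2'}
```

With everything on one host, the set of survivors is empty, which is exactly the "highly available" setup that is not highly available at all.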

But even with 2 physical ESX hosts, your ESX admin can live-migrate all of your replicas onto the same node without you noticing it.

So you also have to educate your ESX admins so that this specific scenario doesn’t occur. Oh, and trust me: I have already seen this many times when I have performed a SQL Server Health Check.
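A check for this scenario could look roughly like the following sketch. It assumes you already have, from whatever inventory source you trust, a mapping of replica VM to the physical host it currently runs on. The function `colocated_replicas` and all names in it are hypothetical; how you obtain the mapping is deliberately left out.

```python
from collections import defaultdict
from typing import Dict, List


def colocated_replicas(placement: Dict[str, str]) -> Dict[str, List[str]]:
    """Map every host that runs more than one replica to the replicas on it."""
    by_host: Dict[str, List[str]] = defaultdict(list)
    for replica, host in placement.items():
        by_host[host].append(replica)
    # Only hosts with two or more replicas are a problem.
    return {
        host: sorted(replicas)
        for host, replicas in by_host.items()
        if len(replicas) > 1
    }


# Hypothetical state after a live migration moved both replicas to esx-02.
after_migration = {"sql-replica-1": "esx-02", "sql-replica-2": "esx-02"}

problems = colocated_replicas(after_migration)
if problems:
    for host, replicas in problems.items():
        print(f"WARNING: {host} runs multiple replicas: {', '.join(replicas)}")
```

An empty result means every replica sits on its own host. Anything else deserves a conversation with your ESX admins.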

Summary

If you are thinking about or implementing a High Availability solution for your SQL Server installation, please do me a favour and also think about all the other layers, especially your virtualization layer. Running all your replicas on the same physical ESX host is not really a High Availability option. It looks nice, but it doesn’t give you real high availability. As soon as your ESX host is down, your Availability Group is down. Please bear that in mind the next time you design your High Availability solution.

Thanks for your time,

-Klaus

4 thoughts on “How to ruin your High Availability solution by using Virtualization”

Hello Klaus,

In a VMware vSphere virtual environment, you have to define “anti-affinity” rules in the DRS engine (Distributed Resource Scheduler) to be sure that 2 AlwaysOn replicas NEVER run on the same physical host.

Hello Andrea,

Thanks for your comment.

As long as this configuration is done, it is fine… 😉

-Klaus

Good article Klaus, thanks. We are migrating our infrastructure to 4 ESXi hosts over the next 4 months. I’ll let you know if we need some help.

Al

Management kicked and screamed when we told them we needed 2 SAN switches for our first SAN because it was going to drive up the cost of the project. We explained how a single enclosure does not an HA solution make. Kicking and screaming, they ultimately agreed with us and we got our 2 SAN switches. Our servers’ dual SAN paths were meticulously planned out to ensure redundancy between the physical switches.

Six months later management thanked us for our persistence when one of the SAN switches died due to a hardware failure. Had we not insisted on 2 switches we would have suffered a complete outage on many of our tier 1 systems. Instead we suffered slightly degraded I/O performance for a few hours while we waited for the new switch.

Great post, Klaus!