In today’s blog posting I want to talk in more detail about how In-Memory OLTP writes log records into the transaction log. As you might know, In-Memory OLTP offers you 2 durability options for your Memory Optimized Tables:

- SCHEMA_ONLY

- SCHEMA_AND_DATA

I don’t want to talk more about SCHEMA_ONLY today, because with this option no logging happens at all in the transaction log (your data doesn’t survive a SQL Server restart). Today we will concentrate on the durability option SCHEMA_AND_DATA.

SCHEMA_AND_DATA

With the durability option SCHEMA_AND_DATA, SQL Server always has to log your transactions into the transaction log, because every In-Memory OLTP transaction must be durable. It’s the same basic concept as with traditional Disk Based Tables. But writing to the transaction log is much more optimized with In-Memory OLTP than with traditional tables. In-Memory OLTP is designed for multiple concurrent log streams (which are not yet implemented in SQL Server 2014), and it logs only the logical change that a transaction has made.

What do I mean by logical transaction? Imagine you have a traditional Clustered Table with 5 Non-Clustered Indexes defined on it. If you insert a record into that table, SQL Server has to log the insert into the Clustered Index, and also the 5 additional inserts into the Non-Clustered Indexes. The more Non-Clustered Indexes you define on your table, the more SQL Server has to log. And SQL Server can be only as fast as the transaction log.

Things change with In-Memory OLTP. Here SQL Server just logs that a logical modification has happened in your transaction. SQL Server does not log the modifications that happened in your Hash- or Range-Indexes. So every logical INSERT/UPDATE/DELETE is described only once in the transaction log, no matter how many indexes the table has. As a result, In-Memory OLTP writes less data to your transaction log, and therefore your transactions can be committed much faster.

Let’s prove it!

I want to show you with a simple example how much data SQL Server writes to your transaction log when you insert 10000 records first into a traditional Disk Based Table and then into a Memory Optimized Table. The following code creates a simple table, and inserts the 10000 records in a while loop. Afterwards I use the (undocumented) system function sys.fn_dblog to look into the transaction log.

-- Create a Disk Based table

CREATE TABLE TestTable_DiskBased

(

Col1 INT NOT NULL PRIMARY KEY,

Col2 VARCHAR(100) NOT NULL INDEX idx_Col2 NONCLUSTERED,

Col3 VARCHAR(100) NOT NULL

)

GO

-- Insert 10000 records into the table

DECLARE @i INT = 0

BEGIN TRANSACTION

WHILE (@i < 10000)

BEGIN

INSERT INTO TestTable_DiskBased VALUES (@i, @i, @i)

SET @i += 1

END

COMMIT

GO

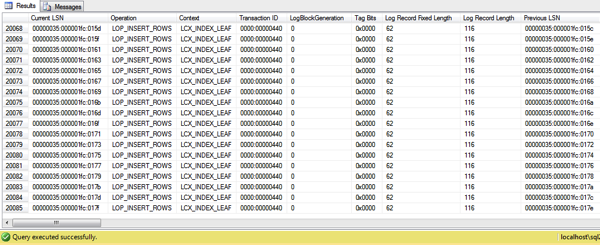

-- SQL Server logged more than 20000 log records, because we have 2 indexes

-- defined on the table (Clustered Index, Non-Clustered Index)

SELECT * FROM sys.fn_dblog(NULL, NULL)

WHERE PartitionId IN

(

SELECT partition_id FROM sys.partitions

WHERE object_id = OBJECT_ID('TestTable_DiskBased')

)

GO

As you can see from the output of the system function, you have a little bit more than 20000 log records. This makes complete sense, because we have 2 indexes defined on that table (1 Clustered Index, 1 Non-Clustered Index).
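To see how these log records are spread across the two indexes, the same output can be grouped by allocation unit. This is only a minimal sketch: it assumes the AllocUnitName and [Log Record Length] columns that the undocumented function sys.fn_dblog returns on SQL Server 2014, and both may change between versions.

```sql
-- Count log records and logged bytes per index and operation
SELECT
    AllocUnitName,
    Operation,
    COUNT(*) AS LogRecordCount,
    SUM([Log Record Length]) AS LoggedBytes
FROM sys.fn_dblog(NULL, NULL)
WHERE PartitionId IN
(
    SELECT partition_id FROM sys.partitions
    WHERE object_id = OBJECT_ID('TestTable_DiskBased')
)
GROUP BY AllocUnitName, Operation
ORDER BY AllocUnitName, Operation
GO
```

If the explanation above holds, most of the rows should be insert operations, split roughly evenly between the Clustered Index and idx_Col2.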

Now let’s have a look at how the logging changes with a Memory Optimized Table. The following listing shows the code that is necessary to prepare our database for In-Memory OLTP: we just add a new Memory Optimized File Group, and add a container to it.

-- Add MEMORY_OPTIMIZED_DATA filegroup to the database.

ALTER DATABASE InMemoryOLTP

ADD FILEGROUP InMemoryOLTPFileGroup CONTAINS MEMORY_OPTIMIZED_DATA

GO

USE InMemoryOLTP

GO

-- Add a new file to the previously created file group

ALTER DATABASE InMemoryOLTP ADD FILE

(

NAME = N'InMemoryOLTPContainer',

FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL12.SQL2014\MSSQL\DATA\InMemoryOLTPContainer'

)

TO FILEGROUP [InMemoryOLTPFileGroup]

GO
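If you want to check that the file group and its container were really created, a query along the following lines should work. It is a sketch that relies on the catalog views sys.filegroups and sys.database_files, where a Memory Optimized File Group is reported with the type 'FX'; the column aliases are my own.

```sql
-- List the Memory Optimized File Group and its containers
SELECT
    fg.name AS FileGroupName,
    fg.type_desc AS FileGroupType,
    df.name AS ContainerName,
    df.physical_name AS ContainerPath
FROM sys.filegroups AS fg
LEFT JOIN sys.database_files AS df
    ON df.data_space_id = fg.data_space_id
WHERE fg.type = 'FX'
GO
```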

In the next step I create a new Memory Optimized Table. Here I choose a bucket count of 16384 on the hash index, which is larger than the 10000 records that I insert, to keep the number of hash collisions low. In addition I create 2 Range Indexes on the columns Col2 and Col3.

-- Creates a Memory Optimized table

CREATE TABLE TestTable_MemoryOptimized

(

Col1 INT NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 16384),

Col2 VARCHAR(100) COLLATE Latin1_General_100_Bin2 NOT NULL INDEX idx_Col2,

Col3 VARCHAR(100) COLLATE Latin1_General_100_Bin2 NOT NULL INDEX idx_Col3

)

WITH

(

MEMORY_OPTIMIZED = ON,

DURABILITY = SCHEMA_AND_DATA

)

GO
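Whether the chosen bucket count really fits your data can be checked once the table contains records. The following sketch uses the DMV sys.dm_db_xtp_hash_index_stats; the column aliases are my own. Short average chain lengths and a reasonable number of empty buckets suggest that the bucket count is large enough.

```sql
-- Inspect bucket usage and chain lengths of the hash index
SELECT
    i.name AS IndexName,
    s.total_bucket_count,
    s.empty_bucket_count,
    s.avg_chain_length,
    s.max_chain_length
FROM sys.dm_db_xtp_hash_index_stats AS s
INNER JOIN sys.indexes AS i
    ON i.object_id = s.object_id AND i.index_id = s.index_id
WHERE s.object_id = OBJECT_ID('TestTable_MemoryOptimized')
GO
```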

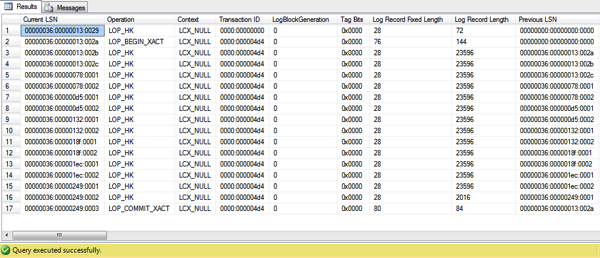

In total we have 3 indexes on that table (1 Hash Index and 2 Range Indexes). If you insert 10000 records into a traditional table with 3 indexes, it would generate around 30000 log records: one log record for every insert in every index. But when you insert the 10000 records into the Memory Optimized Table and look again at the transaction log, you can see that the 10000 inserts have generated just a few log records, 17 in our case!
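The insert itself can be done with the same loop as before. The following listing is a sketch under one assumption that is not part of the original example: it enables the database option MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT, because accessing a Memory Optimized Table inside an explicit transaction under READ COMMITTED is otherwise rejected. The final query counts the log records per operation for the whole transaction log, so it also includes the records of the Disk Based Table from above.

```sql
-- Assumption: elevate access to Memory Optimized Tables to SNAPSHOT isolation,
-- so that the explicit transaction below is allowed
ALTER DATABASE InMemoryOLTP SET MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT = ON
GO

-- Insert 10000 records into the Memory Optimized Table
DECLARE @i INT = 0
BEGIN TRANSACTION
WHILE (@i < 10000)
BEGIN
    INSERT INTO TestTable_MemoryOptimized VALUES (@i, @i, @i)
    SET @i += 1
END
COMMIT
GO

-- Count the log records per operation
SELECT
    Operation,
    COUNT(*) AS LogRecordCount,
    SUM([Log Record Length]) AS LoggedBytes
FROM sys.fn_dblog(NULL, NULL)
GROUP BY Operation
ORDER BY LogRecordCount DESC
GO
```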

The magic happens inside the LOP_HK log record. Inside this specific log record In-Memory OLTP bundles multiple changes to your Memory Optimized Table. You can also break down LOP_HK log records by using the system function sys.fn_dblog_xtp:

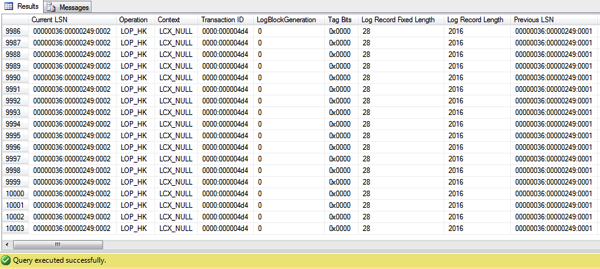

-- Let's look into a LOP_HK log record

SELECT * FROM sys.fn_dblog_xtp(NULL, NULL)

WHERE [Current LSN] > '00000036:00000013:0028' AND Operation = 'LOP_HK'

GO

As you can see from the picture, sys.fn_dblog_xtp breaks the few LOP_HK log records down into around 10000 entries: one entry for every logical insert that has happened on this table.
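To summarize the breakdown instead of scrolling through it, you can group it by the logical operation. This is a sketch that assumes the column operation_desc in the output of the undocumented function sys.fn_dblog_xtp, so the column name may differ on other versions.

```sql
-- Count the logical operations that are bundled inside the LOP_HK log records
SELECT
    operation_desc,
    COUNT(*) AS EntryCount
FROM sys.fn_dblog_xtp(NULL, NULL)
WHERE Operation = 'LOP_HK'
GROUP BY operation_desc
ORDER BY EntryCount DESC
GO
```

The row inserts should dominate the result, next to a few entries that mark the begin and the commit of the transaction.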

Summary

In-Memory OLTP provides you with amazing performance improvements, because it is based on completely new principles like MVCC and Lock-Free Data Structures. In addition it also generates fewer transaction log records, because only logical changes are logged into the transaction log. I hope that this blog posting has given you a better understanding of how In-Memory OLTP improves your transaction log throughput.

Thanks for your time,

-Klaus