In last week’s blog posting I talked about how temp tables can cause recompilations in SQL Server. As mentioned at the very end of that posting, today I want to concentrate a little bit more on Table Variables, which can avoid the expensive overhead of recompilations. Let’s have a more detailed look at them.

Table Variables

A table variable is always scoped to the batch submitted to SQL Server in which it is declared. When you reference a table variable outside of that batch, you get back an error message. That’s the first big difference compared to temp tables. The following code shows how you can create and use table variables, again in the context of a simple stored procedure.

    CREATE PROCEDURE DemonstrateTableVariablesNoRecompiles
    AS
    BEGIN
        -- Declare the table variable; it only exists while this procedure runs
        DECLARE @tempTable TABLE
        (
            ID INT IDENTITY(1, 1) PRIMARY KEY,
            FirstName CHAR(4000),
            LastName CHAR(4000)
        )

        -- Fill the table variable with 1000 rows
        INSERT INTO @tempTable (FirstName, LastName)
        SELECT TOP 1000 name, name FROM master.dbo.syscolumns

        SELECT * FROM @tempTable
    END
    GO
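The batch scoping described above can also be seen outside of a stored procedure. The following is only a minimal sketch: the variable name `@scopeDemo` is made up for illustration, and `GO` is the batch separator of client tools such as Management Studio or sqlcmd, not a T-SQL statement.

```sql
-- Batch 1: declare and use the table variable (hypothetical name @scopeDemo)
DECLARE @scopeDemo TABLE (ID INT PRIMARY KEY);

INSERT INTO @scopeDemo (ID) VALUES (1), (2);

-- Works, because we are still in the batch that declared the variable
SELECT ID FROM @scopeDemo;
GO

-- Batch 2: the variable from batch 1 is gone.
-- This statement fails with a "Must declare the table variable" error.
SELECT ID FROM @scopeDemo;
GO
```

A temp table created in the first batch would still be visible in the second one, because it lives until it is dropped or the session ends.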

The nice thing about table variables is that they don’t cause any recompilations. When you execute the stored procedure and monitor recompilations with the Extended Event session from last week, you will see that no recompilations happen.
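If you don’t have last week’s session at hand, something along the following lines should do for a quick check. This is a sketch and not the session from the previous posting: the session name `TrackRecompilesSketch` is made up, and the ring buffer target is just one convenient choice.

```sql
-- Hypothetical session name; captures statement-level recompilations
CREATE EVENT SESSION TrackRecompilesSketch ON SERVER
ADD EVENT sqlserver.sql_statement_recompile
(
    ACTION (sqlserver.sql_text, sqlserver.session_id)
)
ADD TARGET package0.ring_buffer;
GO

ALTER EVENT SESSION TrackRecompilesSketch ON SERVER STATE = START;
GO

-- Run the procedure a few times while the session is active
EXEC DemonstrateTableVariablesNoRecompiles;
GO

-- Read the captured events (as XML) from the ring buffer
SELECT CAST(t.target_data AS XML) AS recompile_events
FROM sys.dm_xe_sessions AS s
INNER JOIN sys.dm_xe_session_targets AS t
    ON t.event_session_address = s.address
WHERE s.name = N'TrackRecompilesSketch'
  AND t.target_name = N'ring_buffer';
GO

-- Clean up
ALTER EVENT SESSION TrackRecompilesSketch ON SERVER STATE = STOP;
DROP EVENT SESSION TrackRecompilesSketch ON SERVER;
GO
```

On a busy server you would normally add a filter (for example on the session ID) so that the ring buffer isn’t flooded by recompilations from other sessions.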

Why is this possible with table variables? First of all a table variable is just a variable – as the name implies. This means that you don’t change your database schema when you define your table variable. Therefore recompilations based on database schema changes can be avoided. And in addition a table variable has no statistics. Therefore statistics don’t have to be maintained, and the second cause of recompilations just disappears.

Both things sound great at first, but we will find huge drawbacks when we look further. Let’s have a look at them. A table variable is just a variable – almost! A table variable is still persisted in TempDb! Yes, you read correctly: when you work with a table variable, pages are allocated in TempDb, and physical I/O can be involved. This is very easy to prove with the Dynamic Management View sys.dm_db_session_space_usage, which tracks TempDb usage on a session level. Let’s have a look at the following code:

    -- Create a table variable
    DECLARE @tempTable TABLE
    (
        ID INT IDENTITY(1, 1) PRIMARY KEY,
        FirstName CHAR(4000),
        LastName CHAR(4000)
    )

    -- Insert 4 records into the table variable
    INSERT INTO @tempTable (FirstName, LastName) VALUES
    (
        'Klaus',
        'Aschenbrenner'
    ),
    (
        'Philip',
        'Aschenbrenner'
    ),
    (
        'Klaus',
        'Aschenbrenner'
    ),
    (
        'Philip',
        'Aschenbrenner'
    )

    -- Retrieve the data from the table variable.
    -- The execution plan estimates 1 row.
    SELECT * FROM @tempTable
    GO

    -- Review the space used in TempDb.
    -- Our table variable currently needs 5 pages in TempDb.
    -- The 5 needed pages from the table variable are already marked for deallocation (column "user_objects_dealloc_page_count")
    SELECT * FROM sys.dm_db_session_space_usage
    WHERE session_id = @@SPID
    GO

As you can see from the output of the DMV, the table variable needed 5 pages in TempDb, which were allocated. Because the table variable is already out of scope, these 5 pages are already marked for deallocation. Be aware of that side-effect!
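To make the output of the DMV easier to read, you can restrict it to the allocation counters. The column names below are the ones the DMV exposes; which of them you look at is just a suggestion.

```sql
-- Run this in its own batch, after the batch that used the table variable.
-- The session-level counters only include tasks that have already completed.
SELECT
    session_id,
    user_objects_alloc_page_count,       -- pages allocated for user objects (temp tables, table variables)
    user_objects_dealloc_page_count,     -- pages of user objects already deallocated
    internal_objects_alloc_page_count,   -- pages for internal objects (e.g. sort or hash work areas)
    internal_objects_dealloc_page_count
FROM sys.dm_db_session_space_usage
WHERE session_id = @@SPID;
GO
```

That is also the reason for the `GO` before the DMV query in the example above: as long as the batch with the table variable is still running, its allocations are not yet part of the session-level counters.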

Table variables also have no statistics. Therefore no recompilations happen here. But as a side-effect the Query Optimizer just estimates 1 row when it compiles the plan, regardless of how many rows the table variable holds at runtime. And that can be very, very bad. Imagine you join your table variable to another table in your database. In that case the Query Optimizer will introduce the Nested Loop Join operator in the execution plan, and will reference the table variable as the outer table, because of the estimation of 1 row. If you return in reality 10000 or even more rows, the whole execution plan isn’t really optimal anymore. Let’s have a look at the following example:

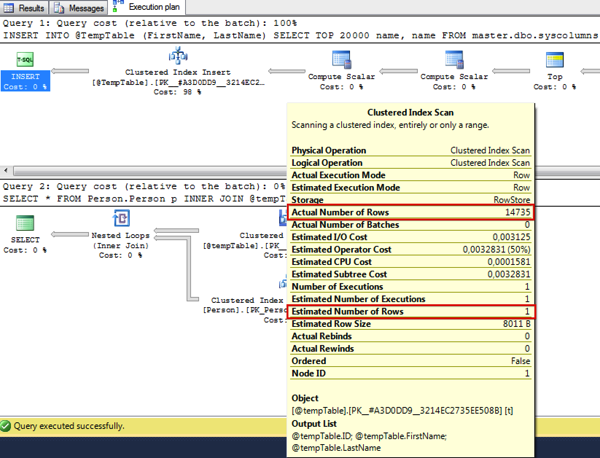

    CREATE PROCEDURE BadPerformingQuery
    AS
    BEGIN
        DECLARE @tempTable TABLE
        (
            ID INT IDENTITY(1, 1) PRIMARY KEY,
            FirstName CHAR(4000),
            LastName CHAR(4000)
        )

        INSERT INTO @tempTable (FirstName, LastName)
        SELECT TOP 20000 name, name FROM master.dbo.syscolumns

        -- The physical Join Operator will be a Nested Loop,
        -- because Nested Loop is optimized for 1 row in the outer loop.
        SELECT * FROM Person.Person p
        INNER JOIN @tempTable t ON t.ID = p.BusinessEntityID
    END
    GO

When you have a more detailed look at the Clustered Index Scan operator, you can see that the estimated number of rows is just 1, which is nowhere near the actual number of rows returned by the operator.
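If you prefer to check the numbers without the graphical execution plan, you can let SQL Server return the actual plan as XML. This is just one way to do it, and it assumes that the procedure from above exists in a database that contains the Person.Person table (for example AdventureWorks).

```sql
-- Return the actual execution plan as XML for every executed statement
SET STATISTICS XML ON;
GO

EXEC BadPerformingQuery;
GO

SET STATISTICS XML OFF;
GO
```

In the returned plan XML, compare the EstimateRows attribute of the scan on the table variable with the ActualRows values in its runtime counters.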

You can fix that wrong cardinality estimation by using a Statement-Level Recompilation that was introduced back with SQL Server 2005.

    -- Use a statement-level recompilation to fix the problem with the
    -- cardinality estimation.
    ALTER PROCEDURE BadPerformingQuery
    AS
    BEGIN
        DECLARE @tempTable TABLE
        (
            ID INT IDENTITY(1, 1) PRIMARY KEY,
            FirstName CHAR(4000),
            LastName CHAR(4000)
        )

        INSERT INTO @tempTable (FirstName, LastName)
        SELECT TOP 20000 name, name FROM master.dbo.syscolumns

        -- OPTION (RECOMPILE) recompiles this statement on every execution.
        -- The optimizer then sees the actual number of rows in @tempTable
        -- and is no longer tied to the estimation of 1 row.
        SELECT * FROM Person.Person p
        INNER JOIN @tempTable t ON t.ID = p.BusinessEntityID
        OPTION (RECOMPILE)
    END
    GO

But this approach is a little bit counter-productive, because you again introduce a recompilation overhead that you wanted to avoid with the use of table variables…

Summary

With table variables you can avoid the overhead of recompilations in SQL Server – with some side-effects. The largest one is the wrong cardinality estimation of just 1 row. Therefore table variables only make sense when you deal with a small number of rows, because then the wrong cardinality estimation doesn’t really matter and doesn’t affect your performance. But with a larger set of rows it would hurt your performance because of the inefficient execution plan that is generated.

As a general rule-of-thumb you should use temp tables for a large amount of data, and table variables with a small amount of data. But you really have to benchmark it for your own workload, to decide when a temp table and when a table variable makes sense.
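For comparison, here is how the join example from above could look with a temp table. Take it as a sketch for your own benchmarks and not as a recommendation: the procedure name `TempTableVariantSketch` is made up, and the procedure again assumes a database with the Person.Person table.

```sql
-- Hypothetical counterpart to BadPerformingQuery, using a temp table
CREATE PROCEDURE TempTableVariantSketch
AS
BEGIN
    CREATE TABLE #tempTable
    (
        ID INT IDENTITY(1, 1) PRIMARY KEY,
        FirstName CHAR(4000),
        LastName CHAR(4000)
    );

    INSERT INTO #tempTable (FirstName, LastName)
    SELECT TOP 20000 name, name FROM master.dbo.syscolumns;

    -- A temp table has statistics, so the cardinality estimation
    -- is not fixed to 1 row here.
    SELECT * FROM Person.Person p
    INNER JOIN #tempTable t ON t.ID = p.BusinessEntityID;
END
GO
```

The price for the better estimation is the recompilation behavior of temp tables described in last week’s posting, so run both variants against your own workload before you decide.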

Thanks for your time,

-Klaus

5 thoughts on “Recompilations, Part 2”

Once Again, a great post, Klaus.

I think you should also mention that Table Variables do not allow for parallelism, and that may also add overhead when using that approach.

Thanks for the posts.

Best regards

Paw

Hello Paw,

Thanks for your great feedback and your comment.

Yes, a statement that modifies a Table Variable can’t get a parallel plan – one of the drawbacks. But when you have a huge amount of data (in the case of a parallel plan), you should use a Temp Table anyway.

Thanks,

-Klaus

I wish more people knew about the often devastating limitations and gotchas of table variables. I can count on one hand the number of times at clients since TVs first came out that the primary issue was recompilation blocking that was fixed by replacing temporary tables with table variables. But I cannot count how many times the primary cause of bad performance was the use of table variables and the issue was fixed by switching to temporary tables. It is at least hundreds of times!!

Hello Kevin,

Thanks for your comment.

I can only agree on that one with you.

I have also seen both extremes in production databases: only temporary tables, and only table variables.

Both approaches on their own can lead to serious performance problems…

Thanks,

-Klaus

Good post! Something we battle with very frequently.

Wasn’t the 1 row estimation fixed in 2012 SP2 however?

http://blogs.msdn.com/b/psssql/archive/2014/08/11/if-you-have-queries-that-use-table-variables-sql-server-2012-sp2-can-help.aspx