Today I have encountered a very simple blog posting where someone was inserting 1 millions of rows into a Hekaton Hash Index of 2048 buckets. Therefore I was running some tests how Hash Collisions in the Hash Buckets will affect the throughput of your Hekaton workload – and the results are very, very interesting. In the first step I want to simple describe what a Hash Collision is.

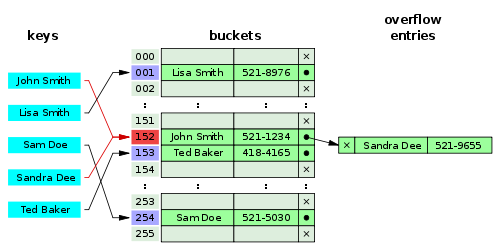

As you might know (hopefully), Hekaton tables are implemented as Hash Indexes in SQL Server 2014. Wikipedia has a very nice page about the basics of Hash Tables in general, which are used as the basic foundation of Hash Indexes. In a nutshell, SQL Server is applying a Hash Function to the key value of your Hash Index, and the result of that Hash Function determines in which Hash Bucket your row will be stored finally. If multiple key values are hashed to the same value, SQL Server inserts multiple rows in that specific Hash Bucket, and just chains the multiple entries in that Hash Bucket. See the following picture (taken from Wikipedia):

As you can see from this picture, the keys “John Smith” and “Sandra Dee” are hashed to the same bucket – in our case, the bucket 152. This means that both rows are stored in the same Hash Bucket, which affects the INSERT performance, and also the SELECT performance of your queries. During the INSERT, SQL Server has to maintain the linked list, and during SELECT queries SQL Server has to scan the linked list.

After that introduction, let’s demonstrate the performance impact of Hash Collisions with a simple example. Let’s create a database with a simple Hekaton table:

-- Create new database CREATE DATABASE HashCollisions GO --Add MEMORY_OPTIMIZED_DATA filegroup to the database. ALTER DATABASE HashCollisions ADD FILEGROUP HekatonFileGroup CONTAINS MEMORY_OPTIMIZED_DATA GO USE HashCollisions GO -- Add a new file to the previous created file group ALTER DATABASE HashCollisions ADD FILE ( NAME = N'HekatonContainer', FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\DATA\HashCollisionsContainer' ) TO FILEGROUP [HekatonFileGroup] GO -- Create a simple table CREATE TABLE TestTable ( Col1 INT NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 1048576), Col2 INT NOT NULL, Col3 INT NOT NULL ) WITH ( MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY ) GO

As you can see from the source code, I’m using here just a Hash Bucket count of 1024 – not too many buckets, when we insert afterwards 1 million of records. In the next step I’m also creating a native compiled Stored Procedure, so that I can go wicked fast with Hekaton:

-- Create a native compiled Stored Procedure

CREATE PROCEDURE InsertTestData

WITH

NATIVE_COMPILATION,

SCHEMABINDING,

EXECUTE AS OWNER

AS

BEGIN

ATOMIC WITH

(

TRANSACTION

ISOLATION LEVEL = SNAPSHOT,

LANGUAGE = 'us_english'

)

DECLARE @i INT = 0

WHILE @i < 1000000

BEGIN

INSERT INTO dbo.TestTable (Col1, Col2, Col3) VALUES (@i,@i, @i)

SET @i += 1

END

END

GO

As you can see, I’m just inserting here 1 million of records in a simple loop. When I’m running this Stored Procedure in a VM with 1 CPU, and 2 GB RAM, the execution time is around 24 seconds - awefully slow. When you are doubling the Hash Bucket count over and over again till you have 1048576 buckets, you can see very nicely that the performance increases as you create more and more Hash Buckets. With 1048576 buckets, the execution of the same Stored Procedure just takes around 600ms! This is huge differentiation compared to the first test run with just 1024 buckets. You can also check with the DMV sys.dm_db_xtp_hash_index_stats how many buckets you have in your Hash Index.

So the moral of this story? Choose your Hash Bucket count for Hekaton Hash Indexes *very, very* wisely, as they are really impacting the performance of your workload! The optimal number of buckets is just the number of distinct values that you are storing in the Hash Index - with some additional free space, just to be on the safe side 🙂 You also have to make sure not to choose the bucket count too high, because otherwise you are just waisting memory. So again - as almost everything in SQL Server - it depends on your workload, besides Database Shrinks 😉

Thanks for reading

-Klaus