My complete last week was dedicated to XTP (Extreme Transaction Processing), better known as Hekaton, that is introduced with SQL Server 2014. My main goal of the week was to get a better understanding of Hekaton, the current limitations, and the scalability of this amazing new In-Memory technology. This blog posting covers the following things about Hekaton:

- Main Overview

- Scalability

- Limitations

1. Main Overview

Let’s start with a brief overview of XTP. The main goal of an In-Memory technology like XTP is very easy: use the hardware of our current server systems as efficient as possible. When we look nowadays at the hardware of modern server systems you can see the following problems/limitations:

- Traditional (rotational) storage is very slow, and enterprise ready SSD storage is very expensive. On the other hand main memory (RAM) is very cheap, it just costs you a few 100 dollars to hit the 64 GB limit of the Standard Edition of SQL Server.

- The CPU speed is not scaling anymore. We are currently stuck at around 3 – 4 GHz, anything more is not possible (expect when you are over-clocking your systems)

- Traditional RDBMS systems can’t scale linearly, mainly because of the internal Locking, Blocking, and Latching (In-Memory locking of data structures, when they are accessed for reading and writing) mechanism

So to overcome these limitations you would need a technology that:

- Uses the RAM to store data entirely In-Memory to overcome the speed limits of traditional rotational hard-disks

- Executes RDBMS queries as fast as possible and with the least possible amount of CPU instructions to use the current limited clock speed of CPUs as cost-effective as possible

- Avoids entirely Locking/Blocking, and Latching when executing a Read/Write workload against your RDMBS

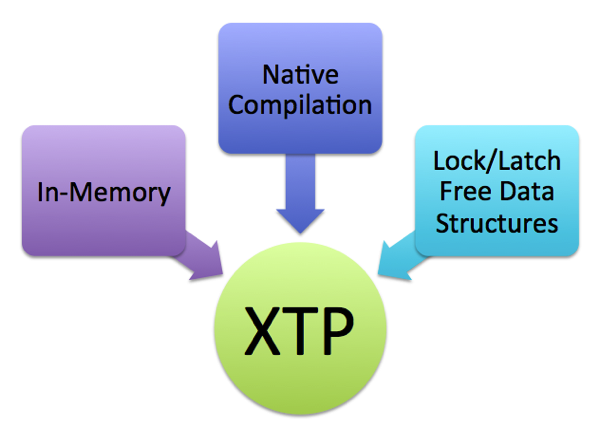

These 3 points are just the 3 main pillars of Extreme Transaction Processing in SQL Server 2014:

With XTP you are able to cache complete tables in in memory (so-called Memory Optimized Tables), Stored Procedures can be compiled down to native C code, and for Memory Optimized Tables the complete Locking/Blocking, and Latching mechanism disappears, because XTP is based on the principles of MVCC (Multi-Version Concurrency Control). Let’s have a more detailed look on those 3 pillars.

In-Memory Storage

RAM for server systems is incredibly cheap. You just need a few hundred dollars to equip your server with 64 GB, and you have already hit the limit that can be used with the Standard Edition of SQL Server. Therefore XTP tables (In-Memory Optimized Tables) are completely stored in RAM. From a SQL Server perspective the whole data of the In-Memory Optimized tables live in a FILESTREAM File Group, and during the startup of SQL Server they read the data from the file group, and rebuild all your indexes on the fly.

This has also a huge impact on your RTO (Recovery Time Objective), because your database is only in the ONLINE state, as soon as ALL your indexes are rebuild during startup. The speed of the storage system where your FILESTREAM File Group is stored, has therefore a direct influence on your RTO. For that reason you can also place multiple containers inside the FILESTREAM File Group, so that you can spread the I/O during startup over multiple storage systems to get your database as fast as possible into the ONLINE state.

In the CTP1 XTP only supports so-called Hash-Indexes, which are stored entirely in Hash Tables in memory. SQL Server is currently able to Seek and Scan Hash Indexes. Beginning with the CTP2 Microsoft will also introduce so-called Range Indexes, where you can also make Range Queries very, very effective. Range Indexes are based on the principles of a so-called Bw-Tree.

Every In-Memory Optimized table is also compiled down to native C code. For every table you get one DLL, that is compiled through cl.exe (the Microsoft C Compiler, shipped with SQL Server 2014). The generated DLL is afterwards loaded into the process space of sqlservr.exe and can be seen in sys.dm_os_loaded_modules. The compilation itself is done in a separate process, which means you can see cl.exe in Task Manager as long as you are fast enough and catch it 🙂 The following listing shows a typical C code that describes your table.

struct hkt_277576027

{

struct HkSixteenByteData hkc_1;

__int64 hkc_5;

long hkc_2;

long hkc_3;

long hkc_4;

};

struct hkis_27757602700002

{

struct HkSixteenByteData hkc_1;

};

struct hkif_27757602700002

{

struct HkSixteenByteData hkc_1;

};

__int64 CompareSKeyToRow_27757602700002(

struct HkSearchKey const* hkArg0,

struct HkRow const* hkArg1)

{

struct hkis_27757602700002* arg0 = ((struct hkis_27757602700002*)hkArg0);

struct hkt_277576027* arg1 = ((struct hkt_277576027*)hkArg1);

__int64 ret;

ret = (CompareKeys_guid((arg0->hkc_1), (arg1->hkc_1)));

return ret;

}

__int64 CompareRowToRow_27757602700002(

struct HkRow const* hkArg0,

struct HkRow const* hkArg1)

{

struct hkt_277576027* arg0 = ((struct hkt_277576027*)hkArg0);

struct hkt_277576027* arg1 = ((struct hkt_277576027*)hkArg1);

__int64 ret;

ret = (CompareKeys_guid((arg0->hkc_1), (arg1->hkc_1)));

return ret;

}

As you can see, the code itself is not very intuitive, but you can see how your table structure is described through the use of a struct in C. The nice thing about XTP is the complete natural integration of In-Memory Optimized Tables into the rest of the relational engine of SQL Server. So you can query these tables from traditional T-SQL Code, the whole story about Backup/Restore just works, and you also have the complete integration into the HA/DR technologies – Microsoft has done here an amazing amount of work in the area of integration. Besides the In-Memory storage, In-Memory Optimized tables are also avoiding completely Locking/Blocking, and Latching because they are based on the principles of MVCC. We will talk about that later in the section about Lock/Latch Free Data Structures.

The most important thing that you have to be aware of is the fact that you should only move your most performance critical tables to memory, NOT YOUR COMPLETE database! We will talk later in the section about Scalability about use cases where XTP make sense. Normally you have 95% of your database stored with traditional disk based tables, and the remaining 5% are stored with In-Memory Optimized Tables.

Native Compilation

With the In-Memory storage of table data Microsoft addresses the first problem of our current hardware systems: traditional, rotational storage is just slow. The second problem that needs to be addressed is the fact that the clock speed of processors is currently stuck at 3 – 4 GHz. We can’t go further up, because of the high heating that would be introduced. Therefore the current clock cycles must be managed as effectively as possible. This is a huge problem with the current implementation of T-SQL, because T-SQL is just an interpreted language.

During Query Optimization the Query Optimizer of SQL Server produces a so-called Query Tree, and that Query Tree is interpreted during runtime from the top operator down to all siblings. Paul White describes that behavior in an awesome blog posting. This introduces a massive amount of additional CPU instructions that must be executed for every executed Execution Plan in SQL Server. Additionally every operator (a so-called Iterator) is implemented as a C++ class, which also means that so-called Virtual Function Calls are used when executing the various operators. Virtual Function Calls again are very expensive regarding the CPU instructions that are needed for execution. In summary, an Execution Plan that is interpreted during runtime, produces a huge amount of CPU instructions, which means the current CPUs are not as effective as possible. You are just waisting valuable CPU cycles, that could spend otherwise in a more better way and improve the throughput of your workload.

Because of these reasons and limitations inside the Query Engine, SQL Server introduces with XTP so-called Native Compiled Stored Procedures. The idea behind it is very simple: the whole body of a Stored Procedure is just compiled down to native C code, and the result is again a DLL that is loaded into the process space of sqlservr.exe. Therefore you have no interpretation during runtime, and Virtual Function Calls are also eliminated completely. The result is that you can do the same amount of work with fewer CPU instructions, which means the throughput of your workload will be higher, because more work can be done with the available CPU cycles.

During TechEd North America 2013 indicated that they were able to reduce for some specific Stored Procedures the needed CPU instructions from 1.000.000 down to around 40.000. Imagine that performance gain: that’s an improvement of 25x! But as you will see later when we talk about the current limitations in XTP, this improvement is not for free… The following listing shows a typical C code that was generated for a simple Stored Procedure.

HRESULT hkp_309576141(

struct HkProcContext* context,

union HkValue valueArray[],

unsigned char* nullArray)

{

unsigned long yc = 0;

long var_2 = (-2147483647 - 1);

unsigned char var_isnull_2 = 1;

HRESULT hr = 0;

{

var_2 = 0;

var_isnull_2 = 0;

}

yc = (yc + 1);

{

while (1)

{

unsigned char result_7;

unsigned char result_isnull_7;

result_7 = 0;

result_isnull_7 = 0;

if ((! var_isnull_2))

{

result_7 = (var_2 < 10000);

}

else

{

result_isnull_7 = 1;

}

if ((result_isnull_7 || (! result_7)))

{

goto l_5;

}

hr = (YieldCheck(context, yc, 18));

if ((FAILED(hr)))

{

goto l_1;

}

yc = 0;

{

long expr_9;

long expr_10;

long expr_11;

__int64 expr_12;

struct hkt_277576027* rec2_17 = 0;

unsigned char freeRow_17 = 0;

short rowLength;

static wchar_t const hkl_18[] =

{

73,

78,

83,

69,

82,

84,

};

static wchar_t const hkl_19[] =

{

91,

79,

114,

100,

101,

114,

115,

93,

};

static wchar_t const hkl_20[] =

{

91,

79,

114,

100,

101,

114,

73,

68,

93,

};

static wchar_t const hkl_21[] =

{

73,

78,

83,

69,

82,

84,

};

static wchar_t const hkl_22[] =

{

91,

79,

114,

100,

101,

114,

115,

93,

};

static wchar_t const hkl_23[] =

{

91,

67,

117,

115,

116,

111,

109,

101,

114,

73,

68,

93,

};

static wchar_t const hkl_24[] =

{

73,

78,

83,

69,

82,

84,

};

static wchar_t const hkl_25[] =

{

91,

79,

114,

100,

101,

114,

115,

93,

};

static wchar_t const hkl_26[] =

{

91,

80,

114,

111,

100,

117,

99,

116,

73,

68,

93,

};

static wchar_t const hkl_27[] =

{

73,

78,

83,

69,

82,

84,

};

static wchar_t const hkl_28[] =

{

91,

79,

114,

100,

101,

114,

115,

93,

};

static wchar_t const hkl_29[] =

{

91,

81,

117,

97,

110,

116,

105,

116,

121,

93,

};

static wchar_t const hkl_30[] =

{

73,

78,

83,

69,

82,

84,

};

static wchar_t const hkl_31[] =

{

91,

79,

114,

100,

101,

114,

115,

93,

};

static wchar_t const hkl_32[] =

{

91,

80,

114,

105,

99,

101,

93,

};

goto l_16;

l_16:;

expr_9 = 1;

expr_10 = 1;

expr_11 = 1;

expr_12 = 2045;

goto l_15;

l_15:;

rowLength = sizeof(struct hkt_277576027);

hr = (HkRowAlloc((context->Transaction), (Tables[0]), rowLength, ((struct HkRow**)(&rec2_17))));

if ((FAILED(hr)))

{

goto l_8;

}

freeRow_17 = 1;

if ((! (nullArray[1])))

{

(rec2_17->hkc_1) = ((valueArray[1]).SixteenByteData);

}

else

{

hr = -2113929186;

if ((FAILED(hr)))

{

{

CreateError((context->ErrorObject), hr, 5, 18, hkl_20, 16, hkl_19, hkl_18);

}

if ((FAILED(hr)))

{

goto l_8;

}

}

}

(rec2_17->hkc_2) = expr_9;

(rec2_17->hkc_3) = expr_10;

(rec2_17->hkc_4) = expr_11;

(rec2_17->hkc_5) = expr_12;

freeRow_17 = 0;

hr = (HkTableInsert((Tables[0]), (context->Transaction), ((struct HkRow*)rec2_17)));

if ((FAILED(hr)))

{

goto l_8;

}

goto l_13;

l_13:;

goto l_14;

l_14:;

hr = (HkRefreshStatementId((context->Transaction)));

if ((FAILED(hr)))

{

goto l_8;

}

l_8:;

if ((FAILED(hr)))

{

if (freeRow_17)

{

HkTableReleaseUnusedRow(((struct HkRow*)rec2_17), (Tables[0]), (context->Transaction));

}

SetLineNumberForError((context->ErrorObject), 18);

goto l_1;

}

}

yc = (yc + 1);

{

__int64 temp_34;

if ((! var_isnull_2))

{

temp_34 = (((__int64)var_2) + ((__int64)1));

if ((temp_34 < (-2147483647 - 1)))

{

hr = -2113929211;

{

hr = (CreateError((context->ErrorObject), hr, 2, 23, 0));

}

if ((FAILED(hr)))

{

goto l_33;

}

}

if ((temp_34 > 2147483647))

{

hr = -2113929212;

{

hr = (CreateError((context->ErrorObject), hr, 2, 23, 0));

}

if ((FAILED(hr)))

{

goto l_33;

}

}

var_2 = ((long)temp_34);

var_isnull_2 = 0;

}

else

{

var_isnull_2 = 1;

}

l_33:;

if ((FAILED(hr)))

{

SetLineNumberForError((context->ErrorObject), 28);

goto l_1;

}

}

yc = (yc + 1);

}

l_5:;

}

yc = (yc + 1);

((valueArray[0]).SignedIntData) = 0;

l_1:;

return hr;

}

As you can see there are a lot of GOTO statements, which leads to a nice spaghetti code. But that’s a different story… 🙂

Lock/Latch Free Data Structures

When we have talked earlier about the In-Memory Storage of data in XTP, I have also said that SQL Server implements for In-Memory Optimized Tables Lock and Latch Free Data Structures. This just means that there is no waiting because of Locks and Latches involved when you want to read and write your data. A traditional RDBMS like SQL Server always acquires Exclusive Locks (X) for write operations, and Shared Locks (S) for read operations. Both locks are incompatible to each other.

This means readers are blocking writers, and writers are blocking readers. How long Shared Locks are hold are controlled through the various Transaction Isolation Levels. This approach is called Pessimistic Concurrency. With the release of SQL Server 2005 Microsoft also introduced a new concurrency model: Optimistic Concurrency. With Optimistic Concurrency readers are not acquiring Shared Locks anymore. They are reading their data transparently from the Version Store that is persisted in TempDb.

With the new Isolation Level Read Committed Snapshot Isolation (RCSI) you are getting back the version of the record which was valid at the start of your statement, and with the Isolation Level Snapshot Isolation you are getting back the version of the record which was valid at the start of your transactions, which means you get Repeatable Reads in Snapshot Isolation.

Setting one of these new Isolation Levels can have a huge positive impact on the throughput of your workload. But there are still problems that must be solved:

- Writers are still acquiring Exclusive Locks, which means parallel Write Operations are still blocking each other.

- When accessing data structures in memory (Data Pages, Index Pages), these structures must be latched, which means they can be only accessed Single-Threaded. That’s a traditional Multi-Threading Concurrency issue that has to be solved this way.

2. Scalability

By now you have a general idea about the main concepts and reasons behind XTP, but the big question is, in which scenarios XTP makes sense. In my opinion XTP is NOT a technology that has to be deployed everywhere. You need a specific use case where XTP makes sense. And trust me: most of the current SQL Server problems that we are facing today, are just Indexing problems, or problems with bad configured hardware (especially in the area of SANs).

I would NEVER EVER recommend moving to XTP, when you are facing such a problem! Always analyze the root cause, and try to resolve that one in the first step. XTP should be your last resort, because when you are using and implementing parts of your database with XTP, you have to think completely different. And there are a huge amount of different limitations that you have to live with. XTP is wicked fast (I would say *really damm fast*), but it’s not an all-time wonder, which can be applied everywhere 🙂

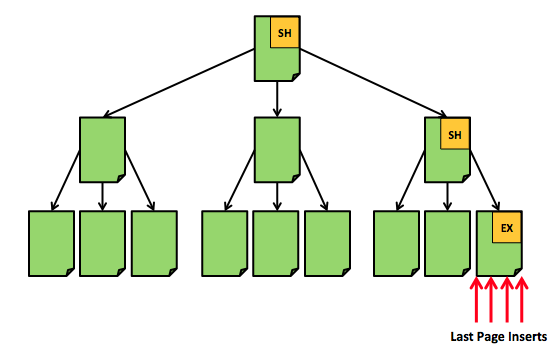

Microsoft positions XTP mainly for overcoming Latch Contention problems. As you have already seen earlier, Latching occurs all the time in memory, when you are accessing pages for read and write activities. When your workload goes up and up and up, at some point in time you introduce Latch Contention because of the Single-Threaded access to those pages in memory. The most traditional example here is the so-called Last Page Insert Latch Contention. If you want to learn more about removing Latch Contention in TempDb, I suggest watching my SQL Server Quickie about it.

This problem is very easy to reproduce: just follow the best practice and create a Clustered Key which is ever increasing to avoid hard Page Splits in your Clustered Index. Your workload will not scale – trust me! The problem here is that you have during INSERT statements a single hot-spot in your Clustered Index – the last page. The following picture gives you can overview of that specific problem.

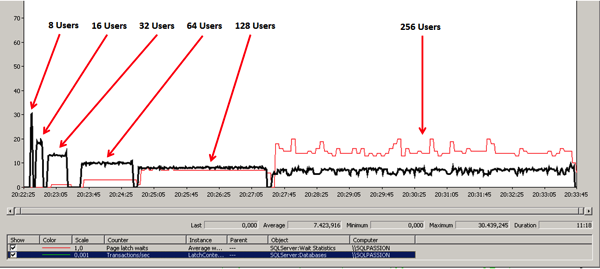

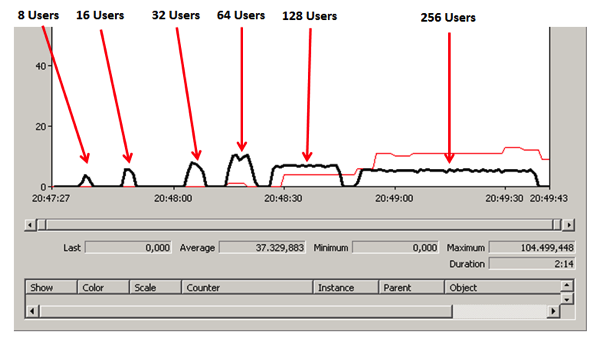

As you can see from the picture, SQL Server has to traverse the right hand side of the Clustered Index from the Index Root Page down to the Leaf Level to insert a new record on the trailing edge of the Clustered Index. So you have a Single-Threaded access to the page in the Leaf Level, which means Single-Thread INSERT operations. This will hurt your performance massively! The following picture shows a simple INSERT statement in table with an INT IDENTITY column (ever increasing, leads to the Last Page Latch Contention!) that I have executed with ostress.exe (part of the RML Utilities from Microsoft) with a different amount of users on a 16 core machine (HP DL180 G6, 2 Quad-Core Sockets with HT enabled, 24 GB RAM, OCZ RevoDrive SSD):

As you can see in the picture, the throughput of the workload decreases as soon as you add additional users, and your Latch Waits just increases – traditional Last Page Insert Latch Contention with an ever increasing value. The contention occurs when accessing Index- and Data-Pages in memory, because of the Latching.

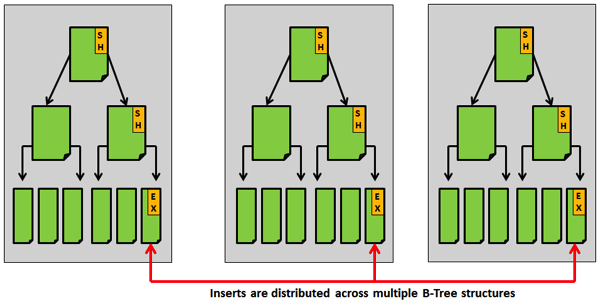

There are several ways to overcome the Last Page Insert Latch Contention:

- Use a random Clustered Key, like a UNIQUEIDENTIFIER to distribute the INSERTs across the whole Leaf Level of the Clustered Index

- Implement Hash Partitioning

- SCHEMA_AND_DATA

- SCHEMA_ONLY

- ASP.NET Session State Database

- ETL Scenarios

3. Limitations

By now everything was great about XTP, and it seems to be a great solution to some specific problems in SQL Server. But XTP has a very high price – a long list with limitations, especially with the CTP1. Here we go with the things that I have found out over the last week (of course there is more documented in Books Online, when you read through everything):

- Differential Backups are not working

- The row size is limited to 8kb, but there are solutions to overcome this limitation: Project Hekaton and BLOBs

- Truncating Memory-Optimized Tables is not supported

- NEWID() is not yet implemented

- Memory-Optimized Tables that are deployed with SCHEMA_AND_DATA need a Primary Key

- ALTER TABLE/ALTER PROCEDURE is not supported

- Foreign-Keys are not supported

- LOB Data Types are not supported

- No recompilations of Native Compiled Stored Procedure. This can lead to badly performing queries due to changed Statistics

- You can’t access traditional disk-based tables in Memory-Optimized Stored Procedures

- The whole table definition must be described in one CREATE TABLE DDL statement (incl. Indexes, Constraints)

- You can’t insert data from a different DB directly into a Memory-Optimized Table, you need a intermediary Staging Table. That’s a huge bummer for the above mentioned ETL scenarios

- …

Don’t ask me how this was possible, I had also not the chance to reproduce it…

Conclusion

XTP is really a wicked fast new technology in SQL Server. The only thing that you have to be aware of is the fact that you need an amazingly fast storage for your Transaction Log, and that it is very easy to hit other bottlenecks in your system, as soon as you deploy a solution based on XTP. I hope that I gave you with this blog posting a good introduction for XTP, and I hope you will enjoy as much as I have done it over the last week.

Thanks for reading

-Klaus

8 thoughts on “Extreme Transaction Processing (XTP, Hekaton) – the solution to everything?”

Very nice Klaus, thank you very much!

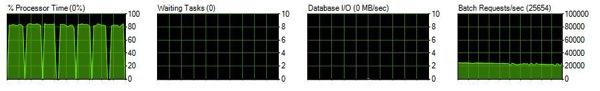

Rocking explanation. Is possible to get scripts for used for Insert test to get 25K batch req/sec?

Hello,

I have just used a simple INSERT statement into a Memory-Optimized table (SCHEMA_ONLY) with a UNIQUEIDENTIFIER Primary Key, and stress-tested it with ostress.exe (part of the RML Utilities), until my CPU was at 100%.

Thanks

-Klaus

Thanks a lot Klaus. I used Schema and Data DURABILITY. Will is make any difference?

Klaus, I just did some test on simple insert on Memory Optimized tables of 1 million rows and observed Batch request/sec not going beyond 1,500.

Am running my test program in 4GB 4Core box. Any help to improvise further.

Hello,

I have used only Schema Durability, because with Schema & Data you are hitting immediately the limits of your Transaction Log.

Regarding your 1,500 Batch Requests/sec:

I have run my tests in a VM with 20 GB RAM, 8 Cores, and everything was stored on a PCI-E based drive. Your hardware really matters here 🙂

Thanks

-Klaus

Got it Klaus. Thx for your info.

Thanks a lot for sharing internals of SQL Server its helping us lot to understand internals.

Worth to say thanks again… 🙂

Thanks for the post, great content 🙂

Hoping that the RTM version allows insert data from SSIS to Memory-Optimized-Tabled (SCHEMA_ONLY) … that would make XTP viable to support ETL staging tables

If not other use case i can see on analyitc workloads is as backend for drillthroughs from SSRS|Excel

Comments are closed.