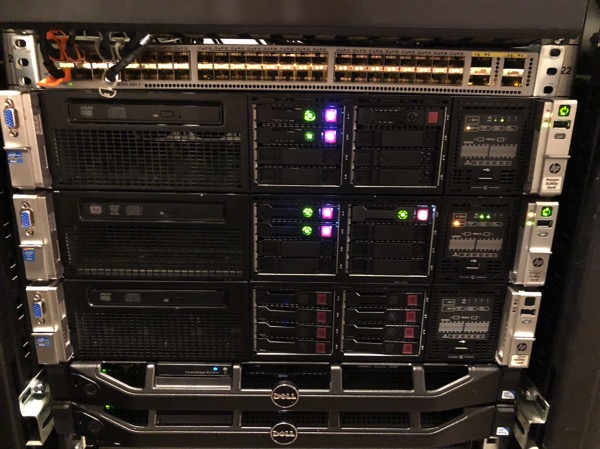

A few days ago I replaced an ESXi Host in my vSAN-enabled vSphere Cluster in preparation for the upcoming migration to vSphere 6.7. As you know from my Home Lab deployment, my vSAN Compute Cluster consists of 3 HP ProLiant Hosts:

- esx1.sqlpassion.com: HP DL380 G8 with Intel Xeon E5-2650 CPUs

- esx2.sqlpassion.com: HP DL380 G8 with Intel Xeon E5-2650 CPUs

- esx3.sqlpassion.com: HP DL180 G6 with Intel Xeon E5620 CPUs

Unfortunately, the Intel Xeon E5620 CPU is no longer compatible with vSphere 6.7, so I decided to replace the HP DL180 G6 Server in my vSAN Cluster with an HP DL380 G8 Server.

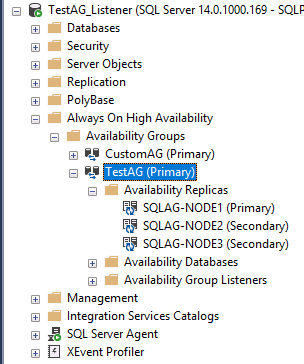

In this blog posting I want to show you the necessary steps to replace a host in a vSAN Cluster without any downtime for the Virtual Machines that are stored in the vSAN Datastore. In my case I was running a database OLTP workload across a SQL Server Availability Group with 3 replicas.

Entering Maintenance Mode

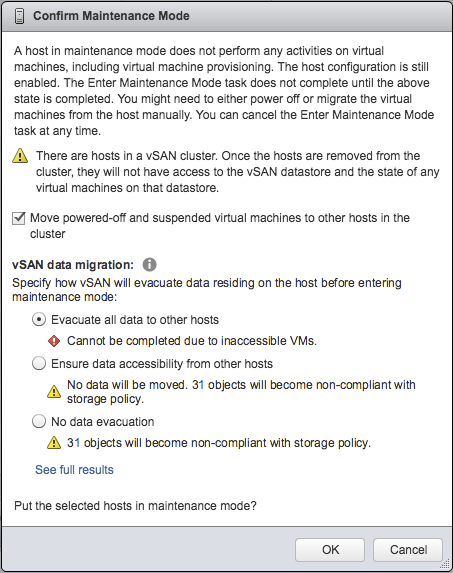

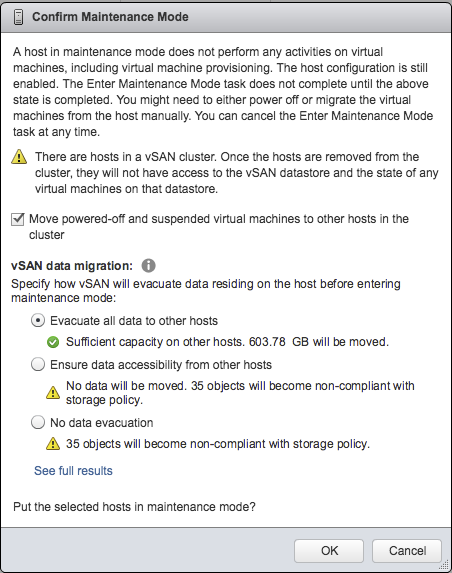

Before I entered Maintenance Mode on esx3.sqlpassion.com, I performed a vMotion operation and moved all running Virtual Machines away from that host. In my case I migrated the third SQL Server Availability Group Replica to esx2.sqlpassion.com. Afterwards you are able to enter Maintenance Mode on the evacuated host. If this host is in a vSAN-enabled Cluster, you must also specify how vSAN will evacuate the data on that host. As you can see from the following picture, you have 3 different options here, which are described in this blog posting in more detail:

- Evacuate all data to other hosts

- Ensure data accessibility from other hosts

- No data evacuation

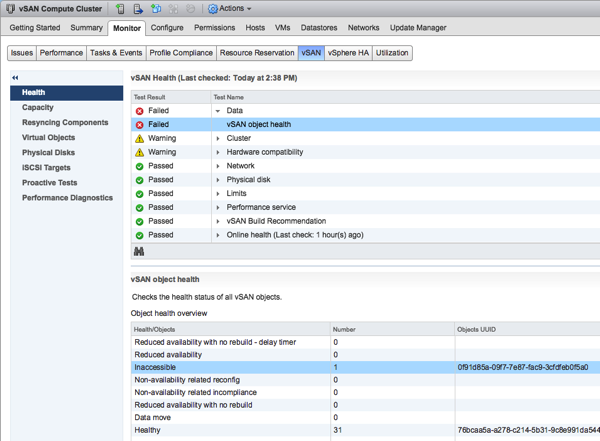

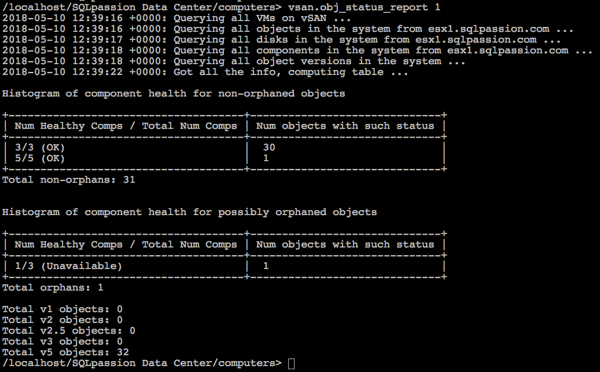

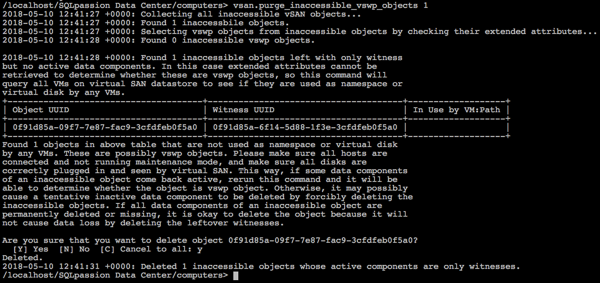

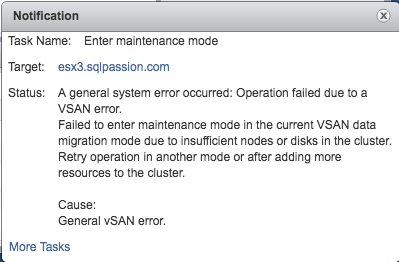

Unfortunately I was not able to enter Maintenance Mode on esx3.sqlpassion.com, because I had some problems with inaccessible objects in my vSAN Datastore.

After some research I came across a blog posting that showed how you can use RVC (the Ruby vSphere Console) to fix that issue.

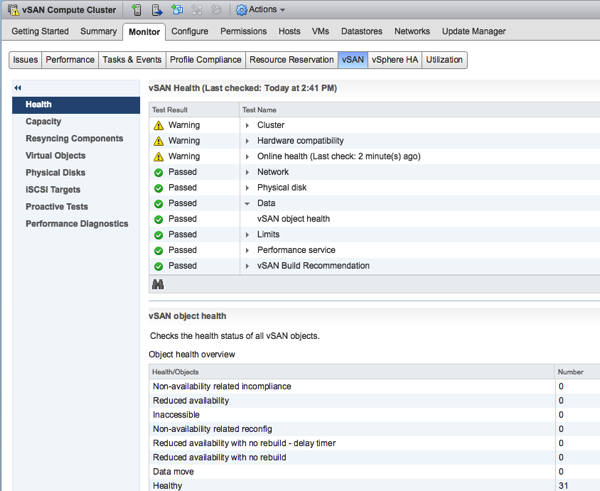

After I had fixed the problem with the inaccessible object in the vSAN Datastore, I was finally able to enter Maintenance Mode on esx3.sqlpassion.com.

My initial goal was to fully evacuate esx3.sqlpassion.com with the first option, “Evacuate all data to other hosts”. Unfortunately you need at least 4 Hosts in your vSAN Cluster to use that option: with an FTT Policy of 1, every mirrored object needs 3 hosts (2 mirror copies and a witness), and the 2 hosts that remain in a 3 Host Cluster cannot hold all of them. So this option was a no-go in my scenario.
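The host-count rule behind this limitation can be written down as a small sketch. It assumes RAID 1 mirroring, where an FTT of n needs 2n + 1 hosts. The function names are made up for illustration and are not part of any VMware API.

```python
def min_hosts_for_mirroring(ftt: int) -> int:
    """Hosts needed for RAID 1 mirroring: ftt + 1 copies plus ftt witnesses."""
    if ftt < 0:
        raise ValueError("ftt must not be negative")
    return 2 * ftt + 1


def can_fully_evacuate(total_hosts: int, ftt: int) -> bool:
    """True if the hosts that remain after removing one can still hold
    fully policy-compliant objects."""
    remaining_hosts = total_hosts - 1
    return remaining_hosts >= min_hosts_for_mirroring(ftt)


# My 3 Host Cluster with FTT = 1: only 2 hosts remain, but 3 are needed.
print(can_fully_evacuate(total_hosts=3, ftt=1))  # False
# With a 4th host, a full evacuation becomes possible.
print(can_fully_evacuate(total_hosts=4, ftt=1))  # True
```

This is only the counting argument. Free capacity on the remaining hosts is a separate requirement that the sketch ignores.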

Therefore I used the second option, “Ensure data accessibility from other hosts”. In my case vSAN had to move around 300 GB of data to my remaining hosts. As you can see from the following picture, it took almost an hour until esx3.sqlpassion.com successfully entered Maintenance Mode. Keep these times in mind if you are dealing with a larger vSAN Datastore!
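To get a feeling for what these numbers mean for a larger datastore, here is a rough back-of-the-envelope sketch. It assumes that the evacuation took a full 60 minutes, that 1 GB equals 1024 MB, and that the duration scales linearly with the amount of data, which a real vSAN Cluster will not do exactly. The function names and the 2 TB example are purely illustrative.

```python
def evacuation_throughput_mb_s(data_gb: float, minutes: float) -> float:
    """Average throughput in MB/s for moving data_gb in the given time."""
    return data_gb * 1024 / (minutes * 60)


def estimate_minutes(data_gb: float, throughput_mb_s: float) -> float:
    """Estimated duration in minutes, assuming a constant throughput."""
    return data_gb * 1024 / throughput_mb_s / 60


# Numbers from my evacuation: around 300 GB in (almost) 60 minutes.
rate = evacuation_throughput_mb_s(data_gb=300, minutes=60)
print(f"Average throughput: {rate:.1f} MB/s")  # Average throughput: 85.3 MB/s

# Hypothetical host that holds 2 TB of data, at the same rate.
hours = estimate_minutes(data_gb=2048, throughput_mb_s=rate) / 60
print(f"Estimated time for 2 TB: {hours:.1f} hours")  # Estimated time for 2 TB: 6.8 hours
```

Treat the result as an order of magnitude only. Disk types, network speed, and the running workload all influence the real duration.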

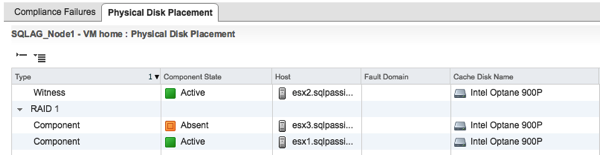

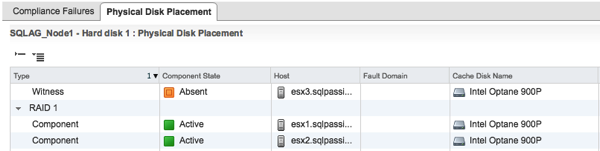

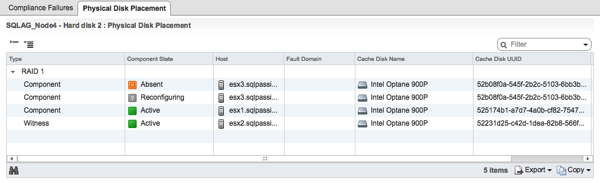

As soon as esx3.sqlpassion.com entered Maintenance Mode, I temporarily lost compliance with my FTT (Failures to Tolerate) Policy of 1, because esx3.sqlpassion.com no longer contributed to the vSAN Datastore.

In my case some RAID 1 Mirror Copies and Witness Components were simply absent. But that is just how vSAN works. Most importantly, these missing components do not affect the availability of your vSAN Datastore. My SQL Server workload was not impacted in any way. It kept running without any interruption!
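Why the objects stay available can be illustrated with a simplified quorum model. The sketch assumes that an object is accessible as long as at least one full mirror copy and more than 50% of its votes are reachable, and that every component has exactly one vote. The component placement below is hypothetical, and real vSAN may distribute components and votes differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    kind: str       # "replica" (mirror copy) or "witness"
    host: str
    votes: int = 1  # assumption: one vote per component


def is_accessible(components: list[Component], hosts_down: set[str]) -> bool:
    """Simplified rule: a surviving mirror copy plus a strict vote majority."""
    alive = [c for c in components if c.host not in hosts_down]
    total_votes = sum(c.votes for c in components)
    alive_votes = sum(c.votes for c in alive)
    has_replica = any(c.kind == "replica" for c in alive)
    return has_replica and alive_votes * 2 > total_votes


# Hypothetical placement of one FTT = 1 object across my 3 hosts.
vm_disk = [
    Component("replica", "esx1.sqlpassion.com"),
    Component("replica", "esx2.sqlpassion.com"),
    Component("witness", "esx3.sqlpassion.com"),
]

# esx3 in Maintenance Mode: 2 of 3 votes remain, the object stays accessible.
print(is_accessible(vm_disk, {"esx3.sqlpassion.com"}))  # True
# A second host fails in the meantime: only 1 of 3 votes remains.
print(is_accessible(vm_disk, {"esx3.sqlpassion.com", "esx1.sqlpassion.com"}))  # False
```

The second call shows the risk you accept during the replacement: while one host is absent, there is no room for another failure.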

Removing the Host from the vSAN Cluster

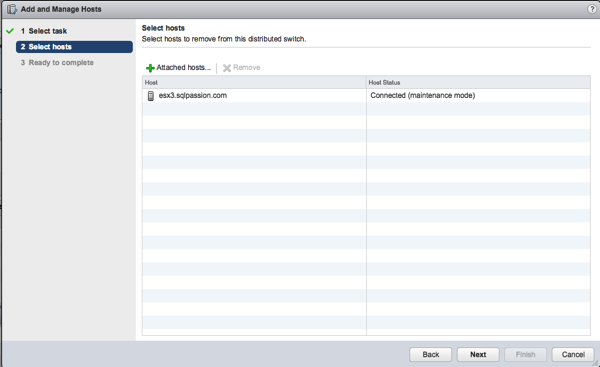

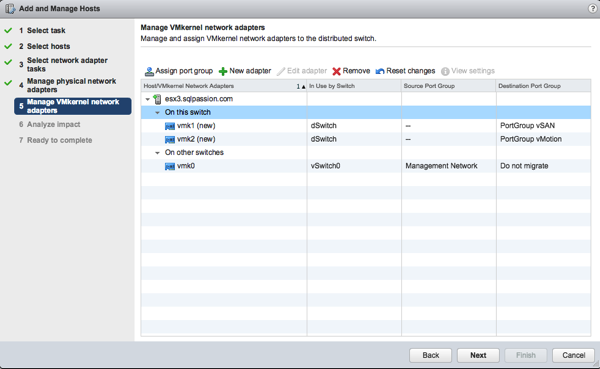

In my Home Lab I’m running the Management Network across a Standard Switch, and the Port Groups for vSAN and vMotion Traffic are managed through a Distributed Switch. Therefore, as a first step, I had to remove esx3.sqlpassion.com from the Distributed Switch. This was quite a simple task, as you can see from the following picture.

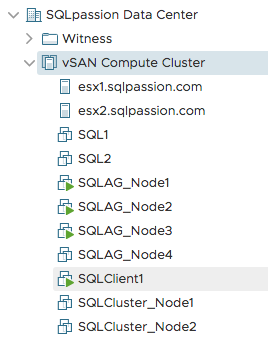

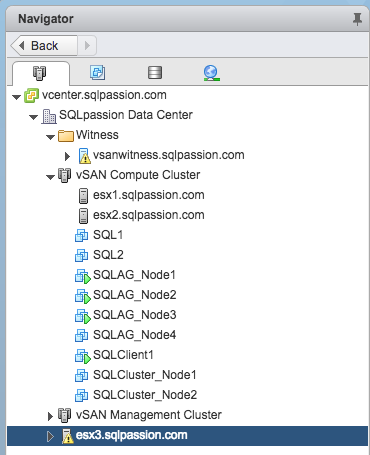

After esx3.sqlpassion.com was removed from the Distributed Switch, I finally removed the ESXi Host from the vSAN Cluster.

As you can see from the previous picture, the Host is now finally removed from the vSAN-enabled Cluster, and my Virtual Machines are still running, without any interruption! Afterwards I powered off esx3.sqlpassion.com and physically removed it from the Server Rack.

Removing the old Server and installing the new Server in the Server Rack

After removing the old HP DL180 G6 Server from the Server Rack, I installed its existing hardware components (Caching Disk, Capacity Disks, 10 GbE NIC) in the new HP DL380 G8 Server, and moved the new HP Server into the Server Rack.

I’m currently running ESXi 6.5 Update 1 in my Home Lab, so I also installed that ESXi version on the newly added server.

Configuring the new ESXi Host for vSAN

After the newly installed ESXi Host was reachable again within my Home Network, I added the Host to my vSphere Inventory, but OUTSIDE of the vSAN Compute Cluster.

Afterwards I added the new ESXi Host to the Distributed Switch and configured the vSAN and vMotion Port Groups accordingly.

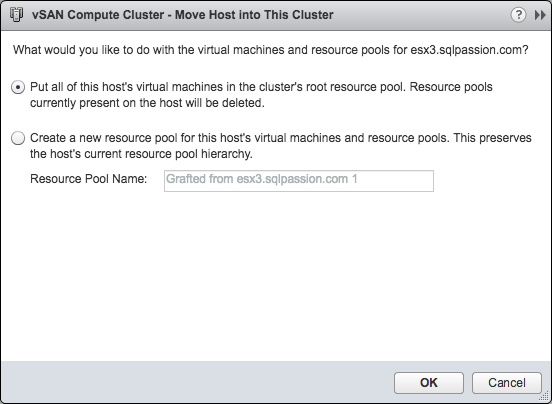

And finally I was able to move the ESXi Host into the vSAN Compute Cluster.

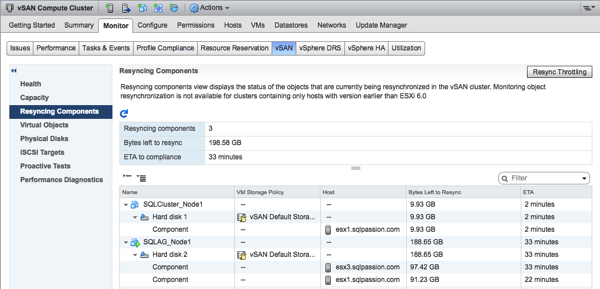

Resynchronizing the vSAN Datastore

As soon as the Caching and Capacity Disks from the old HP DL180 G6 Server were recognized by vSAN, it automatically started the resynchronization of the individual components in the vSAN Datastore. As I said previously, I was running an active workload during the replacement of the host. Therefore the changes that were generated during the host's absence also had to be applied to the added host. As you can see from the following picture, I had around 200 GB across 3 components to resynchronize.

And after the resynchronization had completed successfully, all my vSAN Datastore Components were in the Active State, and they were again compliant with the FTT Policy of 1, without ANY downtime or data loss!

Summary

As you have seen in this blog posting, it’s not a big deal to replace a vSAN Host without any downtime or data loss during normal operations. In my case, of course, the vSAN Datastore Components were temporarily not compliant with my chosen FTT Policy of 1. So for some time I lost the high availability of my vSAN Datastore. If another host had crashed in the meantime, I would also have suffered data loss! This is very important to know.

In addition to replacing esx3.sqlpassion.com, I also upgraded the RAM configuration in each of my 3 vSAN Hosts. Each host now has 256 GB RAM, for a total of 768 GB in the whole vSAN Cluster 🙂

Thanks for your time,

-Klaus
