If you follow me on Twitter, or if you have read my last blog posting about my serious VMware Home Lab deployment, you might know that I really love and work with VMware vSAN. But what exactly is VMware vSAN, and can you use it to run SQL Server workloads? These are the questions that I want to answer in today’s blog posting.

VMware vSAN Overview

vSAN is the Software Defined Storage solution from VMware, and it is tightly integrated into VMware vSphere. Together with ESXi and the Software Defined Networking solution VMware NSX, VMware provides you with a Software Defined Data Center (SDDC) solution. With these 3 components you can achieve a so-called Hyperconverged Infrastructure (HCI), where everything is tightly integrated into the VMkernel for the best possible performance. Instead of using really expensive hardware solutions (like big hardware-based SANs), everything within your Data Center can be virtualized – on traditional x86 hosts.

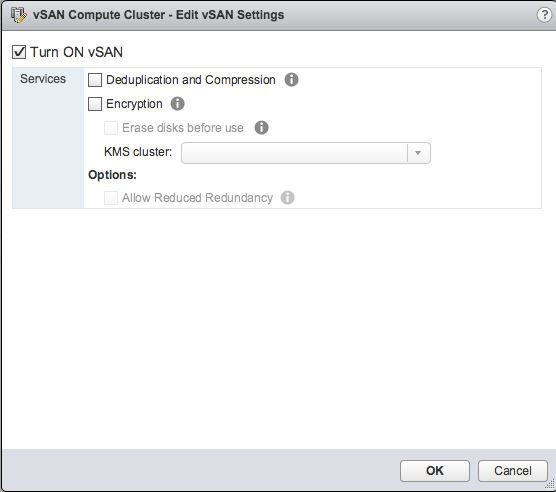

As you know from my previous blog posting, my VMware vSAN based Home Lab runs on 3 Dual Socket HP Servers without any expensive hardware based SAN solution. The whole storage is virtualized and abstracted through VMware vSAN. If you have designed the underlying hardware correctly, it’s quite easy to enable VMware vSAN. It’s just a simple checkbox within your vSphere Cluster:

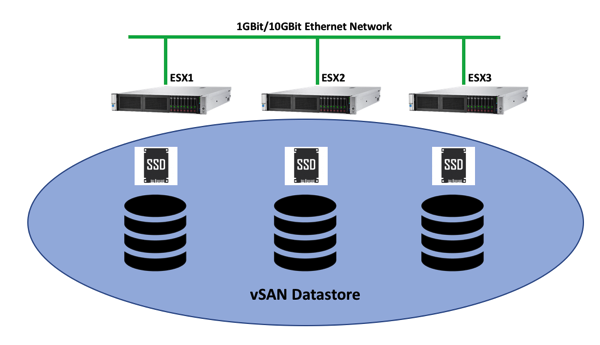

To be able to run VMware vSAN, you must have at least 3 physical hosts running ESXi (together with vSphere). Since the latest release of vSAN it is also possible to deploy vSAN with only 2 physical hosts. Such a deployment is called ROBO – Remote Office/Branch Office. I will blog about that specific deployment and its requirements in an upcoming blog posting. From a scalability perspective you can have up to 64 physical hosts in a vSAN cluster. The following picture gives you an architectural overview of this.

As soon as you have enabled vSAN on your cluster, all the available storage from the individual hosts is combined into one large Datastore where you can deploy your Virtual Machines with different performance characteristics – based on so-called vSAN Storage Policies. I will talk more about these policies in the next section.
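To make the pooling idea a bit more concrete, here is a minimal Python sketch. The host names and disk sizes are made up for illustration, and the sketch assumes that only the Capacity Disks count towards the size of the Datastore (the Caching Disks are left out on purpose). It also ignores any overhead from policies or the file system, so treat the result as a raw figure only.

```python
# Hypothetical inventory: Capacity Disk sizes (in GB) per host.
# Caching Disks are deliberately not listed, because this sketch
# assumes they do not add usable capacity to the Datastore.
capacity_disks_gb = {
    "esx1": [1000],
    "esx2": [1000],
    "esx3": [1000],
}


def raw_datastore_capacity_gb(hosts):
    """Sum the Capacity Disks of every host into one pooled raw figure."""
    return sum(sum(disks) for disks in hosts.values())


raw_gb = raw_datastore_capacity_gb(capacity_disks_gb)
print(f"Raw vSAN Datastore capacity: {raw_gb} GB")
```

How much of that raw capacity you can really use depends on the vSAN Storage Policies that you attach later on.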

To create a vSAN based Datastore, vSAN uses an abstraction called a Disk Group. Each host which contributes storage to your vSAN Datastore must have at least one Disk Group. The Disk Group is used to combine multiple (physical) disks into a logical unit, which is used across the vSAN Datastore. Within a Disk Group you can have 2 different types of disks:

- Caching Disks

- Capacity Disks

For each Disk Group you need at least 1 Caching Disk and 1 Capacity Disk. A Disk Group has exactly 1 Caching Disk and can have up to 7 Capacity Disks, and each physical host can have up to 5 Disk Groups. Therefore a host can have at most 5 Caching Disks (5 x 1) and 35 Capacity Disks (5 x 7). But what is the idea behind a Caching and a Capacity Disk? The initial release of VMware vSAN provided only a so-called Hybrid Configuration:

- The Caching Disk must have been an SSD/NVMe drive

- The Capacity Disks must have been traditional HDD drives

The latest release of VMware vSAN also offers you a so-called All Flash Configuration, where the Capacity Disks can also be SSD drives. In the Hybrid Configuration the Caching Disk is used as a Caching Tier: VMware vSAN uses 70% of the Caching Disk as a Read Cache, and the remaining 30% as a Write Cache. With an All Flash Configuration that behavior changes: the Caching Disk is used entirely as the Write Cache, and all the Reads are served directly from the SSD based Capacity Disks.
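The Disk Group rules and the cache split described above can be written down as a small Python sketch. The `DiskGroup` class and its method names are hypothetical and only model the numbers from this posting (1 Caching Disk, 1 to 7 Capacity Disks, 70/30 split for Hybrid, 100% Write Cache for All Flash). It is not a vSAN API.

```python
from dataclasses import dataclass

MAX_CAPACITY_DISKS_PER_GROUP = 7
MAX_DISK_GROUPS_PER_HOST = 5


@dataclass
class DiskGroup:
    cache_gb: int            # size of the single Caching Disk
    capacity_disks_gb: list  # sizes of the Capacity Disks
    all_flash: bool

    def validate(self):
        """Raise ValueError if the number of Capacity Disks is out of range."""
        count = len(self.capacity_disks_gb)
        if not 1 <= count <= MAX_CAPACITY_DISKS_PER_GROUP:
            raise ValueError(
                f"a Disk Group needs 1 to {MAX_CAPACITY_DISKS_PER_GROUP} "
                f"Capacity Disks, got {count}"
            )

    def cache_split_gb(self):
        """Return (read_cache_gb, write_cache_gb) for the Caching Disk."""
        if self.all_flash:
            return (0, self.cache_gb)        # everything is Write Cache
        read_gb = self.cache_gb * 70 // 100  # 70% Read Cache (integer math)
        return (read_gb, self.cache_gb - read_gb)


# Upper bound of Capacity Disks per host: 5 Disk Groups x 7 disks.
max_capacity_disks_per_host = MAX_DISK_GROUPS_PER_HOST * MAX_CAPACITY_DISKS_PER_GROUP

hybrid = DiskGroup(cache_gb=480, capacity_disks_gb=[1000], all_flash=False)
flash = DiskGroup(cache_gb=480, capacity_disks_gb=[1000], all_flash=True)
print(hybrid.cache_split_gb(), flash.cache_split_gb(), max_capacity_disks_per_host)
```

With a 480 GB Caching Disk, the Hybrid split works out to 336 GB Read Cache and 144 GB Write Cache, while the All Flash group dedicates all 480 GB to writes.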

As you will see later when we talk about vSAN Policies, your Virtual Machine files can reside on different physical hosts than your compute (CPU) and memory (RAM) resources. A vSAN Datastore is therefore stored in a distributed way across all the vSAN Cluster Nodes. Based on that explanation it is self-evident that you also need a reliable and fast network between all your vSAN Cluster Nodes. For the Hybrid Configuration you need at least a 1GBit network link, and for the All Flash Configuration you need at least a 10GBit network link to satisfy the vSAN requirements. In my Home Lab deployment I’m running an All Flash Configuration, and therefore the vSAN Cluster Nodes are connected to each other through a dedicated 10GBit Ethernet network.
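If you want to sanity-check a planned deployment against the numbers mentioned so far (3 to 64 hosts, 2 hosts for ROBO, 1GBit for Hybrid, 10GBit for All Flash), a tiny helper like the following might do. The function name `check_cluster` and its parameters are hypothetical. It only encodes the limits from this posting and is no replacement for the official compatibility checks.

```python
MIN_HOSTS = 3
MIN_HOSTS_ROBO = 2
MAX_HOSTS = 64
MIN_LINK_GBIT = {"hybrid": 1, "all_flash": 10}


def check_cluster(host_count, configuration, link_gbit, robo=False):
    """Return a list of problems; an empty list means the plan looks fine."""
    problems = []
    min_hosts = MIN_HOSTS_ROBO if robo else MIN_HOSTS
    if host_count < min_hosts:
        problems.append(f"need at least {min_hosts} hosts, got {host_count}")
    if host_count > MAX_HOSTS:
        problems.append(f"at most {MAX_HOSTS} hosts are supported, got {host_count}")
    required = MIN_LINK_GBIT[configuration]
    if link_gbit < required:
        problems.append(f"{configuration} needs a {required}GBit link, got {link_gbit}GBit")
    return problems


# The Home Lab from this posting: 3 hosts, All Flash, 10GBit.
home_lab_problems = check_cluster(3, "all_flash", 10)
print(home_lab_problems)
```

An All Flash cluster on a 1GBit link would come back with exactly one problem in the list, the network link.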

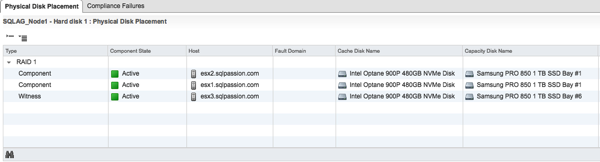

I’m using the Intel Optane 900P 480GB NVMe Drive as a Caching Disk and the Samsung 850 Pro SSD Drive as Capacity Disks on each vSAN Cluster Node (these are only consumer grade disks, which are NOT on the VMware compatibility list!).

VMware vSAN Policies

By now you should have a good understanding of the high-level architecture of VMware vSAN and which hardware components are involved in it. As I have said in the previous section, the data that you store on a vSAN Datastore is distributed across the various vSAN Cluster Nodes. But the real question is: how is the data distributed across the nodes? Well, the actual distribution scheme depends on the chosen vSAN Policy.

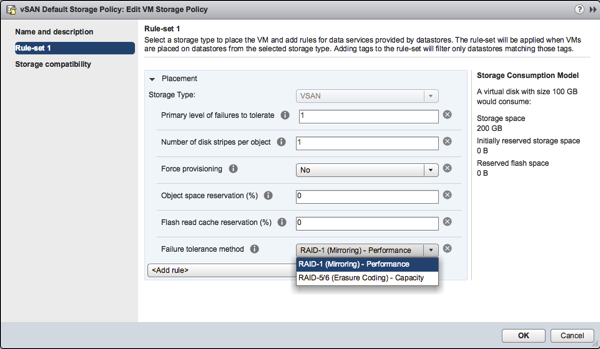

The vSAN Policy defines the performance and the protection that you want to have for your Virtual Machine files. More or less you can decide between 2 “extremes”:

- Performance

- Capacity

When you choose Performance for the Failure Tolerance Method, VMware vSAN uses a simple RAID 1 configuration (Mirroring) across the vSAN Cluster Nodes. To be able to choose Performance for the Failure Tolerance Method, you need at least 3 vSAN Cluster Nodes. With 3 nodes available you can tolerate 1 failure (Failures To Tolerate, FTT = 1). Therefore the data of your Virtual Machine is mirrored across 2 vSAN Cluster Nodes, and the third node acts as a Witness to avoid a Split-Brain scenario if you have a network partition problem.

The advantage of a RAID 1 configuration is great write performance, because you have to write out the data only 2 times to achieve high redundancy. As a drawback you need double the amount of storage space, because everything is stored 2 times.
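The RAID 1 numbers can be generalized a little. The following Python sketch assumes the commonly cited rule that mirroring with FTT = n keeps n + 1 copies of the data and needs 2n + 1 hosts (copies plus witnesses). The function name `raid1_requirements` is hypothetical, and for FTT = 1 it reproduces exactly the 3 nodes and the doubled storage described above.

```python
def raid1_requirements(ftt, vmdk_gb):
    """Hosts, copies and raw space needed to mirror one vmdk (sketch)."""
    copies = ftt + 1         # full replicas of the data
    min_hosts = 2 * ftt + 1  # replicas plus witness(es) to keep a majority
    return {
        "copies": copies,
        "min_hosts": min_hosts,
        "raw_gb": copies * vmdk_gb,
    }


ftt1 = raid1_requirements(ftt=1, vmdk_gb=100)
print(ftt1)
```

For a 100 GB vmdk with FTT = 1 this yields 2 copies, 3 hosts and 200 GB of raw space.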

That is why you can choose in an All Flash Configuration the Failure Tolerance Method Capacity, where VMware vSAN uses a RAID 5/6 configuration in the background for the storage of your data. To be able to use Capacity as the Failure Tolerance Method you need at least 4 vSAN Cluster Nodes for RAID 5 (RAID 6, which tolerates 2 failures, needs at least 6). With RAID 5 your Virtual Machine data is striped across the 4 nodes, and each individual node also stores Parity Information with which you can reconstruct lost data in the case of a failure. Check out my blog posting about the Inner Workings of RAID 5 for more information about it.
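How parity lets you reconstruct lost data is easiest to see with XOR, which is the classic way RAID 5 parity is calculated. The following Python sketch is a generic illustration with 3 data blocks and 1 parity block (matching a 4 node layout). It does not claim to show how vSAN implements parity internally, and the block contents are made up.

```python
def xor_blocks(*blocks):
    """XOR equally sized byte blocks together, byte by byte."""
    result = bytearray(len(blocks[0]))
    for block in blocks:
        for i, value in enumerate(block):
            result[i] ^= value
    return bytes(result)


# One stripe: 3 data blocks plus 1 parity block, each on a different node.
d1, d2, d3 = b"SQL ", b"on v", b"SAN!"
parity = xor_blocks(d1, d2, d3)

# The node holding d2 fails: rebuild it from the survivors and the parity.
rebuilt_d2 = xor_blocks(d1, d3, parity)
print(rebuilt_d2)
```

Because XOR is its own inverse, XOR-ing the surviving blocks with the parity block always yields the missing block again.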

The advantage of a RAID 5/6 configuration is that you need less storage. But on the other hand you have the drawback that writing out the parity information is much more work than a simple RAID 1 mirroring. Therefore I don’t really recommend using a Failure Tolerance Method of Capacity when you need the best possible performance from your vSAN Datastore.
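To put some numbers on this trade-off, here is a rough Python comparison. It assumes the textbook layouts of 3 data + 1 parity for RAID 5 and 4 data + 2 parity for RAID 6, and the textbook read-modify-write cost for a small write (read the old data and the old parity, then write both again). Real vSAN behavior depends on caching and I/O size, so take the I/O counts as a simplification. The function names are hypothetical.

```python
def raid1_raw_gb(vmdk_gb, copies=2):
    """Raw space for mirroring: every copy is stored in full."""
    return vmdk_gb * copies


def parity_raw_gb(vmdk_gb, data, parity):
    """Raw space for a data+parity stripe layout, e.g. 3+1 or 4+2."""
    return vmdk_gb * (data + parity) / data


def parity_small_write_ios(parity):
    """Read old data and each parity block, then write them all again."""
    return 2 * (1 + parity)


vmdk_gb = 300
comparison = {
    "RAID 1": (raid1_raw_gb(vmdk_gb), 2),  # 2 writes, no reads
    "RAID 5": (parity_raw_gb(vmdk_gb, 3, 1), parity_small_write_ios(1)),
    "RAID 6": (parity_raw_gb(vmdk_gb, 4, 2), parity_small_write_ios(2)),
}
for name, (raw_gb, ios) in comparison.items():
    print(f"{name}: {raw_gb:.0f} GB raw, {ios} I/Os per small write")
```

Under these assumptions a 300 GB vmdk needs 600 GB with RAID 1, 400 GB with RAID 5 and 450 GB with RAID 6, while a small write costs 2, 4 and 6 I/Os respectively.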

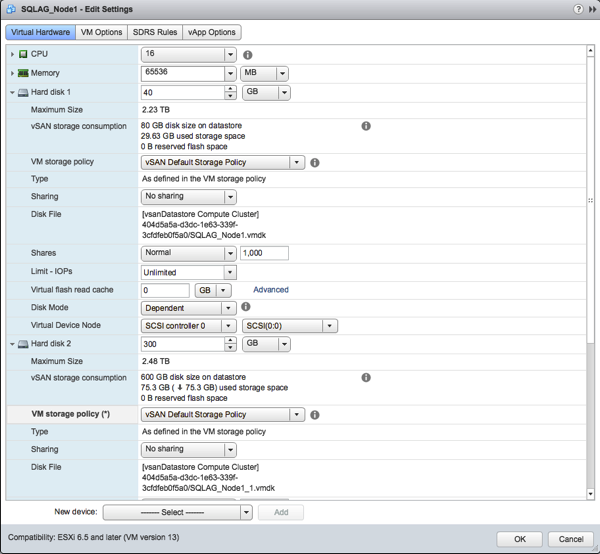

How can you now attach a vSAN Policy to a Virtual Machine? This is not really possible, because a vSAN Policy is attached to an individual vmdk file. And that’s really great! You don’t need dedicated RAID 1 and RAID 5/6 LUNs, as you did in the past with traditional hardware based SAN solutions. You just attach the needed vSAN Policy to your vmdk file, and vSAN takes care of it.

If the vSAN Policy states a RAID 1 configuration, vSAN will mirror your vmdk file across multiple vSAN Cluster Nodes. And if your vSAN Policy states a RAID 5/6 configuration, your vmdk file will be striped across multiple vSAN Cluster Nodes with additional parity information. This is just awesome!

You can just reuse your existing vSAN Datastore for different performance and high availability options – without working with different Datastores. This makes your job as an administrator much easier and you can concentrate on more important things in your life.
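As a thought experiment for a SQL Server VM, the per-vmdk assignment could look like the following Python sketch. The policy names, the vmdk file names and the `StoragePolicy` class are all hypothetical and purely illustrative. In a real environment you would define the policies in vSphere and assign them there.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoragePolicy:
    name: str
    method: str  # "RAID 1" (Performance) or "RAID 5/6" (Capacity)
    ftt: int     # Failures To Tolerate


PERFORMANCE = StoragePolicy("sql-performance", "RAID 1", 1)
CAPACITY = StoragePolicy("sql-capacity", "RAID 5/6", 1)

# One Datastore, one VM, but a different policy per vmdk file.
vmdk_policies = {
    "sql01-os.vmdk": PERFORMANCE,
    "sql01-data.vmdk": PERFORMANCE,
    "sql01-log.vmdk": PERFORMANCE,
    "sql01-backup.vmdk": CAPACITY,
}


def placement(policy):
    """Describe how a vmdk with this policy is laid out across the nodes."""
    if policy.method == "RAID 1":
        return f"mirrored, {policy.ftt + 1} copies"
    return "striped with parity"


for vmdk, policy in vmdk_policies.items():
    print(f"{vmdk}: {policy.name} -> {placement(policy)}")
```

The point of the sketch is only that the latency-sensitive files and the space-hungry files can live on the same vSAN Datastore with different protection schemes.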

Summary

With this blog posting I wanted to give you a general overview of VMware vSAN, and why that Software Defined Storage solution is so powerful. As you have seen, vSAN is very dependent on fast SSD storage – especially in an All Flash Configuration – which is the recommended configuration these days. But the real power of vSAN lies in its vSAN Policies that you can attach to an individual vmdk file. With the vSAN Policy you define how much availability and performance you want to have for your file. It’s as easy as A, B, C.

If you want to know more about how to Design, Deploy, and Optimize SQL Server on VMware – and especially how to run SQL Server on VMware vSAN – make sure to have a look at my webinar that I’m running from May 7 – 9 this year.

Thanks for your time,

-Klaus

4 thoughts on “What is VMware vSAN?”

Hey Klaus,

Thank you for sharing! Very cool lab

“I’m using the Intel Optane 900P 480GB NVMe Drive as a Capacity Disk and the Samsung 850 Pro SSD Drive as Capacity Disks on each vSAN Cluster Node”

Did you mean to say Caching Disk for the Intel Optane 900P 480GB NVMe Drive? Because in the previous post where you described your lab, you mentioned — “I’m using the Intel Optane 900P 480 GB MVMe Disk for the Caching Tier, and currently 1 Samsung PRO 850 1 TB SSD for the Capacity Tier.”

Looking forward to your new blogs.

Thanks,

Denis

Hello Denis,

Thanks for your comment.

It was a typo – of course I’m using the Intel Optane as a Caching Disk

Thanks,

-Klaus

Klaus, when you may, give a try to StarWind VirtualSan, it has 2 very interesting features VMware does not have:

1) level-0 cache implemented in (redundant) RAM

2) LSFS (Log Structured File System), which sorts random writes in RAM before sending them to the underlying disks

I suppose there would be advantage to setting max MTU size to your dedicated network NIC and switch configuration? Not certain how that’s negotiated with the current 10GB standards