You know all the best practices how to choose a Clustered Key value? A good Clustered Key value should have the following 3 properties:

- Narrow

- Static

- Ever Increasing

Let’s have a more detailed look on all 3 properties, and why an ever increasing value doesn’t really scale in SQL Server.

Narrow

A Clustered Key value should be as small as possible. Why? Because it takes space, and the Clustered Key Value also serves a logical pointer in the leaf level in every Non-Clustered Index. If you have a very wide Clustered Key value, you also deal with larger Non-Clustered Indexes. If you have defined a Non-Unique Non-Clustered Index (which is normally the case), the Clustered Key value is also part of the navigation structure of your Non-Clustered Index. Therefore the overhead in your index gets very large. And our goal is to minimize overhead on our index pages. Overhead that we have to pay in the physical storage, and also in the Buffer Pool, where SQL Server caches the read index pages from storage.

Normally you choose a technical key value (like INT/BIGINT data type) instead of a natural key value. I have already seen over the years Clustered Key value lengths of 100 bytes and more (combinations of LastName, FirstName, SocialSecurityNumber, etc.). Believe me – you are just waisting memory! You don’t have to do that. Choose a technical key, and you are fine.

Static

Because the Clustered Key value is replicated in every Non-Clustered Index, your Clustered Key value should never ever change! Otherwise SQL Server has to maintain transparently in your UPDATE Execution Plan EVERY Non-Clustered Index that you have defined on your table. You are again just introducing additional computing overhead that you don’t need. Use your CPU cycles for more other important stuff. As you know, natural key values can change (like a LastName column, when you get married).

A technical key value (like an INT IDENTITY) can’t change (by default). Therefore the logical pointer (in the form of the Clustered Key value) in all your Non-Clustered Indexes remains stable – without any need to change them – forever!

Ever Increasing

And the 3rd final important property of a “good” Clustered Key value is that the chosen column should give you ever-increasing values. Why? Because you are always adding additional records at the end of your Clustered Index, and therefore you are avoiding expensive Page Splits (regarding CPU cycles, and Transaction Log overhead) and Index Fragmentation. In 99% of all cases you will be fine with an ever-increasing value like an INT IDENTITY column, but there are some scenarios, where this approach can lead to serious scalability problems. Imagine you have a workload, where a lot of different users are inserting permanently into the same table with an ever-increasing Clustered Key value. Just think a second about about Logging/Auditing tables.

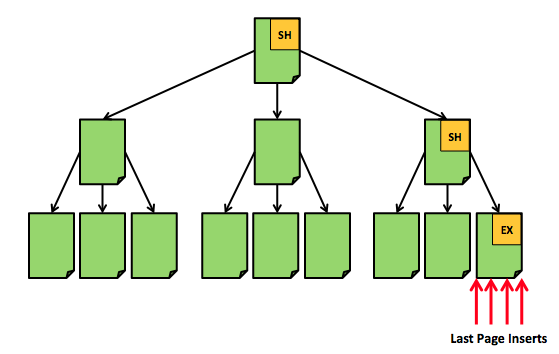

Let’s have a more detailed look on what happens internally in SQL Server, when you reading and writing pages in memory. When SQL Server accesses certain memory structures (like pages that are stored in the Buffer Pool), these memory accesses must be synchronized among multiple threads. You can’t write concurrently to the same page in memory. When a thread writes to a page, some other thread can’t read the page at the same time. In traditional concurrent programming you solve that problem with Mutexes – like a Critical Section. Certain code paths are just mutually exclusive. A Latch in SQL Server is almost the same as a Critical Section. And latches are used to synchronize threads/queries among each other. Every time when you read a page, the worker thread has to acquire a Shared Latch (SH), every time when you write a page, the worker thread has to acquire an Exclusive Latch (EX). And both latches are incompatible to each other.

When you now perform an INSERT statement, the worker thread exclusively latches the page where the INSERT statement occurs. In the mean time no one else can read and write from/to this page. With an ever-increasing Clustered Key value this approach doesn’t really scale, because you are just inserting your records at the end of your Clustered Index. Therefore your parallel threads/queries are contending about an Exclusive Latch on the same last page in your Clustered Index. As a side-effect SQL Server executes your INSERT statement serially – one INSERT after the next one. You have hit the famous Last Page Insert Latch Contention. Let’s have a look at the following picture.

With the best practice of an ever-increasing Clustered Key value you have a single hotspot at the end of your Clustered Key. The smaller your records are, the more contention you are introducing here. How can you solve that problem? Easy: spread your INSERT statements across the whole B-Tree structure of the Clustered Index. There are various approaches how you can achieve that:

- Use a random Clustered Key value (like a UNIQUEIDENTIFIER). But be aware of the side-effects: larger logical pointer in EVERY Non-Clustered Index, Page Splits…)

- Implement Hash Partitioning, if you are using the Enterprise Edition of SQL Server.

- Eliminate latching through the use of In-Memory OLTP, that is part of SQL Server 2014.

- Use a so-called Reverse Index. Unfortunately SQL Server doesn’t provide you that kind of index out-of-the box, like Oracle. But you can implement it at your own…

Summary

At 99% you will be fine with a narrow, static, and ever-increasing Clustered Key value like an INT IDENTITY data type. But in some rare scenarios where you need a huge amount of parallel INSERT statements (like Logging/Auditing tables), you can hit the Last Page Insert Latch Contention with that approach. If you hit that specific problem, you have to leave your comfort zone, and you have to make sure that you spread the INSERT statements across your whole B-Tree structure. You are mainly fighting against a limitation of how multi-threaded access happens in a traditional B-Tree structure.

I hope that I have given you with that blog posting a good insight, why ever-increasing Clustered Key values can hurt the scalability of your tables.

If you are more interested in how to choose the right Clustered Key Value, I’m also offering a 1-hour long training video through the SQLpassion Online Academy.

Thanks for reading!

-Klaus

20 thoughts on “A ever-increasing Clustered Key value doesn’t scale!”

For random but narrow BIGINT values for this scenario, very little beats creating a GUID then hashing it with FNV1a, to a bigint.

Hello Mark,

There are many approaches how to get a random key value.

Hashing of course is also an option 🙂

Thanks for your comment.

-Klaus

Using a cheap hash like FNV1a (or as I like to do: MurmurHash) is a really good idea Mark. One thing you might try is to bit fiddle a bit with the hash like this (pseudocode as SQL server lacks bitshift):

DECLARE @guid = NEWSEQUENTIALGUID()

DECLARE @hash BIGINT = FNV1a(NEXT ID FROM SEQUENCE)

SET @hash = @hash & 0x00FFFFFFFFFFFFFF | (@guid 0x00000000000000FF << 56)

Basically, take the sequential part of the GUID and move it to the topmost byte (or bytes). This gives you the benefits of relatively sequential keys while maintaining a good spread of values.

And Hekaton solves this contention problem how?

🙂

Hello Jim,

Hekaton uses Hashtables, and Bw-Tree structures internally, which are latch-free. Therefore this problem doesn’t exist.

Thanks

-Klaus

Bw trees and hash tables have their own problems. Try to run an UPDATE heavy workload (like TPC-C) that has a lot of UPDATE contention on a few rows in a single table (like WAREHOUSE)

Nice article, Klaus.

However, I wish you’d put the first line of the Summary is an font at least as big as the header, because that’s the clause my devs will suffer a blind spot 😉

Haha 😉

I was told 5 or 6 years ago (by more than one CAT team guys) that they optimized the SQL code to accomodate this hot spot and scale very well – the complete opposite of what you’re saying. SQL 2000 and possibly 2005 had the hot spot problem, but a service pack took care of it. And in reality in production my servers perform very well with it.

Hello Dennis,

Thanks for your comment.

No, that code can’t be optimized in any way, because it’s just a technical limitation of the B-Tree structure, and how the synchronization has to be handled between multiple reader/writer threads.

It’s also very easy to reproduce: just insert across multiple queries (e.g. use ostress.exe) with an ever-increasing Clustered Key value. It will not scale!

-Klaus

A few things here:

1) no – this code has not been optimised. In fact, I wrote a blog entry on sqlcat.com (when it was online) describing exactly the issue. You can still read about it on the latch/spinlock paper

2) klaus – it is not actually the case that there is no way around this problem with B+ trees. There ARE ways to avoid this (Oracle has solved it) but fixing it is a very intrusive and risky code change.

Hello Thomas,

Thanks for your comment, and the clarification that there are ways to avoid that specific problem.

-Klaus

Hi Klaus/Thomas,

I’m interested in the recommendation to use MSSS2K14 to eliminate latching that creates hotspots for clustered indexes on sequential page writes. BTW – Try adding an INSERT trigger to your page writes if you really want to see hot spots with your sequential writes.

Is latch elimination the byproduct of a multi-processor/flash drive implementation or are there architectural advantages here to traditional hardware as well? For instance, performance issues associated with flash drive erase cycles is reduced using sequential writes but that issue is unique to flash storage. Also, do you see programmable/configurable opportunities in Hekaton to optimize sequential write performance or to avoid update contention in the cache layer that Thomas mentioned? Or, will it be strictly a matter of trying to leverage kernel advances with an effective database structure?

David

Hi Klaus

Thanks for a very informative post. This is something you mentioned at SQL Relay in Bristol but I didn’t get chance to ask then how you spot the problem; what specific indicators are there that this is an issue and how can it be proved?

Thanks in advance

Martyn

Hello Martyn,

Thanks for your comment.

In one of my upcoming blog postings I will show how to reproduce, identify, and finally resolve latch contention.

Thanks

-Klaus

Identity column is ideal as a clustering key for static, rarely updated tables – reference tables.

For tables that are constantly growing and updated the ideal clustering key is a composite one with identity column is a last one that makes it unique. Other columns in the composite clustering key should be columns that cover most of searches / queries. For example, Orders should have Customer as first column in the composite key and identity (order number) as a last one. Having such clustering key eliminates hot spot on insert and keeps all customer related tables physically together. Having unique index on identity column also allows direct access to the data.

Memory, speed and storage today is not an issue anymore. Saying that I’ve seen plenty of cases when a superb hardware stays useless because of bad clustering indexes design.

Dear sir- In order to avoid last page insert contention – I have seen your video for possible solutions to it. Just want to confirm whether enabling the TF 1118 is also a solution for this. Thanks in advance.

Sir- got the answer. please ignore. Thanks as always.

Hi Klaus,

I read the hot spot contention is a myth, they fixed after SQL 2005. Is that correct?

https://stackoverflow.com/questions/5302540/avoid-creating-a-clustered-index-based-on-an-incrementing-key

Hello,

Thanks for your comment.

This problem can’t be fixed, because it’s just by design in current RDBMS implementations.

Please check out the following blog posting for more details about it: https://www.sqlpassion.at/archive/2014/04/15/an-ever-increasing-clustered-key-value-doesnt-scale/

Thanks,

-Klaus