A few weeks ago, I blogged about Latches and Spinlocks in SQL Server. Both synchronization primitives protect shared data structures within SQL Server, such as pages in the Buffer Pool (through Latches) or locks in the Lock Manager’s hashtable (through a Spinlock). A completely different synchronization paradigm that you will see more and more is the so-called Lock-Free Data Structure. It is also one of the foundations on which In-Memory OLTP in SQL Server 2014 is built. In today’s blog posting I therefore want to give you a quick overview of Lock-Free Data Structures and what they have to offer.

What are Lock-Free Data Structures?

A lock-free algorithm protects a shared data structure without blocking. As you have already seen in the previous blog postings about Latches and Spinlocks, threads are blocked when they can’t acquire a Latch or a Spinlock. When a thread waits for a Latch, SQL Server puts the thread into the SUSPENDED state; when a thread waits for a Spinlock, it has to spin actively on the CPU. Both approaches lead to a blocking scenario, which a Non-Blocking Algorithm avoids. Wikipedia has a really nice explanation of Non-Blocking Algorithms:

“A non-blocking algorithm ensures that threads competing for a shared resource do not have their execution indefinitely postponed by mutual exclusion. A non-blocking algorithm is lock-free if there is guaranteed system-wide progress regardless of scheduling.”

The most important point to pick up from this explanation is that a thread will not be blocked by another thread. This is possible because no traditional locks are used for the thread synchronization itself. To see the difference, let’s first have a more detailed look at a concrete example of the traditional approach.

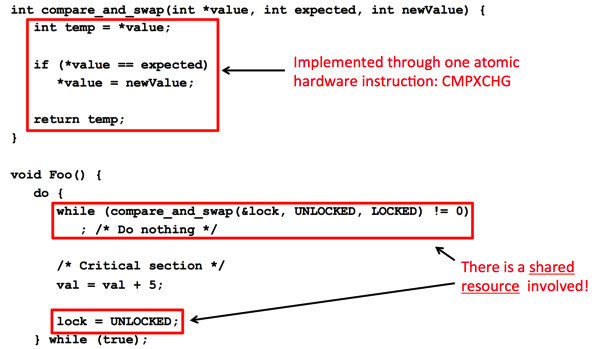

Let’s go through this code step-by-step. First of all, the function compare_and_swap is implemented through one atomic hardware instruction directly at the CPU level: CMPXCHG (on multi-processor x86 systems it is combined with the LOCK prefix so that it is atomic across cores). The code just illustrates the logic that CMPXCHG implements: you compare the value at a memory location to an expected value, and if they are the same, the memory location is set to a new value. Because the whole logic is executed as one atomic unit on the CPU itself, no other thread can interrupt it or observe it halfway through.
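The compare-and-swap logic described above can also be sketched in portable C++ with std::atomic. This is only a minimal sketch: the name compare_and_swap comes from the text, but the signature and the return convention (returning the previous value) are assumptions of mine. On x86, compilers typically translate such a call into a LOCK CMPXCHG instruction.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical helper (signature is an assumption, not taken from the post).
// Atomically: if target == expected, store new_value into target.
// Returns the value that target held before the call in either case.
int compare_and_swap(std::atomic<int>& target, int expected, int new_value)
{
    // On success, `expected` is left untouched (it equals the old value).
    // On failure, compare_exchange_strong copies the current value of
    // target into `expected`, so `expected` again holds the old value.
    target.compare_exchange_strong(expected, new_value);
    return expected;
}
```

A caller can tell whether the swap happened by comparing the return value with the expected value it passed in.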

To store the state of the spinlock itself, a variable with the name lock is used. A thread has to spin in the while loop until the spinlock synchronization variable is unlocked. As soon as that happens, the thread can lock the synchronization variable and finally enter the critical section. Keep in mind that the code shown is again just a simplified (and itself not thread-safe!) illustration of a Spinlock; things are more complicated in reality.
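For comparison, here is a minimal sketch of how a thread-safe spinlock could be built on top of compare-and-swap in C++. The class name SpinLock and its methods are hypothetical, and this is in no way how SQL Server implements its spinlocks internally; it only shows the spin-until-unlocked idea.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical spinlock sketch: 0 = unlocked, 1 = locked.
class SpinLock {
public:
    // One acquisition attempt: succeeds only if the flag is currently 0.
    bool try_lock()
    {
        int expected = 0;
        return flag_.compare_exchange_strong(expected, 1,
                                             std::memory_order_acquire);
    }

    // Spins actively on the CPU until the flag could be flipped from 0 to 1.
    void lock()
    {
        while (!try_lock()) {
            // busy wait: another thread currently holds the lock
        }
    }

    // Releases the lock so that one spinning thread can acquire it.
    void unlock() { flag_.store(0, std::memory_order_release); }

private:
    std::atomic<int> flag_{0};
};
```

Every thread that wants to enter the critical section has to go through the single flag_ variable, and while one thread holds it, every try_lock call of the other threads fails.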

The largest problem with this traditional approach is that the thread synchronization depends on a shared resource: the spinlock synchronization variable lock. If one thread holds the spinlock and gets suspended, all the other threads are stuck in the while loop when they try to acquire the spinlock. You can avoid this problem by introducing a lock-free coding technique.

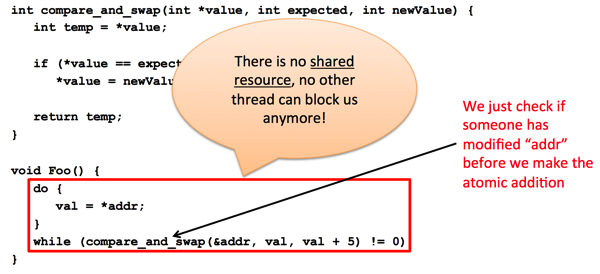

As you can see, the implementation of the method Foo has completely changed. Instead of trying to acquire a spinlock, the implementation just checks whether some other thread has modified the shared variable (earlier protected through a Spinlock) before the atomic addition is performed. This means that there is no separate lock variable anymore that a suspended thread could hold, so threads no longer block each other. That’s the main idea of Lock-Free Data Structures and Non-Blocking Algorithms.
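The check-then-swap idea described above can be sketched in C++ as follows. This is a minimal sketch under my own assumptions: the names shared_value and try_add_one are hypothetical, and the body of Foo is a typical retry loop, not a transcription of the code in the screenshot.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical stand-in for the shared variable that the spinlock protected.
std::atomic<int> shared_value{0};

// A single attempt: succeeds only if shared_value still equals `observed`,
// i.e. no other thread has modified it since we read it.
bool try_add_one(int observed)
{
    return shared_value.compare_exchange_strong(observed, observed + 1);
}

// Lock-free increment: retry until one attempt succeeds.
// Returns the value that this call installed.
int Foo()
{
    int observed = shared_value.load();
    // A failed compare_exchange_weak refreshes `observed` with the
    // current value, so the next attempt works on up-to-date data.
    while (!shared_value.compare_exchange_weak(observed, observed + 1)) {
        // another thread got in first (or the weak CAS failed spuriously)
    }
    return observed + 1;
}
```

If a thread is suspended between reading the value and attempting the swap, nobody else is held up: the other threads simply complete their own swaps, and the suspended thread retries with a fresh value once it runs again.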

In-Memory OLTP in SQL Server 2014 uses the same approach to install page changes in the mapping table of the Bw-Tree. Therefore there is no locking, latching, or spinlocking involved. If In-Memory OLTP “sees” that the address of a page in the mapping table has changed, it means that another thread has started a modification on that page but hasn’t completed it yet (because some other thread was scheduled on the CPU in the meantime). The individual threads in In-Memory OLTP work together in a cooperative fashion. Therefore it is possible that the thread that has seen the modification in the mapping table just completes the “pending” operation, like a page split.
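To make the mapping table idea a little more tangible, here is a heavily simplified sketch in C++. All names (Page, mapping_table, install_page) and the fixed table size are hypothetical, and this is not SQL Server’s actual implementation; it only illustrates how a new page address could be installed into a slot with a single compare-and-swap, and how a thread notices that the address has changed in the meantime.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>

// Hypothetical page representation; real Bw-Tree pages are far richer.
struct Page {
    int version;
};

// Hypothetical mapping table: slot i holds the current address of page i.
constexpr std::size_t kSlots = 4;
std::atomic<Page*> mapping_table[kSlots];

// Tries to swing slot `id` from the address `seen` to the address `updated`.
// Returns false if the slot no longer contains `seen`, which means that
// another thread has installed a different address in the meantime.
bool install_page(std::size_t id, Page* seen, Page* updated)
{
    return mapping_table[id].compare_exchange_strong(seen, updated);
}
```

A thread whose install_page call returns false has not been blocked at any point. It can re-read the slot and decide what to do next, for example retry its own change on top of the new page state.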

A page split in In-Memory OLTP consists of multiple atomic operations. Therefore one thread can begin a page split, and another thread can finally finish this page split. In a future blog posting I will also talk a little bit more about these types of page splits, and which changes are implemented in the Bw-Tree to make this sophisticated approach possible.

Summary

In today’s blog posting I have introduced the main idea behind Lock-Free Data Structures to you. The main idea is that a thread checks whether other threads have already modified the shared data before it tries to execute its own atomic operation. Therefore there is no longer any need to protect a critical section through a synchronization primitive like a spinlock. The idea of Lock-Free Data Structures and Non-Blocking Algorithms is also used by In-Memory OLTP, which was first introduced with SQL Server 2014.

Thanks for your time,

-Klaus